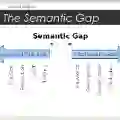

Because of the large semantic gap between natural and formal languages, neural semantic parsing is typically bottlenecked by the complexity of handling both input semantics and output syntax. Recent work has proposed several forms of supplementary supervision, but none generalizes across multiple formal languages. This paper proposes a unified intermediate representation (IR) for graph query languages, named GraphQ IR. It has a natural-language-like surface form that bridges the semantic gap and a formally defined syntax that preserves the graph structure. As a result, a neural semantic parser can convert user queries into GraphQ IR more accurately, and the IR can then be losslessly compiled into various downstream graph query languages. Extensive experiments on several benchmarks, including KQA Pro, Overnight, GrailQA, and MetaQA-Cypher, under standard i.i.d., out-of-distribution, and low-resource settings show that GraphQ IR outperforms the previous state of the art, with accuracy improvements of up to 11%.
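To make the pipeline concrete, the following is a minimal Python sketch of the general idea behind a shared IR. A parser only has to produce one natural-language-like, formally parseable form, and separate deterministic compilers then emit each target query language. Everything here is a hypothetical illustration, not GraphQ IR's actual grammar or compiler: the toy grammar `find <concept> that <relation> <anchor>`, the `Query` class, `parse_ir`, `to_sparql`, and `to_cypher`. The SPARQL output uses placeholder predicates (`<type>`, `<name>`) rather than a real knowledge-base schema.

```python
import re
from dataclasses import dataclass

# Hypothetical toy IR, NOT the real GraphQ IR grammar: a single pattern
# "find <concept> that <relation> <anchor>" with one-token concept/relation.
_PATTERN = re.compile(r"^find (\S+) that (\S+) (.+)$")


@dataclass(frozen=True)
class Query:
    concept: str   # type/label of the answer entities, e.g. "Person"
    relation: str  # edge label from the answer to the anchor entity
    anchor: str    # name of the anchor entity

    def to_text(self) -> str:
        # Natural-language-like surface form a seq2seq parser could target.
        return f"find {self.concept} that {self.relation} {self.anchor}"


def parse_ir(text: str) -> Query:
    # Formally defined syntax: the surface form parses back to one structure.
    m = _PATTERN.match(text.strip())
    if m is None:
        raise ValueError(f"not a valid IR query: {text!r}")
    return Query(*m.groups())


def to_sparql(q: Query) -> str:
    # Placeholder predicates; no escaping of quotes (illustration only).
    return (
        "SELECT ?x WHERE { "
        f'?x <type> "{q.concept}" . '
        f'?a <name> "{q.anchor}" . '
        f"?x <{q.relation}> ?a . }}"
    )


def to_cypher(q: Query) -> str:
    # Concept becomes a node label, relation becomes a relationship type.
    return (
        f"MATCH (x:{q.concept})-[:{q.relation}]->(a {{name: '{q.anchor}'}}) "
        "RETURN x"
    )


if __name__ == "__main__":
    q = parse_ir("find Person that educated_at Harvard University")
    print(to_sparql(q))
    print(to_cypher(q))
```

The design choice being illustrated is that the neural model never sees backend-specific syntax. Adding another target language means writing one more `to_*` function over the same `Query` structure, and because `parse_ir(q.to_text()) == q` holds for this toy grammar, no information is lost between the surface form and the compiled queries.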