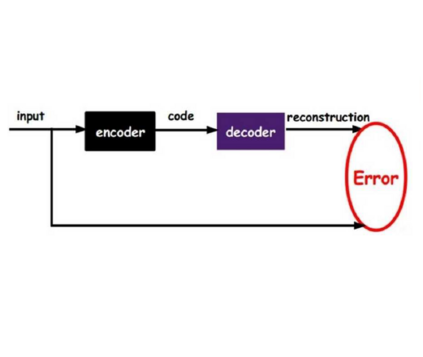

We propose a novel strategy for Neural Architecture Search (NAS) based on Bregman iterations. Starting from a sparse neural network, our gradient-based one-shot algorithm gradually adds relevant parameters in an inverse scale space manner. This allows the network to select, from the search space, the architecture best suited to a given task, e.g., by adding neurons or skip connections. We demonstrate that, using our approach, one can unveil, for instance, residual autoencoders for denoising, deblurring, and classification tasks. Code is available at https://github.com/TimRoith/BregmanLearning.
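
To make "inverse scale space" concrete, here is a minimal sketch of a linearized Bregman iteration with an ℓ1 regularizer, written in Python with NumPy on a hypothetical toy problem. It is not the implementation in the BregmanLearning repository. The quadratic loss, the helper names `soft_threshold` and `linearized_bregman`, and the values of `lam`, `delta`, and `tau` are illustrative assumptions, and the method described above acts on the weights of a neural network rather than on a six-entry vector. What the sketch shows is the mechanism: all parameters start at zero, a subgradient variable `v` accumulates gradient information, and an entry of `theta` becomes nonzero only once `|v_i|` exceeds `lam`, so entries with a stronger signal are switched on earlier.

```python
import numpy as np


def soft_threshold(v, lam):
    """Proximal map of lam * ||.||_1: shrink every entry of v toward zero by lam."""
    return np.sign(v) * np.maximum(np.abs(v) - lam, 0.0)


def linearized_bregman(grad, dim, lam=1.0, delta=1.0, tau=0.1, steps=500):
    """Linearized Bregman iteration for the (assumed) regularizer J = lam * ||.||_1.

    v accumulates negative loss gradients; theta = delta * soft_threshold(v, lam)
    stays exactly zero until |v_i| exceeds lam, so parameters switch on one by one.
    Returns the final parameters and, per entry, the first step at which it
    became nonzero (-1 if it never did).
    """
    v = np.zeros(dim)
    theta = np.zeros(dim)                      # start from the sparsest possible model
    first_active = np.full(dim, -1)
    for k in range(steps):
        v = v - tau * grad(theta)              # gradient step on the subgradient variable
        theta = delta * soft_threshold(v, lam) # primal update via the proximal map
        newly_on = (theta != 0) & (first_active < 0)
        first_active[newly_on] = k             # record when each entry enters the model
    return theta, first_active


# Hypothetical toy loss L(theta) = 0.5 * ||theta - target||^2 with a sparse target.
target = np.array([0.0, 3.0, 0.0, -1.0, 0.5, 0.0])
theta, first_active = linearized_bregman(lambda th: th - target, dim=target.size)

print(first_active)          # larger-magnitude entries of target switch on first
print(np.round(theta, 4))    # approaches target; entries with zero target stay zero
```

With these settings the entry whose target is 3.0 activates first, then -1.0, then 0.5, while entries with a zero target are never activated. In a network, the same thresholding rule would decide which weights enter the model; extending it to whole neurons or connections (for example via a group-wise regularizer) is not shown here.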
