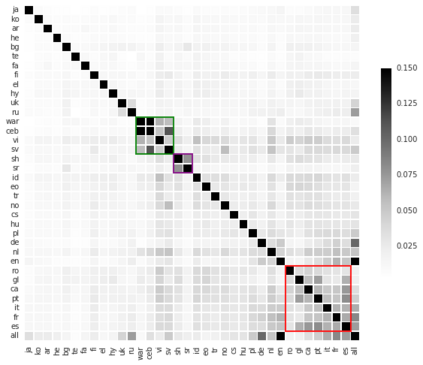

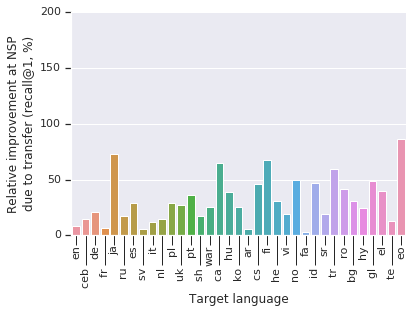

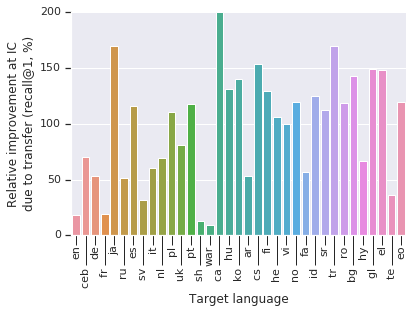

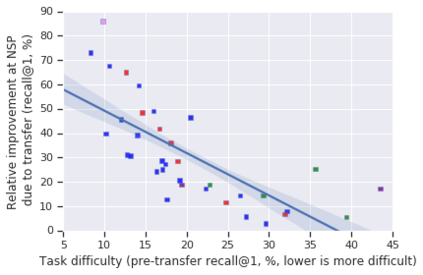

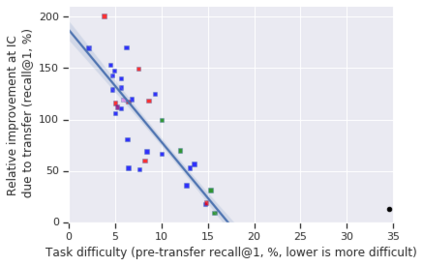

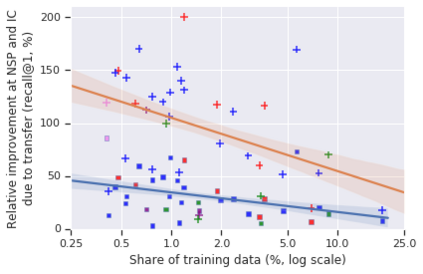

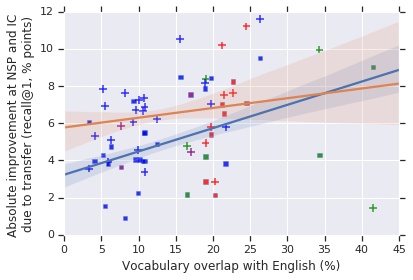

We focus on the problem of search in the multilingual setting. Examining the next-sentence prediction and inverse cloze tasks, we show that at large scale, instance-based transfer learning is surprisingly effective in the multilingual setting, leading to positive transfer on all 35 target languages and both tasks tested. We analyze this improvement and argue that the most natural explanation, namely direct vocabulary overlap between languages, only partially explains the performance gains. In fact, we demonstrate that target-language performance can improve after adding data from an auxiliary language even when that language shares no vocabulary with the target. This surprising result is due to transitive vocabulary overlaps between pairs of auxiliary and target languages.
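The idea of a transitive vocabulary overlap can be illustrated with a small toy sketch. It assumes each language is represented only by a set of subword tokens. Under that assumption, an auxiliary language with zero direct overlap with the target can still be linked to it through an intermediate "bridge" language that overlaps with both. The vocabularies, the Jaccard measure, and the graph construction below are hypothetical choices made for illustration. They are not the paper's data or analysis method.

```python
from collections import deque
from itertools import combinations

# Hypothetical toy subword vocabularies; NOT data from the paper.
VOCABS = {
    "target": {"ka", "ra", "ti"},
    "bridge": {"ti", "mo", "su"},  # shares "ti" with target, "su" with aux
    "aux": {"su", "le", "pa"},     # shares nothing with target
}

def direct_overlap(a: set[str], b: set[str]) -> float:
    """Jaccard overlap between two vocabularies (an assumed measure)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0

def overlap_graph(vocabs: dict[str, set[str]]) -> dict[str, set[str]]:
    """Connect two languages whenever they share at least one token."""
    graph = {lang: set() for lang in vocabs}
    for x, y in combinations(vocabs, 2):
        if vocabs[x] & vocabs[y]:
            graph[x].add(y)
            graph[y].add(x)
    return graph

def transitive_path(graph: dict[str, set[str]], src: str, dst: str):
    """Shortest chain of pairwise-overlapping languages from src to dst (BFS), or None."""
    prev = {src: None}
    queue = deque([src])
    while queue:
        node = queue.popleft()
        if node == dst:
            # Walk predecessor links back to src, then reverse.
            path = []
            while node is not None:
                path.append(node)
                node = prev[node]
            return path[::-1]
        for nxt in sorted(graph[node]):
            if nxt not in prev:
                prev[nxt] = node
                queue.append(nxt)
    return None

graph = overlap_graph(VOCABS)
print(direct_overlap(VOCABS["aux"], VOCABS["target"]))  # 0.0
print(transitive_path(graph, "aux", "target"))          # ['aux', 'bridge', 'target']
```

In this toy setup, the auxiliary language has no direct overlap with the target, yet it is connected to it through the bridge language. This is the kind of indirect link the abstract describes.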

翻译:我们集中研究多语种环境中的搜索问题。我们研究了下一轮判决预测和反向阻塞的问题,我们发现,在多语种环境中,基于实例的传输学习在大规模上非常有效,导致所有35种目标语言的积极传输和两项任务得到测试。我们分析了这一改进,认为最自然的解释,即语言之间的直接词汇重叠,只能部分地解释绩效的提高:事实上,在添加辅助语言的数据(即使没有与目标语言相同的词汇)之后,我们展示了目标语言的改进。这一令人惊讶的结果是由于辅助语言与目标语言之间的过渡词汇重叠。