LibRec Picks: A CNN-RNN Hybrid Model Based on Parameter Sharing

Your luck lies hidden in your ability and in the effort no one sees; the harder you work, the luckier you get.

MOOC: *Quantum Machine Learning*. Link: https://www.edx.org/course/quantum-machine-learning

Derivatives of matrices and vectors (with detailed derivations and worked computations). Link 1: https://github.com/LynnHo/Matrix-Calculus, Link 2: http://artem.sobolev.name/posts/2017-01-29-matrix-and-vector-calculus-via-differentials.html
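As a small worked example of the kind of identity these notes derive: for a square, not necessarily symmetric matrix A, the differential of f(x) = xᵀAx is df = dxᵀAx + xᵀA dx = xᵀ(A + Aᵀ)dx, so the gradient is ∇f = (A + Aᵀ)x. The sketch below is our own illustration, not taken from either link; it checks this closed form against central finite differences, and the helper names are hypothetical.

```python
import numpy as np


def quad_form(x, A):
    """f(x) = x^T A x for a square matrix A."""
    return x @ A @ x


def analytic_grad(x, A):
    """Closed-form gradient from the differential: (A + A^T) x."""
    return (A + A.T) @ x


def numeric_grad(f, x, eps=1e-6):
    """Central finite differences, one coordinate at a time."""
    g = np.zeros_like(x)
    for i in range(x.size):
        e = np.zeros_like(x)
        e[i] = eps
        g[i] = (f(x + e) - f(x - e)) / (2 * eps)
    return g


rng = np.random.default_rng(0)
A = rng.standard_normal((4, 4))  # deliberately non-symmetric, so A != A^T
x = rng.standard_normal(4)

g_analytic = analytic_grad(x, A)
g_numeric = numeric_grad(lambda v: quad_form(v, A), x)
print(np.allclose(g_analytic, g_numeric, atol=1e-5))  # True
```

Using a non-symmetric A matters here: the common shortcut 2Ax is only correct when A is symmetric.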

[Video tutorial] Large-scale parallel collaborative filtering for the Netflix Prize. Paper: https://endymecy.gitbooks.io/spark-ml-source-analysis/content/%E6%8E%A8%E8%8D%90/papers/Large-scale%20Parallel%20Collaborative%20Filtering%20the%20Netflix%20Prize.pdf

Video 1: https://www.youtube.com/watch?v=lRBZzSWUkUI

Video 2: https://www.youtube.com/watch?v=6fB0McQFSHQ
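The linked paper describes ALS-WR (alternating least squares with weighted-λ-regularization). As we understand it, the objective over the observed ratings r_ij is Σ(r_ij − u_iᵀm_j)² + λ(Σ_i n_i‖u_i‖² + Σ_j n_j‖m_j‖²), where n_i and n_j count the ratings of user i and item j. Fixing one factor matrix turns every row update of the other into a small ridge regression. Below is a single-machine NumPy sketch of that alternation only: it leaves out the paper's parallelization, uses a plain random initialization (an assumption), and the function name `als_wr` and its defaults are hypothetical.

```python
import numpy as np


def als_wr(R, mask, rank=3, lam=0.05, n_iters=50, seed=0):
    """Single-machine ALS-WR sketch (hypothetical helper, not the paper's code).

    R:    (n_users, n_items) ratings; entries where mask is False are ignored.
    mask: boolean array marking observed ratings.
    Returns U (n_users, rank) and M (n_items, rank) with R ~ U @ M.T on observed entries.
    """
    rng = np.random.default_rng(seed)
    n_users, n_items = R.shape
    U = 0.1 * rng.standard_normal((n_users, rank))  # simple random init (assumption)
    M = 0.1 * rng.standard_normal((n_items, rank))
    eye = np.eye(rank)
    for _ in range(n_iters):
        # Fix M: one ridge regression per user over the items that user rated.
        for i in range(n_users):
            rated = mask[i]
            n_i = rated.sum()
            if n_i == 0:
                continue
            Mi = M[rated]
            # Weighted-lambda: the penalty grows with the user's rating count n_i.
            U[i] = np.linalg.solve(Mi.T @ Mi + lam * n_i * eye, Mi.T @ R[i, rated])
        # Fix U: one ridge regression per item over the users who rated it.
        for j in range(n_items):
            raters = mask[:, j]
            n_j = raters.sum()
            if n_j == 0:
                continue
            Uj = U[raters]
            M[j] = np.linalg.solve(Uj.T @ Uj + lam * n_j * eye, Uj.T @ R[raters, j])
    return U, M


# Toy data: an exactly rank-3 matrix with roughly 60% of entries observed.
rng = np.random.default_rng(1)
R = rng.standard_normal((30, 3)) @ rng.standard_normal((3, 20))
mask = rng.random(R.shape) < 0.6

U, M = als_wr(R, mask)
train_rmse = np.sqrt(np.mean((U @ M.T - R)[mask] ** 2))
print(f"training RMSE on observed entries: {train_rmse:.3f}")
```

Each per-user and per-item solve is independent of the others within a half-step, which is what makes the method straightforward to distribute across machines.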

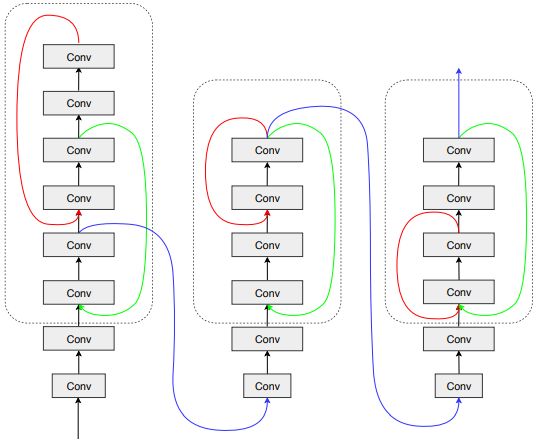

1. Learning Implicitly Recurrent CNNs Through Parameter Sharing (ICLR'19)

Paper: https://arxiv.org/abs/1902.09701

Code: https://github.com/lolemacs/soft-sharing

We introduce a parameter sharing scheme, in which different layers of a convolutional neural network (CNN) are defined by a learned linear combination of parameter tensors from a global bank of templates. Restricting the number of templates yields a flexible hybridization of traditional CNNs and recurrent networks. Compared to traditional CNNs, we demonstrate substantial parameter savings on standard image classification tasks, while maintaining accuracy. Our simple parameter sharing scheme, though defined via soft weights, in practice often yields trained networks with near strict recurrent structure; with negligible side effects, they convert into networks with actual loops. Training these networks thus implicitly involves discovery of suitable recurrent architectures. Though considering only the design aspect of recurrent links, our trained networks achieve accuracy competitive with those built using state-of-the-art neural architecture search (NAS) procedures. Our hybridization of recurrent and convolutional networks may also represent a beneficial architectural bias. Specifically, on synthetic tasks which are algorithmic in nature, our hybrid networks both train faster and extrapolate better to test examples outside the span of the training set.
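The core mechanism in this abstract, where each layer's weights are a learned linear combination of tensors from a shared template bank, can be sketched in a few lines of NumPy. This is our illustration, not code from the linked repository: the sizes are arbitrary, the coefficients are hand-picked stand-ins for trained values, and the helper names and the 0.99 threshold are hypothetical. It also computes the absolute cosine similarity between the layers' coefficient vectors, one way to spot the near-recurrent structure the abstract describes.

```python
import numpy as np


def layer_weights(alphas, templates):
    """Soft sharing: W_l = sum_j alphas[l, j] * templates[j] for every layer l."""
    # (L, T) contracted with (T, c_out, c_in, k, k) -> (L, c_out, c_in, k, k)
    return np.tensordot(alphas, templates, axes=1)


def layer_similarity(alphas):
    """Absolute cosine similarity between the coefficient vectors of each pair of layers."""
    unit = alphas / np.linalg.norm(alphas, axis=1, keepdims=True)
    return np.abs(unit @ unit.T)


rng = np.random.default_rng(0)
n_layers, n_templates, c, k = 6, 2, 16, 3
templates = rng.standard_normal((n_templates, c, c, k, k)) * 0.1  # global bank

# Hand-picked stand-ins for trained coefficients: layers 1-4 lean almost
# entirely on template 1, so they act like one layer applied repeatedly.
alphas = np.array([
    [1.00, 0.00],
    [0.00, 1.00],
    [0.01, 1.00],
    [0.00, 0.99],
    [0.02, 1.01],
    [0.70, 0.70],
])

W = layer_weights(alphas, templates)
print(W.shape)  # (6, 16, 16, 3, 3)

plain_params = n_layers * c * c * k * k      # one independent tensor per layer
shared_params = templates.size + alphas.size  # template bank + per-layer coefficients
print(plain_params, shared_params)  # 13824 4620

S = layer_similarity(alphas)
# Adjacent layers with nearly parallel coefficients are candidates for folding into a loop.
loop_candidates = [(l, l + 1) for l in range(n_layers - 1) if S[l, l + 1] > 0.99]
print(loop_candidates)  # [(1, 2), (2, 3), (3, 4)]
```

With 2 templates shared by 6 layers, this group stores 4,620 numbers instead of 13,824. Because layers 1 to 4 have nearly identical coefficients, they use almost the same weights and could plausibly be replaced by a single layer run in a loop; how the paper actually performs that conversion is not shown here.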

2. SC-FEGAN: Face Editing Generative Adversarial Network with User's Sketch and Color

arXiv: https://arxiv.org/abs/1902.06838

Code: https://github.com/JoYoungjoo/SC-FEGAN

We present a novel image editing system that generates images as the user provides a free-form mask, sketch, and color as input. Our system consists of an end-to-end trainable convolutional network. Contrary to existing methods, our system fully utilizes free-form user input with color and shape. This allows the system to respond to the user's sketch and color input, using it as a guideline to generate an image. In particular, we trained the network with an additional style loss, which made it possible to generate realistic results even when large portions of the image had been removed. Our proposed network architecture, SC-FEGAN, is well suited to generating high-quality synthetic images from intuitive user inputs.
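The abstract credits an additional style loss for the realistic fills. A common form of style loss, following Gatys et al., compares Gram matrices of feature maps. The sketch below assumes that form and a simple C·H·W normalization; it does not claim to match SC-FEGAN's exact definition. In practice the feature maps would come from a pretrained network such as VGG; here random arrays stand in, and the helper names are hypothetical.

```python
import numpy as np


def gram_matrix(features):
    """Channel-by-channel Gram matrix of a (C, H, W) feature map, divided by C*H*W."""
    c, h, w = features.shape
    flat = features.reshape(c, h * w)
    return flat @ flat.T / (c * h * w)


def style_loss(feats_generated, feats_target):
    """Sum over layers of the squared Frobenius distance between Gram matrices."""
    return sum(
        np.sum((gram_matrix(g) - gram_matrix(t)) ** 2)
        for g, t in zip(feats_generated, feats_target)
    )


# Random stand-ins for feature maps taken from two layers of a feature extractor.
rng = np.random.default_rng(0)
target = [rng.standard_normal((8, 16, 16)), rng.standard_normal((16, 8, 8))]
generated = [t + 0.5 * rng.standard_normal(t.shape) for t in target]

print(style_loss(target, target))     # 0.0: identical features give identical Gram matrices
print(style_loss(generated, target))  # positive: perturbed features change the statistics
```

Because a Gram matrix discards spatial layout and keeps only channel co-activation statistics, this kind of loss rewards matching texture and style rather than exact pixel positions, which is why it is usually combined with other loss terms.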