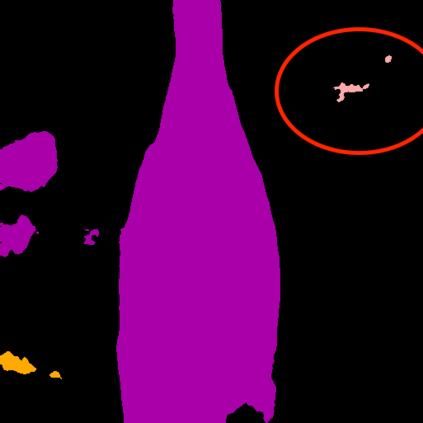

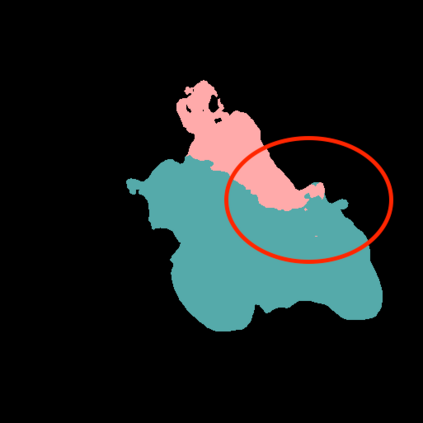

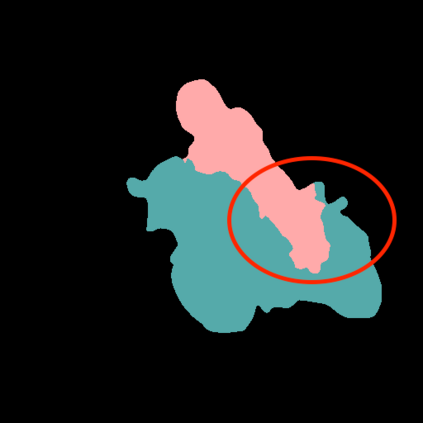

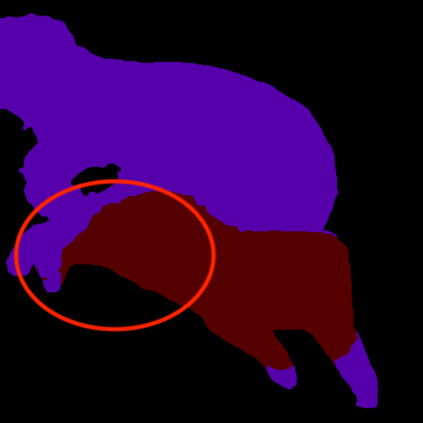

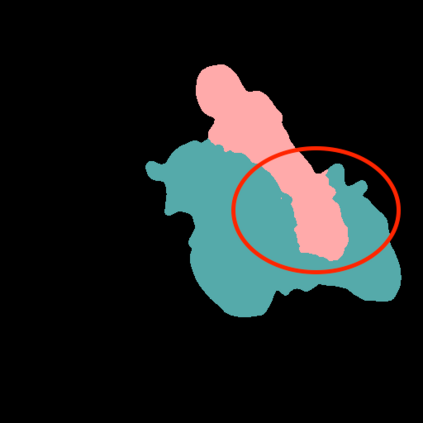

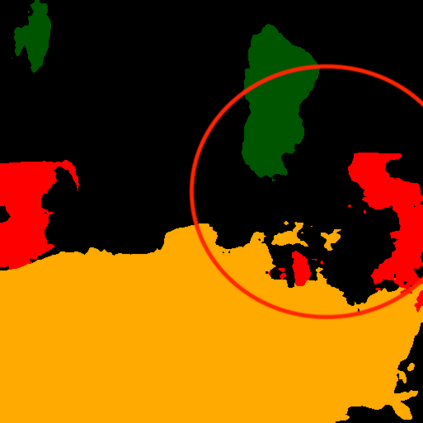

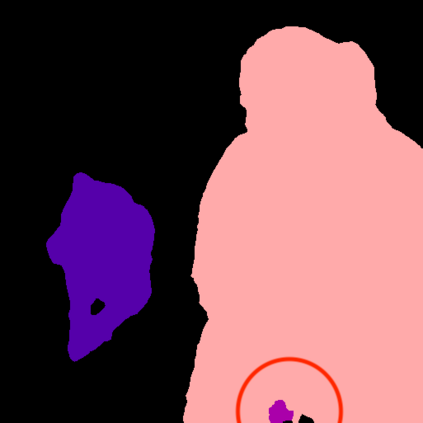

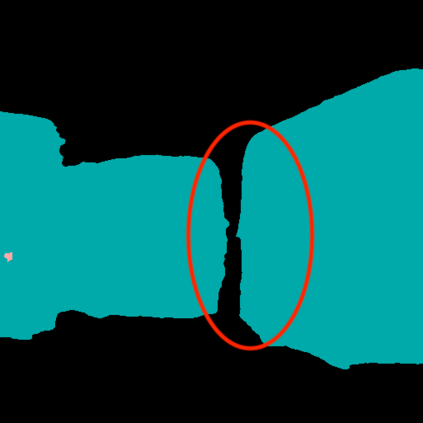

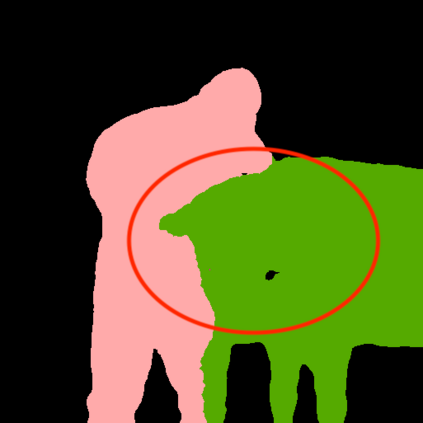

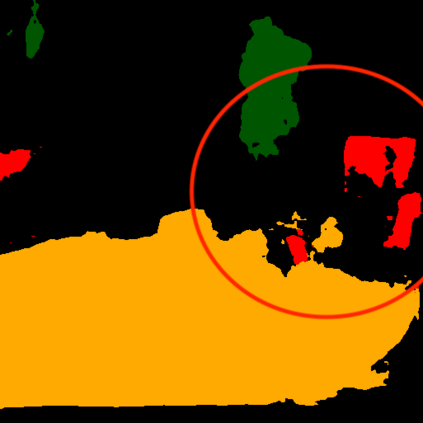

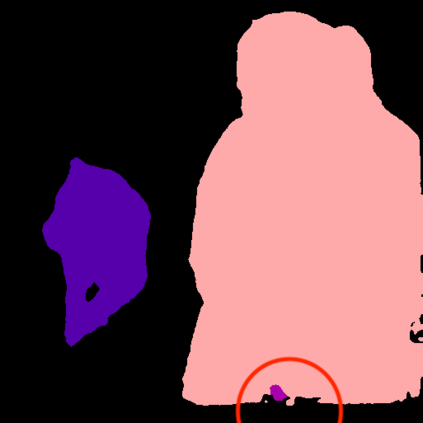

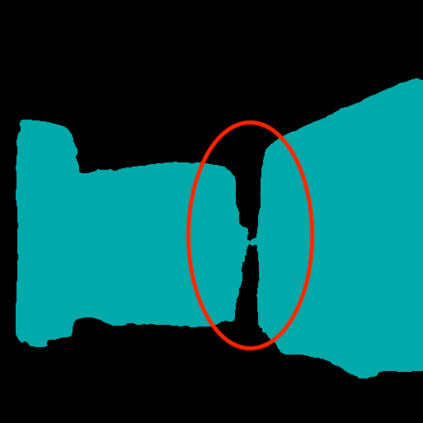

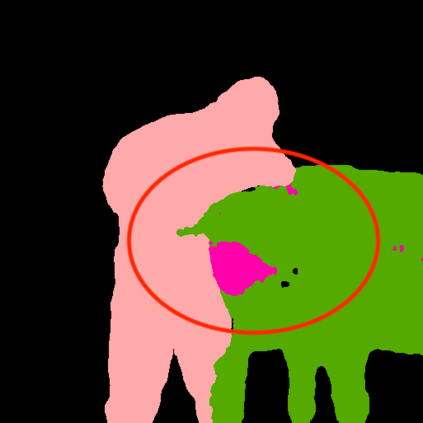

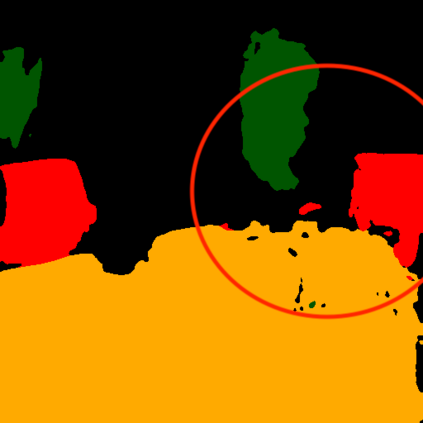

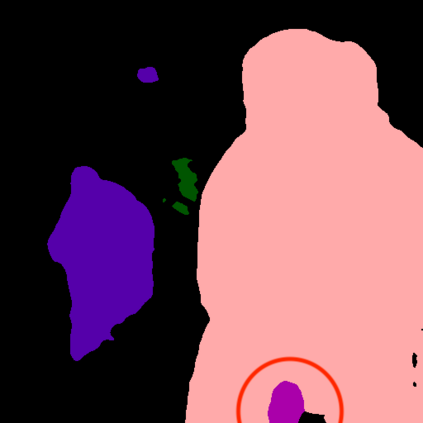

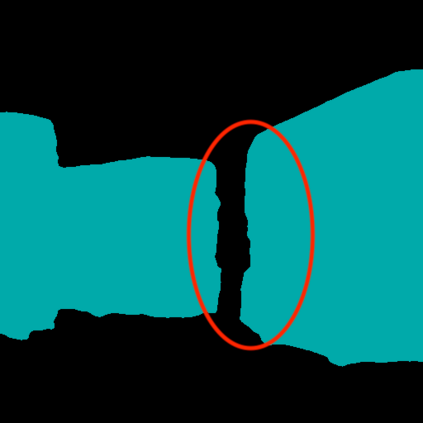

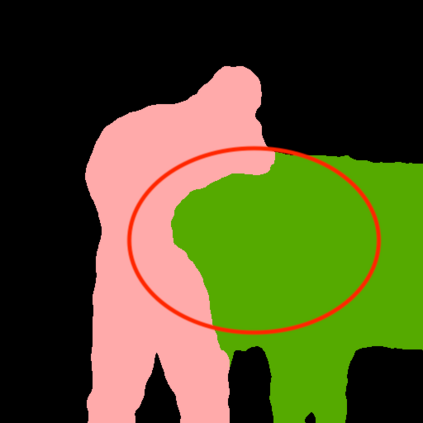

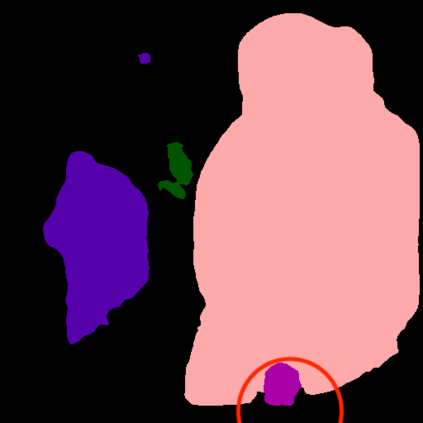

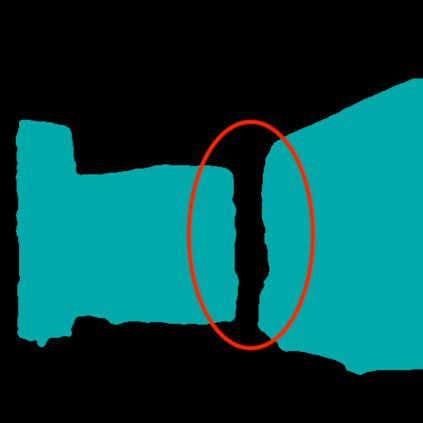

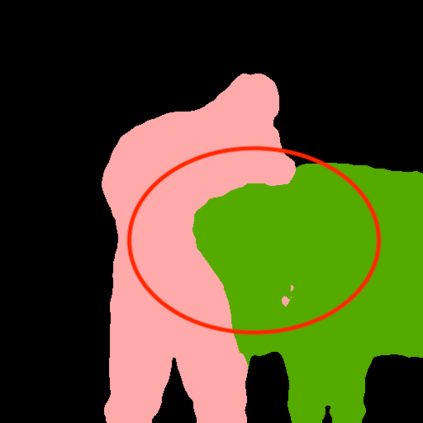

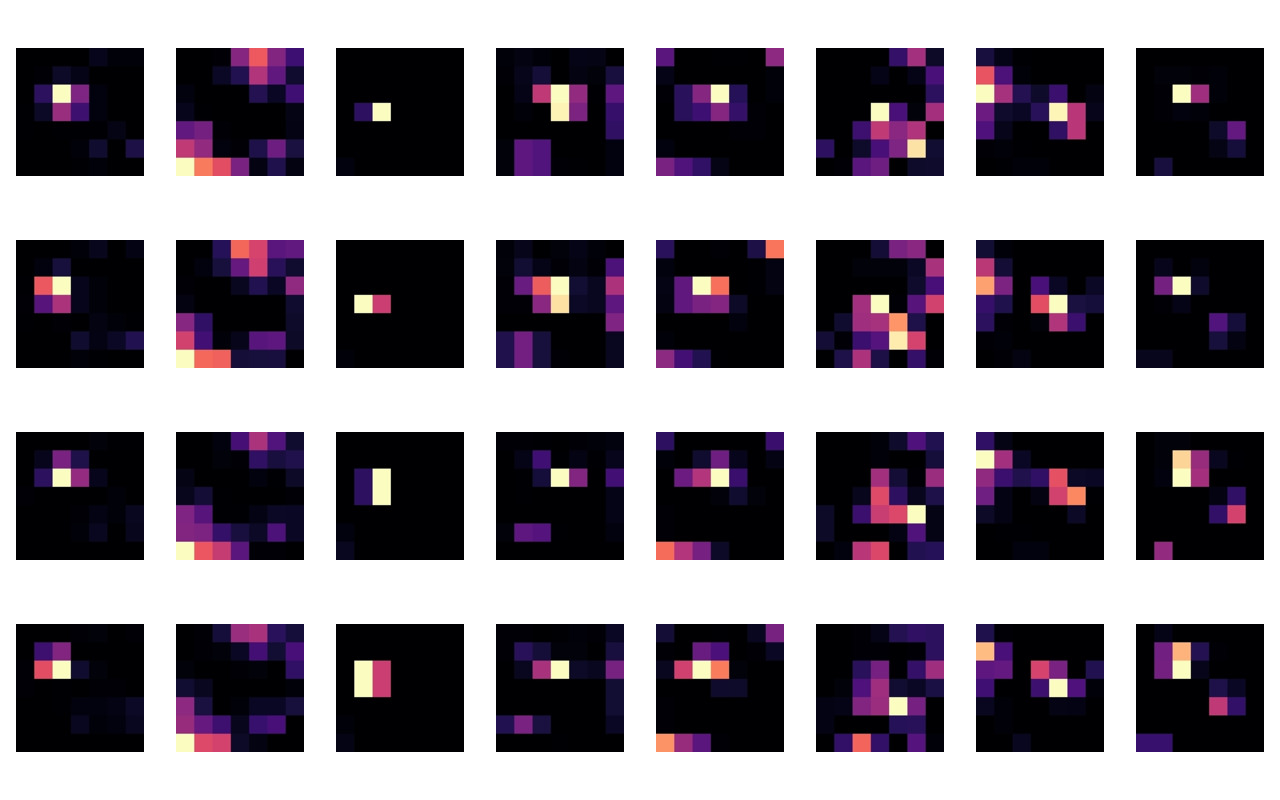

We propose learnable polyphase sampling (LPS), a pair of learnable down/upsampling layers that enable truly shift-invariant and shift-equivariant convolutional networks. LPS can be trained end-to-end from data and generalizes existing handcrafted downsampling layers. It is widely applicable, as it can be integrated into any convolutional network by replacing its down/upsampling layers. We evaluate LPS on image classification and semantic segmentation. Experiments show that LPS is on par with or outperforms existing methods in both performance and shift consistency. For the first time, we achieve true shift-equivariance on semantic segmentation (PASCAL VOC), i.e., 100% shift consistency, outperforming baselines by 3.3 percentage points.
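The abstract does not spell out how the layers operate. The following is a minimal NumPy sketch of the polyphase idea, not the authors' implementation. It assumes circular shifts, a stride of 2, and a hypothetical scoring function (a 1x1 channel projection, tanh, and global average pooling) standing in for the learned network; `polyphase_components`, `score`, `lpd`, and `lpu` are illustrative names. The sketch uses a hard argmax, whereas end-to-end training would require a differentiable relaxation of the selection step, which is not shown. It illustrates why choosing a polyphase component by a shift-invariant score makes pooled outputs shift-invariant and the down/up pair exactly shift-equivariant.

```python
import numpy as np


def polyphase_components(x, stride=2):
    """Split a (C, H, W) feature map into stride**2 polyphase components.

    Component (i, j) holds x[:, i::stride, j::stride]. Assumes H and W are
    divisible by `stride`, so circular shifts map components onto each other.
    """
    _, h, w = x.shape
    if h % stride or w % stride:
        raise ValueError("H and W must be divisible by stride")
    return [((i, j), x[:, i::stride, j::stride])
            for i in range(stride) for j in range(stride)]


def score(component, weights):
    """Hypothetical stand-in for a learned scoring network.

    1x1 projection over channels, tanh, then global average pooling. The
    pooling makes the score invariant to circular shifts of the component.
    """
    response = np.tensordot(weights, component, axes=(0, 0))  # shape (H', W')
    return float(np.tanh(response).mean())


def lpd(x, weights, stride=2):
    """Polyphase downsampling: keep the component with the highest score.

    Hard argmax selection only; end-to-end training would need a
    differentiable relaxation, which this sketch omits.
    """
    comps = polyphase_components(x, stride)
    best = int(np.argmax([score(c, weights) for _, c in comps]))
    phase, y = comps[best]
    return y, phase


def lpu(y, phase, stride=2):
    """Polyphase upsampling: write y back at the phase chosen by lpd, zeros elsewhere."""
    c, h, w = y.shape
    out = np.zeros((c, h * stride, w * stride), dtype=y.dtype)
    i, j = phase
    out[:, i::stride, j::stride] = y
    return out


# Usage: compare an input with a circularly shifted copy of itself.
rng = np.random.default_rng(0)
x = rng.standard_normal((3, 8, 8))
weights = rng.standard_normal(3)  # hypothetical "learned" parameters
shift = (1, 3)
x_shifted = np.roll(x, shift, axis=(1, 2))

y, phase = lpd(x, weights)
y_s, phase_s = lpd(x_shifted, weights)

# Shift invariance after global average pooling.
print(np.allclose(y.mean(axis=(1, 2)), y_s.mean(axis=(1, 2))))  # True
# Exact shift equivariance of the down/up pair.
print(np.array_equal(lpu(y_s, phase_s),
                     np.roll(lpu(y, phase), shift, axis=(1, 2))))  # True
```

The design choice that matters is that the score is invariant to circular shifts: a shift of the input permutes the polyphase components (and circularly shifts them on the coarse grid), so the same underlying component wins the argmax. A fixed choice such as always keeping phase (0, 0), as plain strided subsampling does, lacks this property.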

翻译:我们提出可学习的多相取样(LPS),这是一对可学习的下游/上游层,能够真正使变异和等同变相网络得以建立。LPS可以从数据中培训端到端,并概括现有手工制作的下游取样层。它可以广泛应用,因为它可以通过替换下游/上游层而融入任何变异网络。我们评估关于图像分类和语义分解的LPS。实验显示,LPS在性能和转移一致性方面与现有方法是平行的或优于现有方法的。我们第一次在语义分解(PASCAL VOC)上实现了真正的变异(PASCAL VOC),即100%的变异一致性,超过绝对3.3%的基线。