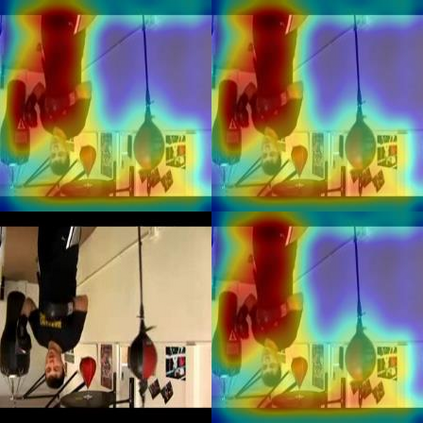

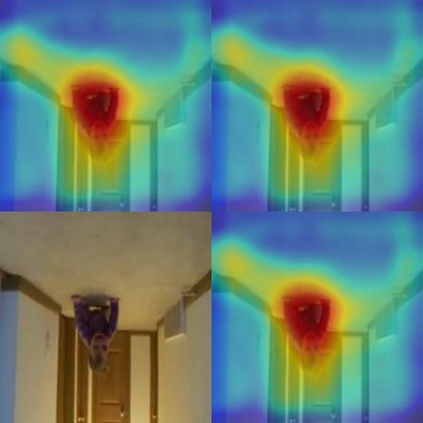

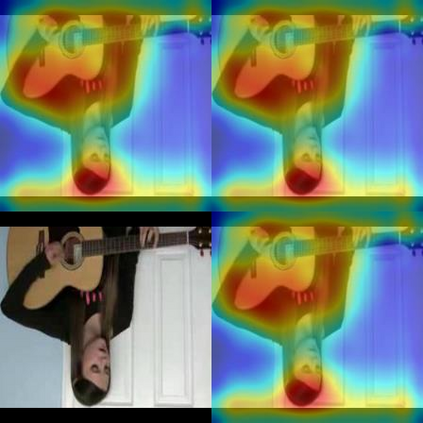

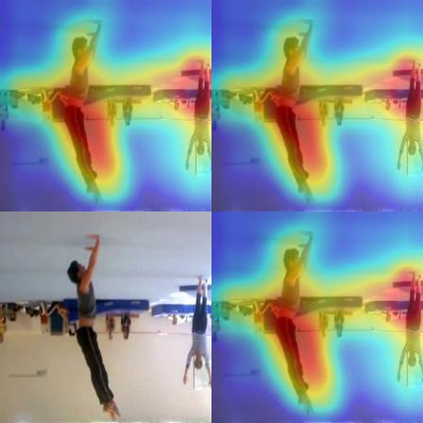

In an attempt to fully discover the temporal diversity available for self-supervised video representation learning, this work takes advantage of the temporal dependencies of videos and proposes a novel self-supervised method named Temporal Contrastive Graph Learning (TCGL). Unlike existing methods, which model temporal dependency at a single scale, TCGL is rooted in a hybrid graph contrastive learning strategy that treats both inter-snippet and intra-snippet temporal dependencies as self-supervision signals for temporal representation learning. To learn these multi-scale temporal dependencies, TCGL integrates prior knowledge about frame and snippet orders into graph structures, namely the intra-snippet and inter-snippet temporal contrastive graph modules. By randomly removing edges and masking node features of the intra-snippet or inter-snippet graphs, TCGL generates different correlated graph views. Dedicated contrastive modules are then designed to maximize the agreement between node embeddings across these views. To learn global context representations and adaptively recalibrate channel-wise features, we introduce an adaptive video snippet order prediction module, which leverages the relational knowledge among video snippets to predict their actual order. Experimental results demonstrate the superiority of TCGL over state-of-the-art methods on large-scale action recognition and video retrieval benchmarks.
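The abstract names the ingredients (edge removal, node-feature masking, cross-view agreement between node embeddings, snippet order prediction) without specifying them, so the following is only a minimal pure-Python sketch under stated assumptions, not the authors' implementation. It assumes a chain graph linking temporally adjacent frames, a one-step mean-aggregation propagation as a toy stand-in for the learned graph encoder, a GRACE-style node-level InfoNCE loss as the agreement objective, and order prediction framed as classification over all k! snippet permutations. All function names (`drop_edges`, `mask_features`, `propagate`, `node_infonce`, `order_label`) are hypothetical.

```python
import itertools
import math
import random


def drop_edges(edges, p, rng):
    """Edge removal: keep each edge independently with probability 1 - p."""
    return [e for e in edges if rng.random() >= p]


def mask_features(x, p, rng):
    """Feature masking: zero each feature dimension with probability p (same mask for every node)."""
    keep = [rng.random() >= p for _ in range(len(x[0]))]
    return [[v if k else 0.0 for v, k in zip(row, keep)] for row in x]


def propagate(x, edges):
    """Mean over each node's neighbours plus itself; a toy stand-in for a learned GNN encoder."""
    nbrs = [[i] for i in range(len(x))]
    for i, j in edges:
        nbrs[i].append(j)
        nbrs[j].append(i)
    dim = len(x[0])
    return [[sum(x[k][d] for k in nb) / len(nb) for d in range(dim)] for nb in nbrs]


def cosine(a, b):
    """Cosine similarity, guarded so an all-zero (fully masked) vector gives 0."""
    na = max(math.sqrt(sum(v * v for v in a)), 1e-12)
    nb = max(math.sqrt(sum(v * v for v in b)), 1e-12)
    return sum(u * v for u, v in zip(a, b)) / (na * nb)


def node_infonce(z1, z2, tau=0.5):
    """Assumed cross-view InfoNCE: node i in view 2 is the positive for node i in view 1,
    all other nodes in view 2 act as negatives. Returns the mean loss over nodes."""
    total = 0.0
    for i, zi in enumerate(z1):
        logits = [cosine(zi, zj) / tau for zj in z2]
        log_denom = math.log(sum(math.exp(l) for l in logits))
        total += log_denom - logits[i]  # equals -log softmax(logits)[i]
    return total / len(z1)


def order_label(order):
    """Assumed order-prediction target: index of a snippet permutation among all k! permutations."""
    return list(itertools.permutations(range(len(order)))).index(tuple(order))


# Usage: a hypothetical intra-snippet graph whose 4 nodes are frames, chained in temporal order.
rng = random.Random(0)
frames = [[1.0, 0.2, 0.0], [0.8, 0.5, 0.1], [0.1, 0.9, 0.3], [0.0, 0.4, 1.0]]
chain = [(t, t + 1) for t in range(len(frames) - 1)]
view1 = propagate(mask_features(frames, 0.2, rng), drop_edges(chain, 0.2, rng))
view2 = propagate(mask_features(frames, 0.2, rng), drop_edges(chain, 0.2, rng))
print(node_infonce(view1, view2))
print(order_label([0, 2, 1]))  # 1
```

The same two augmentations and loss would apply unchanged to an inter-snippet graph, where nodes are snippet features instead of frame features; only the node set differs in this sketch.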
