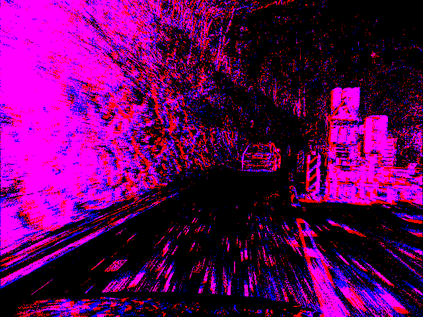

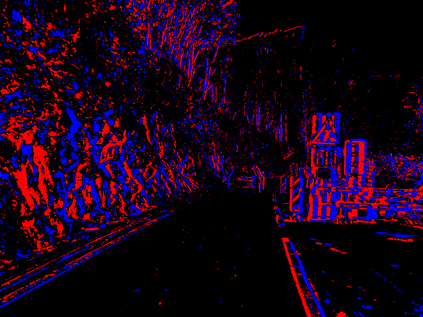

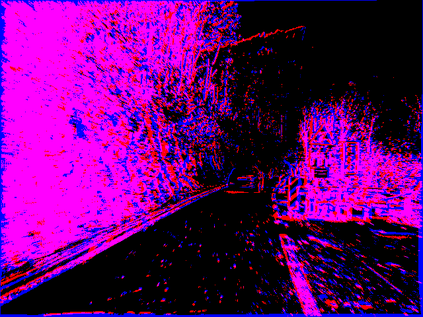

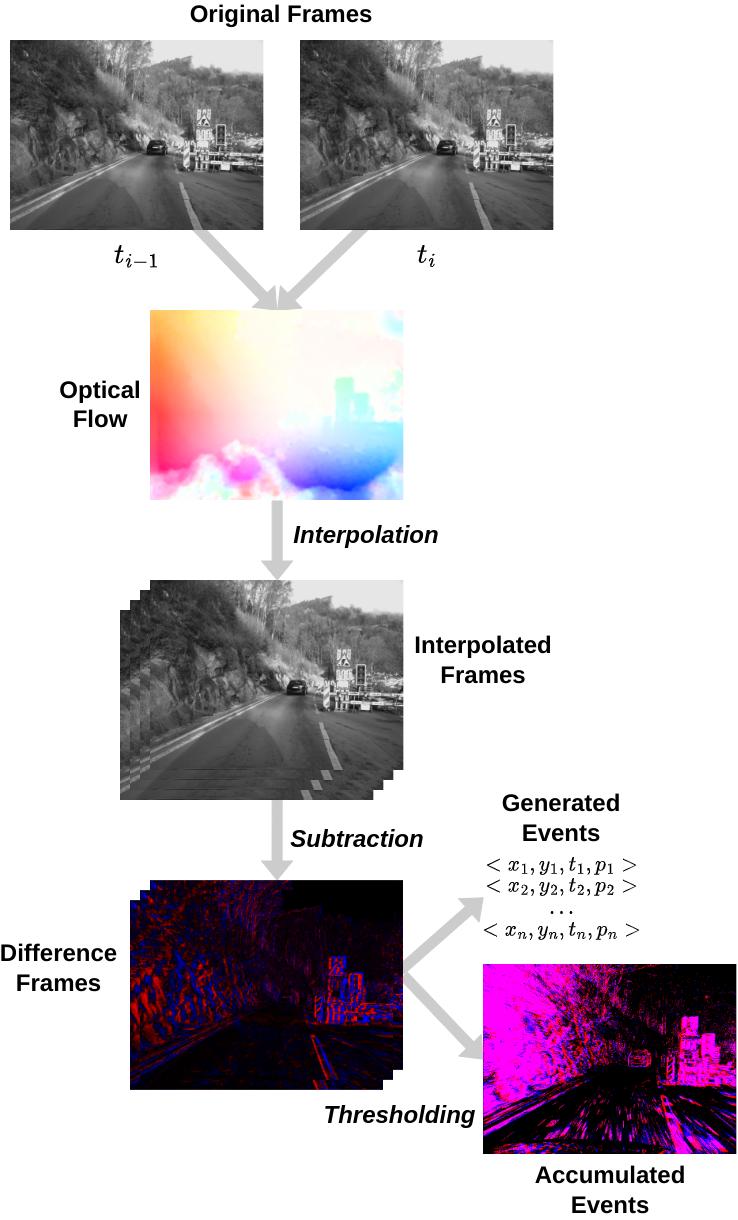

Event cameras are becoming increasingly popular in robotics and computer vision due to their beneficial properties, e.g., high temporal resolution, high bandwidth, almost no motion blur, and low power consumption. However, these cameras remain expensive and scarce on the market, which makes them inaccessible to most potential users. Event simulators reduce the need for real event cameras when developing novel algorithms. However, because simulation is computationally expensive, existing simulators cannot generate event streams in real time. Instead, the events must be precomputed from existing video sequences, or frames must be pre-rendered from a virtual 3D scene and then converted into events. Although such offline-generated event streams can serve as training data for learning tasks, applications that depend on response time cannot yet benefit from these simulators and still require an actual event camera. This work proposes simulation methods that speed up event simulation by two orders of magnitude, making it real-time capable, while remaining competitive in quality assessments.
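For readers new to event simulation, the following sketch illustrates the widely used contrast-threshold model that frame-based simulators build on: a pixel emits an event whenever its log intensity has changed by at least a contrast threshold since its last event, with positive or negative polarity depending on the direction of the change. This is a minimal illustration under stated assumptions, not the method proposed in this work. The function name `frames_to_events`, the threshold value, and the `eps` offset are hypothetical choices, and every event is stamped with the time of the frame in which it was detected rather than interpolated between frames.

```python
import numpy as np


def frames_to_events(frames, timestamps, threshold=0.2, eps=1e-3):
    """Convert grayscale frames into events with a contrast-threshold model.

    Illustrative sketch only (hypothetical helper, not the paper's method).
    frames: array-like of shape (T, H, W) with non-negative intensities.
    timestamps: sequence of T frame times in seconds.
    Returns a list of (t, x, y, polarity) tuples, polarity in {+1, -1}.
    """
    frames = np.asarray(frames, dtype=np.float64)
    # Work in log intensity; eps avoids log(0) for black pixels.
    log_frames = np.log(frames + eps)
    # Per-pixel reference log intensity at the most recent emitted event.
    ref = log_frames[0].copy()
    events = []
    for k in range(1, len(frames)):
        diff = log_frames[k] - ref
        # Number of whole thresholds crossed at each pixel since its last event.
        n = np.floor(np.abs(diff) / threshold).astype(int)
        ys, xs = np.nonzero(n)
        for y, x in zip(ys, xs):
            pol = 1 if diff[y, x] > 0 else -1
            # Assumption: all events of a pixel share the frame timestamp.
            for _ in range(n[y, x]):
                events.append((float(timestamps[k]), int(x), int(y), pol))
        # Advance the reference only by the crossed thresholds, keeping the residual.
        ref += np.sign(diff) * n * threshold
    return events
```

For example, a pixel whose log intensity rises by 0.45 with a threshold of 0.2 emits two positive events, and the remaining 0.05 is carried over to the next frame. The per-pixel Python loop is kept for readability; it is not meant to reflect how a real-time simulator would be implemented.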