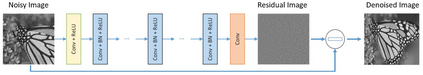

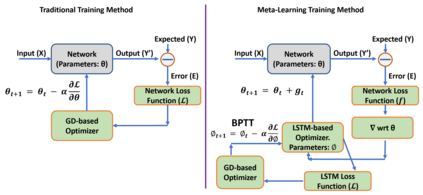

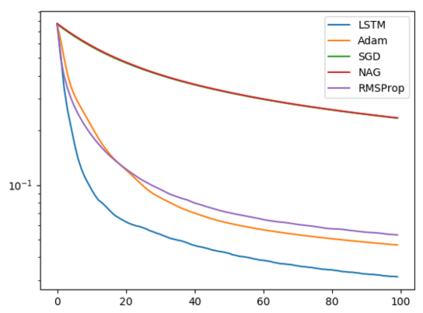

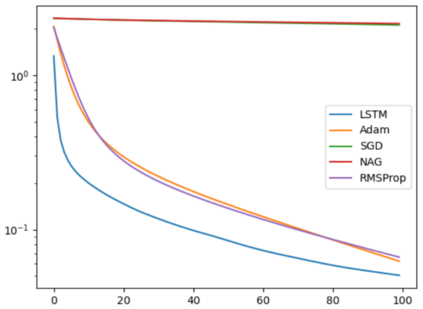

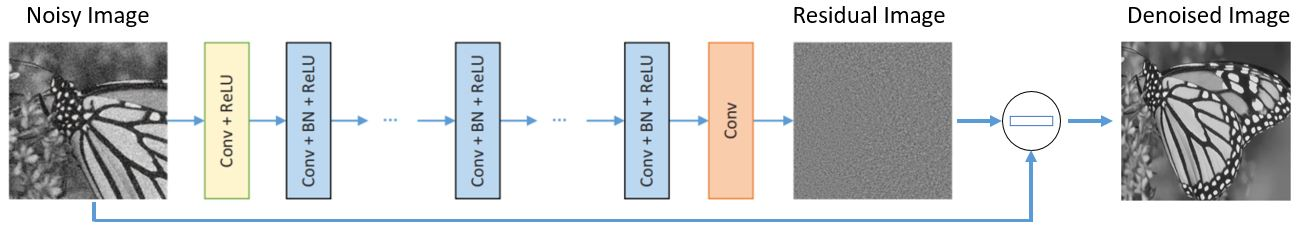

Deep learning (DL) methods have recently surpassed classical techniques on a wide range of tasks, and DL has accordingly been applied to image denoising. In particular, deep feed-forward denoising convolutional neural networks (DnCNNs) have been investigated for this purpose. DnCNN combines a deep architecture, residual learning, and batch normalization to achieve better denoising performance than classical state-of-the-art denoising algorithms. However, its deep architecture results in a very large set of trainable parameters. Meta-optimization is a training approach in which an algorithm learns how to train itself. Algorithms trained with meta-optimizers have been shown to outperform those trained with classical gradient descent-based approaches. In this work, we investigate applying meta-optimization to the training of DnCNN in order to enhance its denoising capability. Our preliminary experiments on simpler algorithms indicate that meta-optimization is a promising route to improving DnCNN's denoising performance.
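To make the idea of meta-optimization concrete, the following is a minimal sketch and not the method used in this work, whose meta-optimizer is not specified here. It assumes the simplest possible "learned optimizer": a single learnable step size for an inner gradient-descent loop on a toy quadratic, with the meta-gradient estimated by finite differences. The names `inner_train` and `meta_train`, the toy objective, and all constants are illustrative assumptions.

```python
import math


def inner_train(log_lr, steps=20, w0=5.0):
    """Inner loop: run gradient descent on f(w) = 0.5 * w**2.

    Returns the loss reached after `steps` updates, which serves as the
    meta-loss for the step size exp(log_lr).
    """
    lr = math.exp(log_lr)  # parameterize in log space so the step size stays positive
    w = w0
    for _ in range(steps):
        grad = w  # derivative of 0.5 * w**2 with respect to w
        w = w - lr * grad
    return 0.5 * w * w


def meta_train(log_lr=math.log(0.01), meta_steps=200, meta_lr=0.1, eps=1e-4):
    """Outer loop: adjust the step size to reduce the loss after inner training.

    The meta-gradient is approximated with a central finite difference
    (an assumption made for brevity; practical meta-optimizers usually
    differentiate through the inner loop instead).
    """
    for _ in range(meta_steps):
        meta_grad = (inner_train(log_lr + eps) - inner_train(log_lr - eps)) / (2 * eps)
        log_lr -= meta_lr * meta_grad
    return log_lr


if __name__ == "__main__":
    initial = math.log(0.01)
    learned = meta_train(initial)
    print("initial step size:", math.exp(initial), "final loss:", inner_train(initial))
    print("learned step size:", math.exp(learned), "final loss:", inner_train(learned))
```

In this toy setting the outer loop increases the step size until the inner loop converges within its fixed budget of updates. Applying the same principle to DnCNN would mean replacing the quadratic with the network's denoising loss and the single step size with a richer, learned update rule.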
