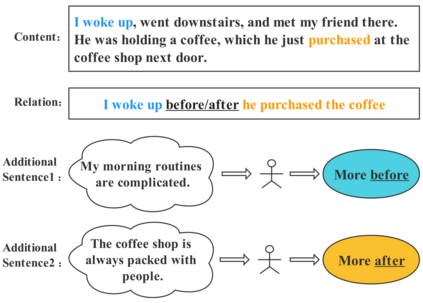

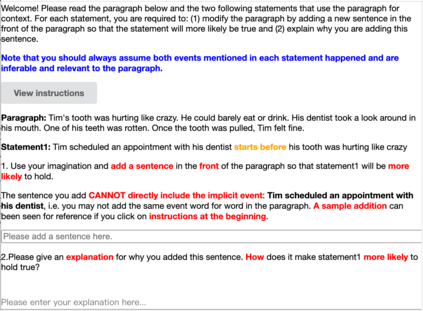

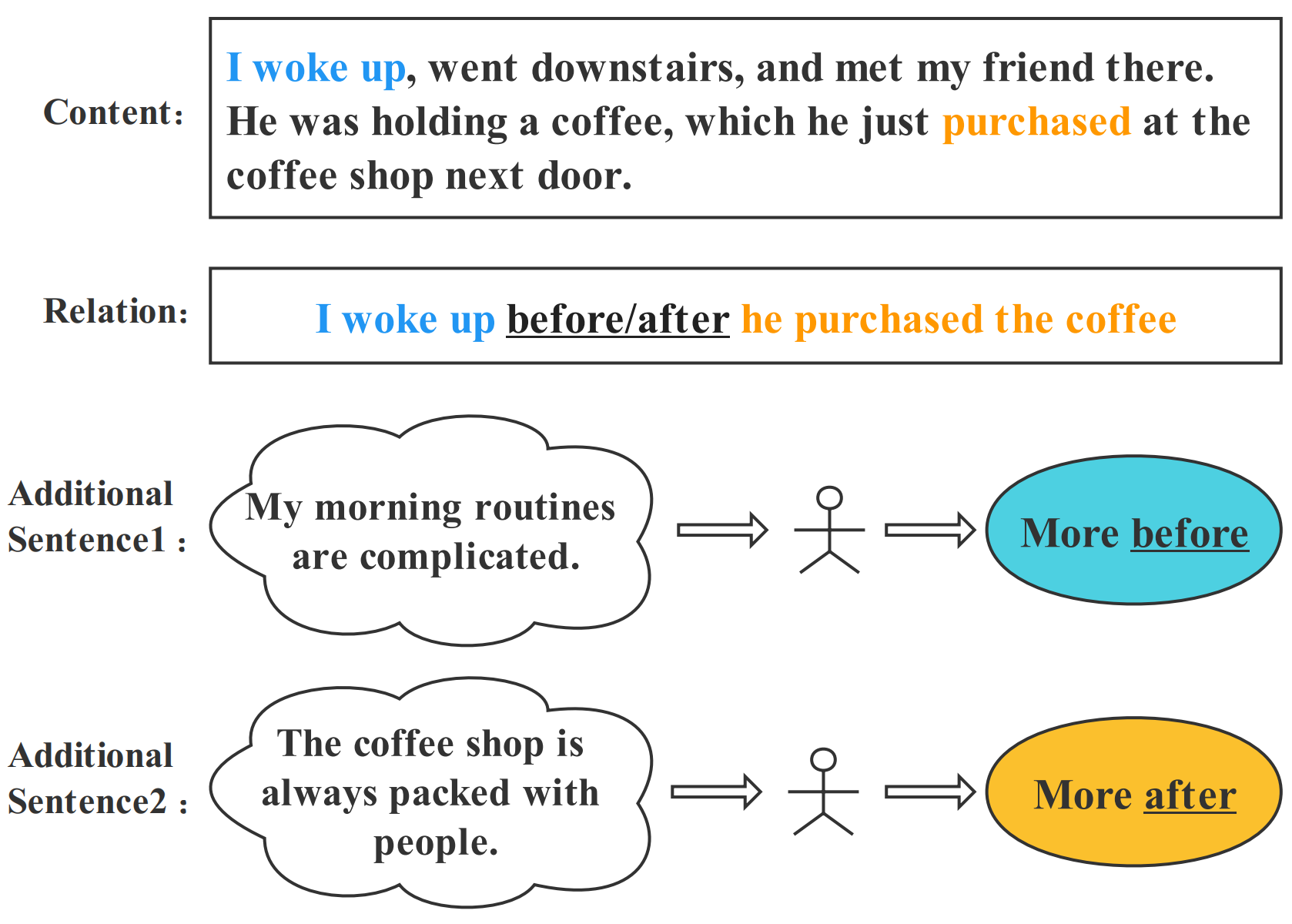

Temporal reasoning is the task of predicting the temporal relation between a pair of events given their context. While some temporal reasoning models perform reasonably well on in-domain benchmarks, we have little idea of how well these systems generalize, because of the limitations of existing datasets. In this work, we introduce a novel task named TODAY that bridges this gap with temporal differential analysis, which, as the name suggests, evaluates whether systems can correctly understand the effect of incremental changes. Specifically, TODAY makes slight changes to the context of a given event pair, and systems need to tell how this subtle contextual change affects the distribution over temporal relations. To facilitate learning, TODAY also provides human-annotated explanations. We show that existing models, including GPT-3, drop to random guessing on TODAY, suggesting that they rely heavily on spurious information rather than proper reasoning for temporal predictions. On the other hand, we show that TODAY's supervision style and explanation annotations can be used in joint learning, encouraging models to use more appropriate signals during training and thus to perform better across several benchmarks. TODAY can also be used to train models to solicit incidental supervision from noisy sources such as GPT-3, moving further toward generic temporal reasoning systems.
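To make the differential setup concrete, the following is a minimal sketch of how such an evaluation could be organized. It is not the released TODAY data format or the evaluation code of this work: the field names, the `Scorer` interface, the toy scorer, and the example instance are all hypothetical and invented for illustration. The sketch assumes a model exposes the probability that the first event happens before the second, and checks whether adding a sentence to the context moves that probability in the annotated direction.

```python
from dataclasses import dataclass
from typing import Callable, Sequence


@dataclass
class DifferentialInstance:
    """One hypothetical differential-analysis example (field names are assumptions)."""
    context: str
    event_a: str
    event_b: str
    additional_sentence: str  # the slight context change
    shift: str                # "before" or "after": the relation that becomes more likely


# Assumed model interface: (context, event_a, event_b) -> P(event_a before event_b)
Scorer = Callable[[str, str, str], float]


def predicts_shift(score: Scorer, inst: DifferentialInstance) -> bool:
    """Return True if the context change moves P(before) in the annotated direction."""
    p_base = score(inst.context, inst.event_a, inst.event_b)
    changed_context = inst.additional_sentence + " " + inst.context
    p_changed = score(changed_context, inst.event_a, inst.event_b)
    delta = p_changed - p_base
    if delta == 0:
        return False  # the model did not react to the change at all
    predicted = "before" if delta > 0 else "after"
    return predicted == inst.shift


def accuracy(score: Scorer, instances: Sequence[DifferentialInstance]) -> float:
    """Fraction of instances whose shift direction is predicted correctly."""
    if not instances:
        raise ValueError("need at least one instance")
    return sum(predicts_shift(score, i) for i in instances) / len(instances)


def toy_scorer(context: str, event_a: str, event_b: str) -> float:
    """Placeholder keyword heuristic standing in for a real model."""
    return 0.8 if "in advance" in context.lower() else 0.5


# Invented example, not taken from the dataset.
example = DifferentialInstance(
    context="Maria hosted a dinner party on Saturday.",
    event_a="Maria bought groceries",
    event_b="the dinner party started",
    additional_sentence="Maria always shops in advance.",
    shift="before",
)

print(accuracy(toy_scorer, [example]))
```

Scoring the direction of change, rather than the absolute relation, means a model that ignores the added sentence cannot succeed by relying on priors about the event pair alone, which is the behavior the task is designed to expose.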
