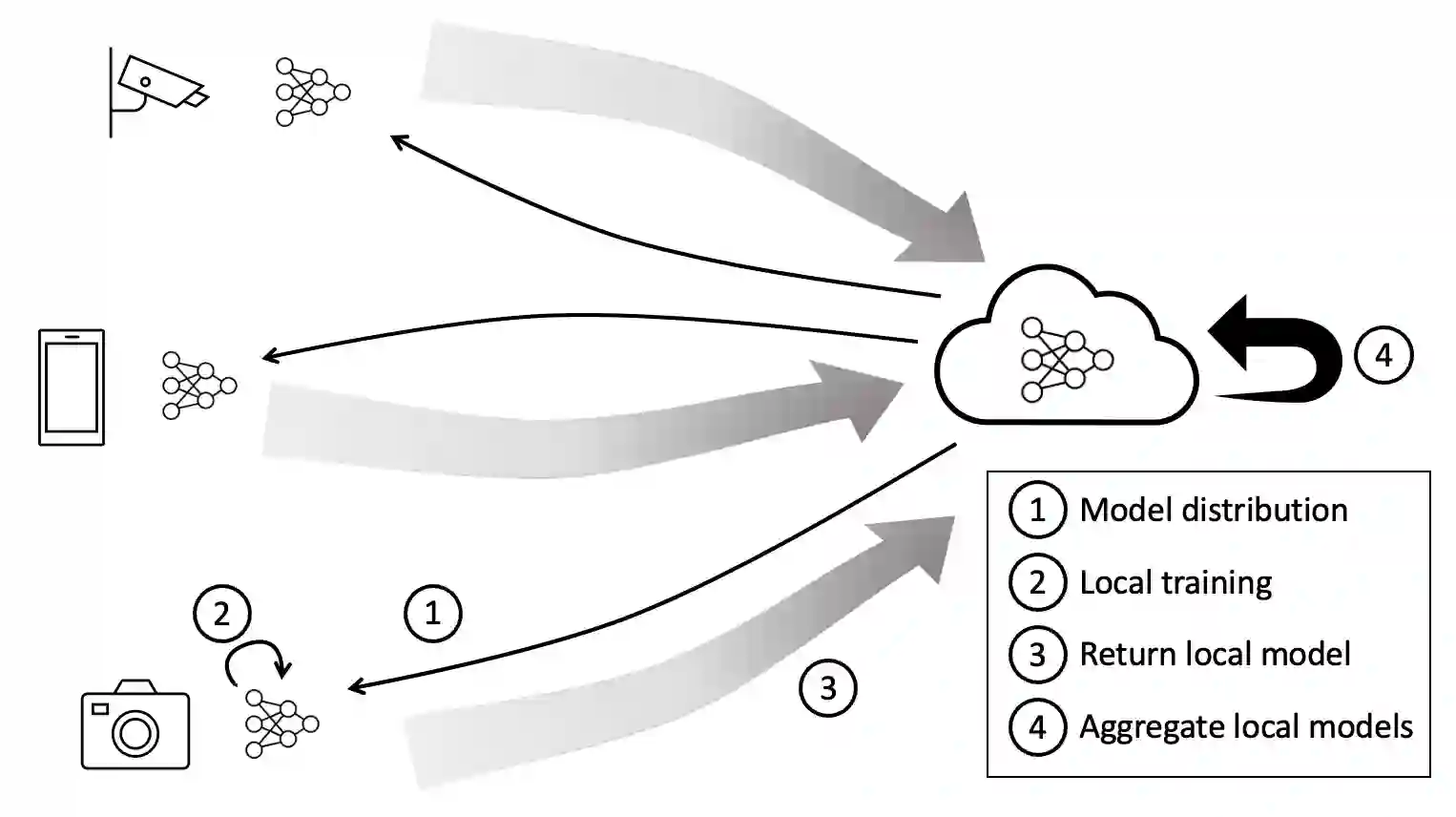

Federated Learning offers a way to train deep neural networks in a distributed fashion. While this addresses limitations related to distributed data, it incurs a communication overhead, as the model parameters or gradients need to be exchanged regularly during training. This can be an issue with large-scale distribution of learning tasks and can negate the benefit of the respective resource distribution. In this paper, we propose to utilise parallel Adapters for Federated Learning. Using various datasets, we show that Adapters can be applied with different Federated Learning techniques. We highlight that our approach can achieve inference performance similar to training the full model while drastically reducing the communication overhead. We further explore the applicability of Adapters in cross-silo and cross-device settings, as well as under different non-IID data distributions.
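To make the communication argument concrete, the following is a minimal sketch, not the paper's implementation. It assumes a FedAvg-style weighted average as one example of a Federated Learning technique, represents model state as plain Python lists, and uses a hypothetical `adapter.` key prefix to mark the trainable Adapter parameters. It does not model the parallel Adapter architecture itself, only the idea that clients exchange the Adapter parameters while the backbone stays frozen and local.

```python
from typing import Dict, List

# Toy model state: parameter name -> flat list of values (assumption for illustration).
State = Dict[str, List[float]]

ADAPTER_PREFIX = "adapter."  # hypothetical naming convention, not from the paper


def adapter_payload(state: State) -> State:
    """Keep only the adapter parameters; the frozen backbone is never sent."""
    return {k: v for k, v in state.items() if k.startswith(ADAPTER_PREFIX)}


def num_values(state: State) -> int:
    """Count the scalar values a state would put on the wire."""
    return sum(len(v) for v in state.values())


def fedavg(payloads: List[State], num_samples: List[int]) -> State:
    """Average client payloads, weighted by each client's local sample count."""
    total = sum(num_samples)
    averaged: State = {}
    for key in payloads[0]:
        size = len(payloads[0][key])
        averaged[key] = [
            sum(p[key][i] * n for p, n in zip(payloads, num_samples)) / total
            for i in range(size)
        ]
    return averaged


# Two toy clients with a large frozen backbone and a tiny adapter.
client_a: State = {"backbone.w": [0.0] * 1000, "adapter.w": [1.0, 2.0]}
client_b: State = {"backbone.w": [0.0] * 1000, "adapter.w": [3.0, 6.0]}

payloads = [adapter_payload(client_a), adapter_payload(client_b)]
global_adapter = fedavg(payloads, num_samples=[1, 3])

sent = num_values(payloads[0])
full = num_values(client_a)
print(f"values sent per client per round: {sent} of {full}")
print("aggregated adapter:", global_adapter)
```

In this toy setup each client uploads 2 values per round instead of 1002. The actual saving in practice depends on the Adapter size relative to the backbone, which the abstract does not specify.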
