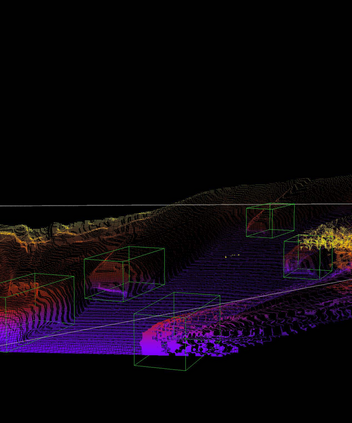

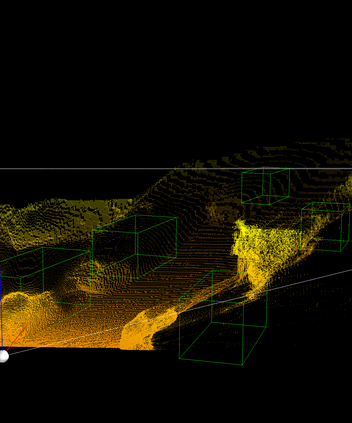

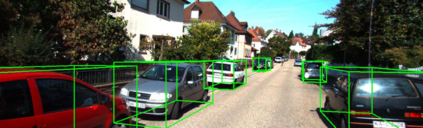

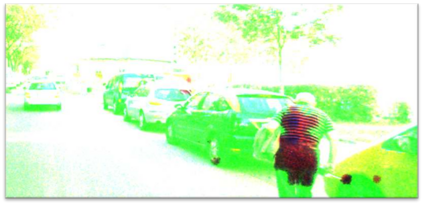

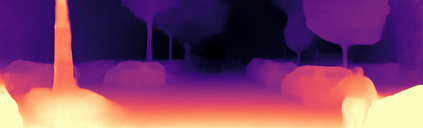

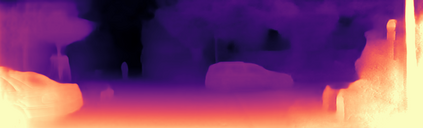

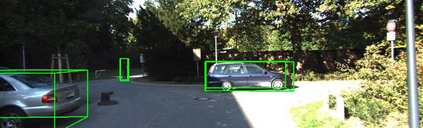

3D object detection from a single image without LiDAR is a challenging task due to the lack of accurate depth information. Conventional 2D convolutions are unsuitable for this task because they fail to capture local object and its scale information, which are vital for 3D object detection. To better represent 3D structure, prior arts typically transform depth maps estimated from 2D images into a pseudo-LiDAR representation, and then apply existing 3D point-cloud based object detectors. However, their results depend heavily on the accuracy of the estimated depth maps, resulting in suboptimal performance. In this work, instead of using pseudo-LiDAR representation, we improve the fundamental 2D fully convolutions by proposing a new local convolutional network (LCN), termed Depth-guided Dynamic-Depthwise-Dilated LCN (D$^4$LCN), where the filters and their receptive fields can be automatically learned from image-based depth maps, making different pixels of different images have different filters. D$^4$LCN overcomes the limitation of conventional 2D convolutions and narrows the gap between image representation and 3D point cloud representation. Extensive experiments show that D$^4$LCN outperforms existing works by large margins. For example, the relative improvement of D$^4$LCN against the state-of-the-art on KITTI is 9.1\% in the moderate setting.

翻译:由于缺少准确深度信息,从一个没有LiDAR的图像中检测3D对象是一项具有挑战性的任务。常规 2D 演进不适合这项任务,因为没有捕捉本地天体及其规模信息,而这些天体和规模信息对于3D天体探测至关重要。为了更好地代表 3D 结构,先行艺术通常会将从 2D 图像估计的深度地图转换成假LiDAR 代表器,然后应用现有的 3D 点球形天体探测器。但是,其结果在很大程度上取决于估计深度地图的准确性,从而导致不尽善的性能。在这项工作中,我们不使用伪LIDAR 代表器,而是通过提出一个新的本地光学网络(LCN)来改进基本 2D 的2D, 来改进基本 2D, 并缩小目前D- L 4 的图像代表器 和 3D- L 级的图像代表器之间的距离。通过 D- D- 4 的大规模图像代表器 和 D- D- L 平面的图像代表器 展示显示大型的D- D- L 的大型图像比值 。