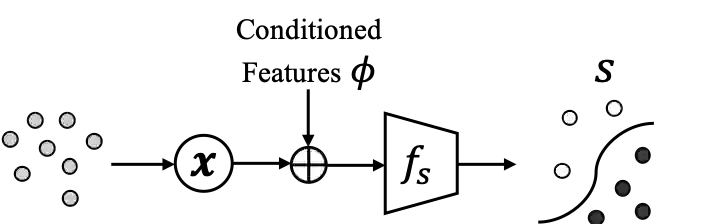

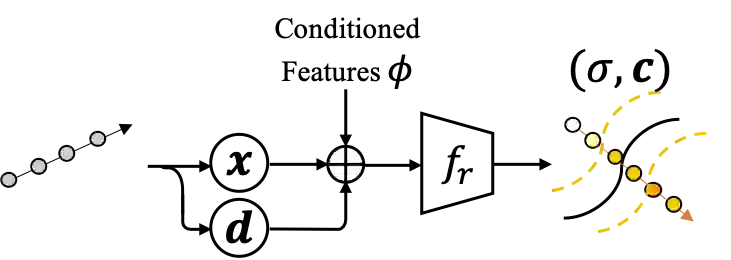

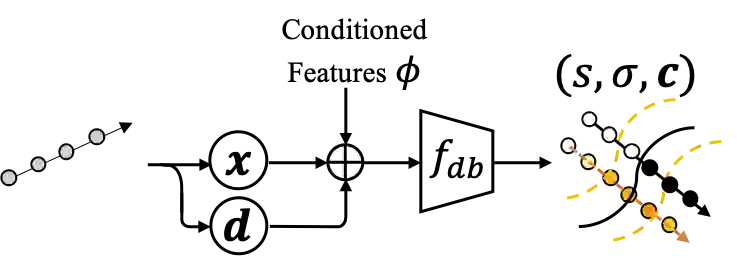

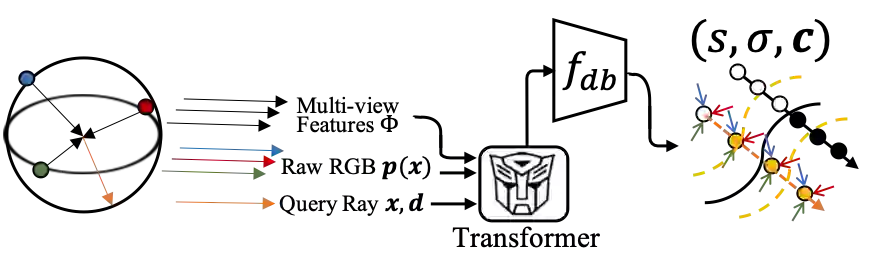

We introduce DoubleField, a novel representation that combines the merits of the surface field and the radiance field for high-fidelity human rendering. Within DoubleField, the two fields are tied together by a shared feature embedding and a surface-guided sampling strategy. As a result, DoubleField has a continuous but disentangled learning space for geometry and appearance modeling, which supports fast training, inference, and finetuning. To achieve high-fidelity free-viewpoint rendering, DoubleField is further augmented to leverage ultra-high-resolution inputs: a view-to-view transformer and a transfer learning scheme are introduced for more efficient learning and finetuning from sparse-view inputs at their original resolutions. The efficacy of DoubleField is validated by quantitative evaluations on several datasets and by qualitative results from a real-world sparse multi-view system, which show its superior capability for photo-realistic free-viewpoint human rendering. For code and a demo video, please refer to our project page: http://www.liuyebin.com/dbfield/dbfield.html.
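The abstract does not spell out the surface-guided sampling procedure, so the following is only a toy sketch of one plausible reading: a surface field is queried along a ray, and the samples handed to the radiance field are concentrated in a narrow band around the first surface crossing. Everything here is an assumption made for illustration, not the authors' implementation: the analytic sphere stands in for the learned surface field, and the names `surface_field` and `surface_guided_samples`, the sample counts, and the `band` width are hypothetical.

```python
import numpy as np


def surface_field(points):
    """Toy stand-in for a learned surface field (assumption).

    Returns a soft occupancy for a unit sphere at the origin:
    close to 1 inside, close to 0 outside, 0.5 on the surface.
    """
    dist = np.linalg.norm(points, axis=-1)
    return 1.0 / (1.0 + np.exp(20.0 * (dist - 1.0)))


def surface_guided_samples(origin, direction, near, far,
                           n_coarse=64, n_fine=16, band=0.1):
    """Return ray depths concentrated around the first surface crossing.

    A coarse pass queries the surface field along the ray; the fine
    samples are then placed within +/- `band` of the first depth whose
    occupancy exceeds 0.5. An empty array means the ray missed the
    surface, so no radiance queries are needed for it.
    """
    t_coarse = np.linspace(near, far, n_coarse)
    points = origin + t_coarse[:, None] * direction
    inside = surface_field(points) > 0.5
    if not inside.any():
        return np.empty(0)
    t_hit = t_coarse[int(np.argmax(inside))]  # first coarse depth inside
    lo = max(near, t_hit - band)
    hi = min(far, t_hit + band)
    return np.linspace(lo, hi, n_fine)


# A ray aimed at the sphere, and one that misses it entirely.
direction = np.array([0.0, 0.0, 1.0])
hit_depths = surface_guided_samples(np.array([0.0, 0.0, -3.0]), direction, 0.0, 6.0)
miss_depths = surface_guided_samples(np.array([5.0, 5.0, -3.0]), direction, 0.0, 6.0)
```

In this sketch the ray that hits the sphere gets all of its fine samples near the front surface, while the missing ray gets none. That illustrates why guiding the radiance queries with the surface field could reduce the number of samples spent on empty space.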
