【ECCV2018】Code Implementations for 24 Papers

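【Introduction】ECCV2018, one of the top conferences in computer vision, opened on September 8 in Munich, Germany, with the first two days devoted to workshops. Before the main conference officially begins, let's look at the open-source code released by the authors of 24 ECCV2018 papers.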

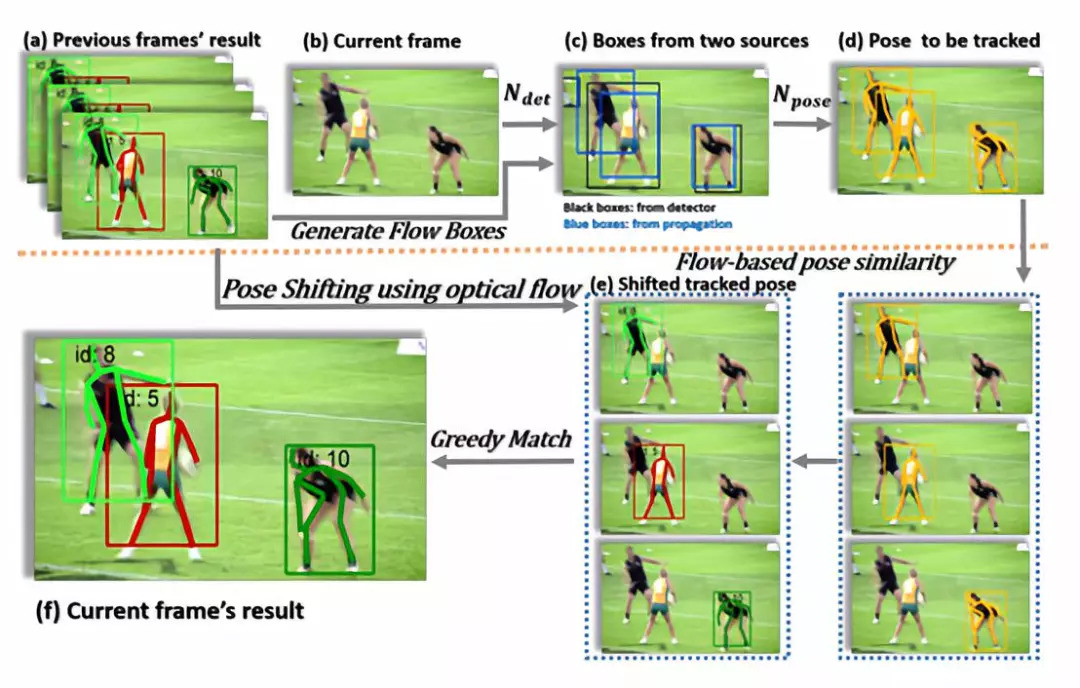

1. Simple Baselines for Human Pose Estimation and Tracking (357 stars)

Paper: https://arxiv.org/abs/1804.06208

Code: https://github.com/Microsoft/human-pose-estimation.pytorch#simple-baselines-for-human-pose-estimation-and-tracking

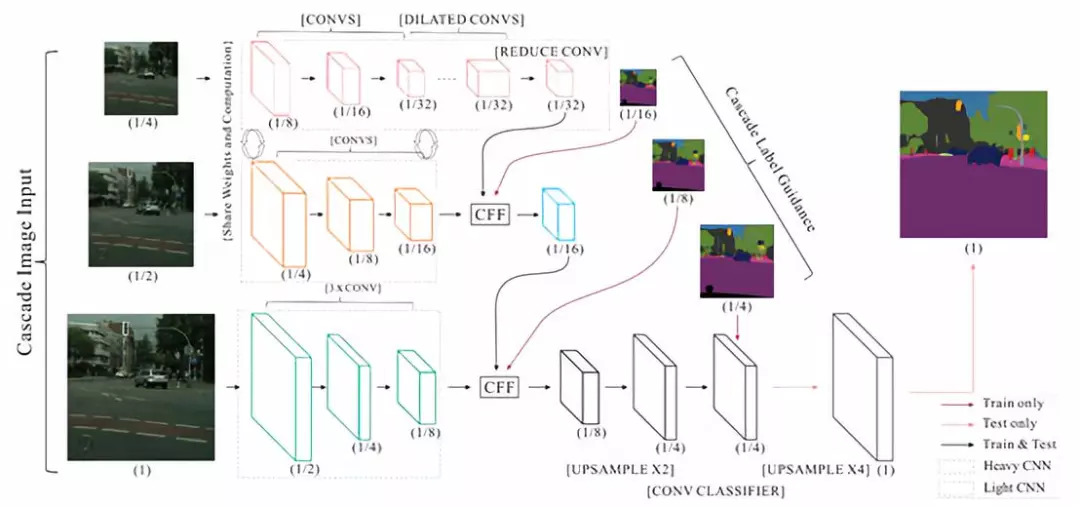

2. ICNet for Real-Time Semantic Segmentation on High-Resolution Images (344 stars)

Paper: https://hszhao.github.io/projects/icnet/

Code: https://github.com/hszhao/ICNet

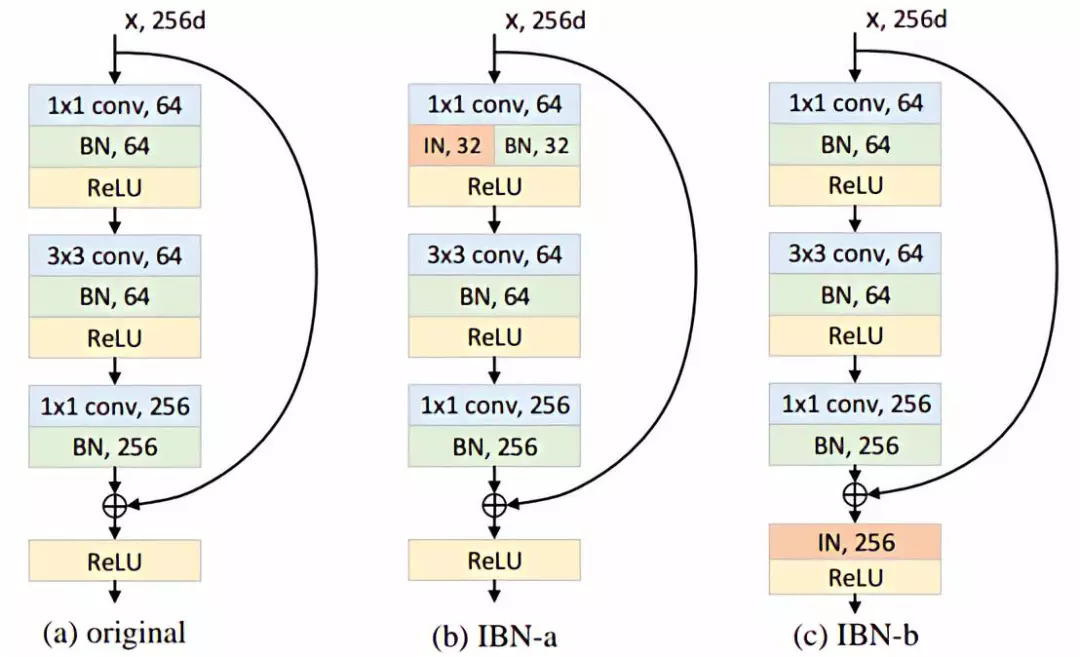

3. Instance-Batch Normalization Network (225 stars)

Paper: https://arxiv.org/abs/1807.09441

Code: https://github.com/XingangPan/IBN-Net

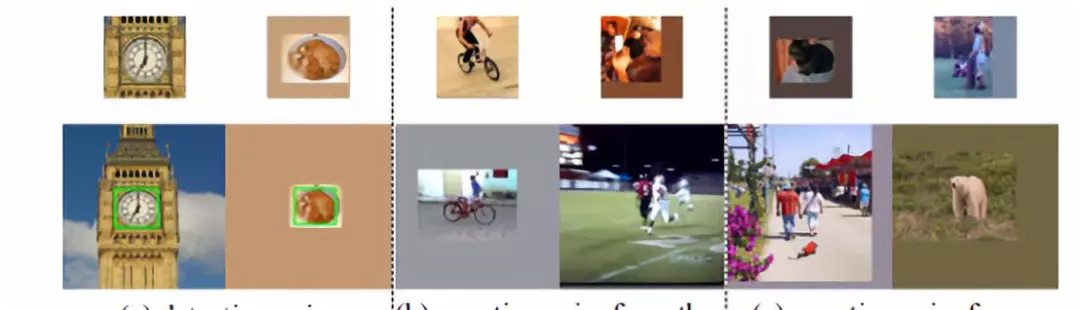

4. Distractor-aware Siamese Networks for Visual Object Tracking (225 stars)

Paper: https://arxiv.org/pdf/1808.06048.pdf

Code: https://github.com/foolwood/DaSiamRPN

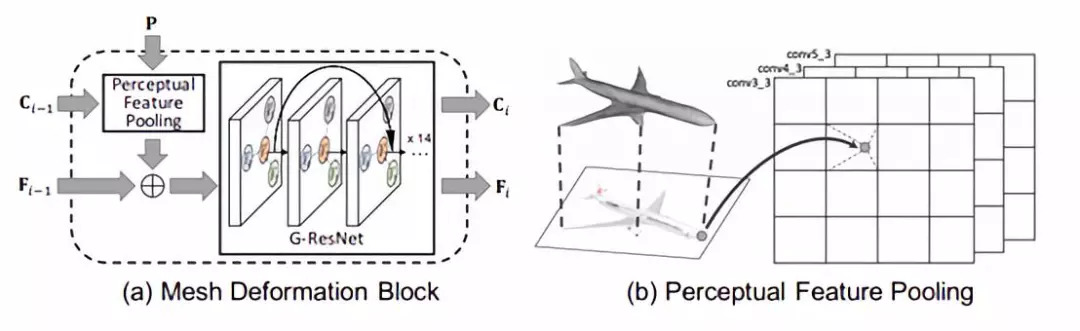

5. Pixel2Mesh: Generating 3D Mesh Models from Single RGB Images (98 stars)

Paper: https://arxiv.org/abs/1804.01654

Code: https://github.com/nywang16/Pixel2Mesh

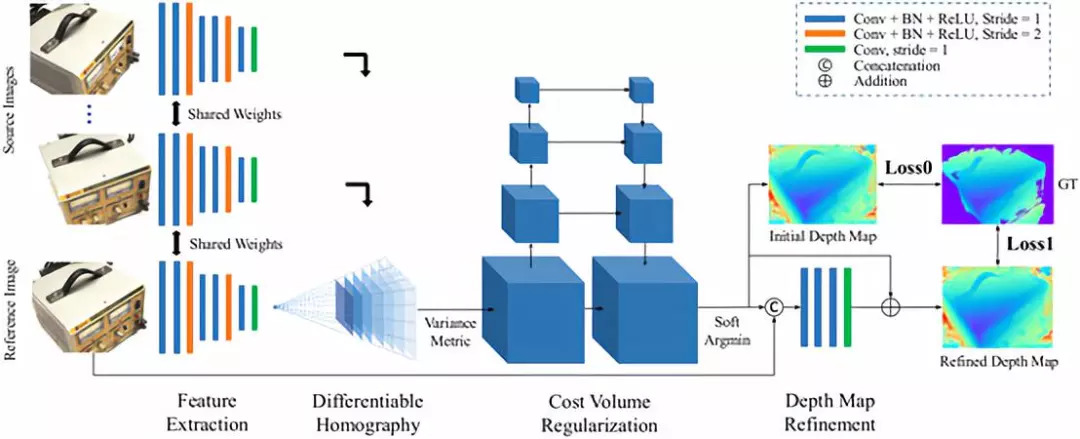

6. MVSNet: Depth Inference for Unstructured Multi-view Stereo (85 stars)

Paper: https://arxiv.org/abs/1804.02505

Code: https://github.com/YoYo000/MVSNet

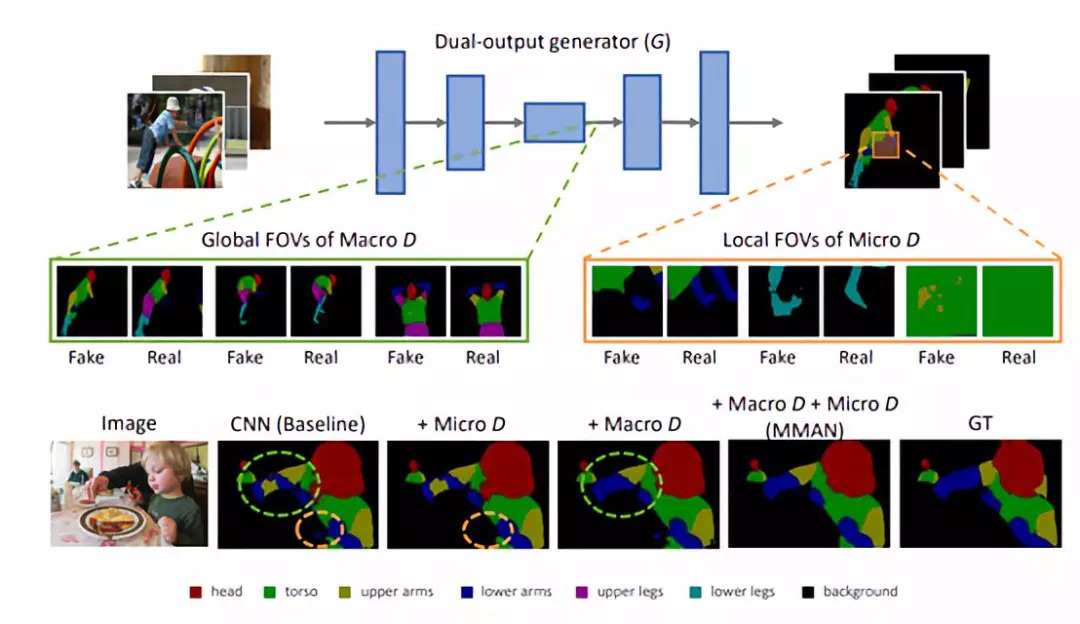

7. Macro-Micro Adversarial Network for Human Parsing (61 stars)

Paper: https://arxiv.org/abs/1807.08260

Code: https://github.com/RoyalVane/MMAN

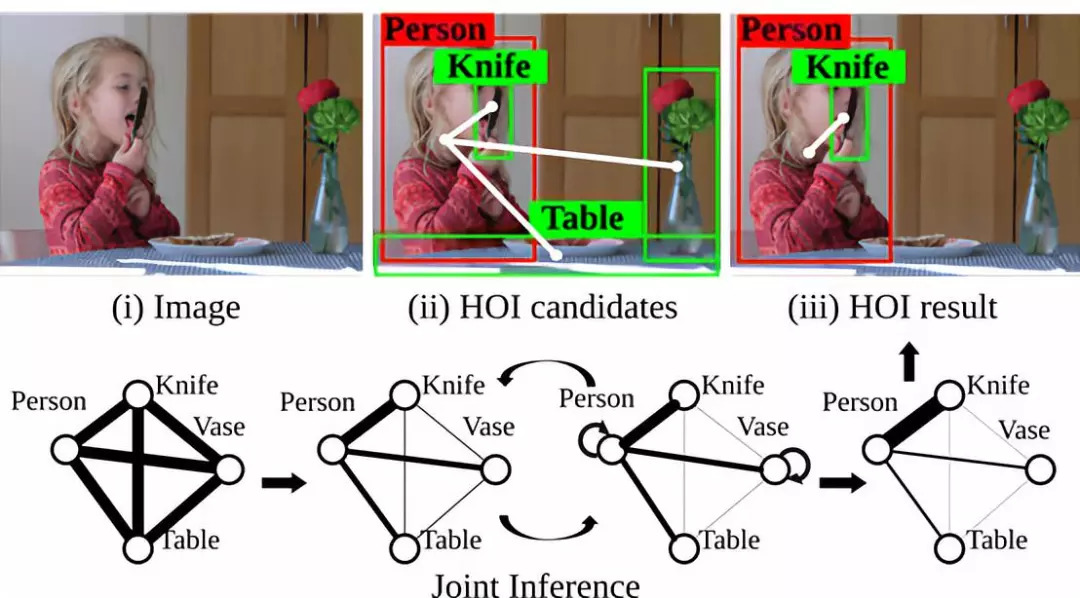

8. Learning Human-Object Interactions by Graph Parsing Neural Networks (54 stars)

Paper: http://web.cs.ucla.edu/~syqi/publications/eccv2018gpnn/eccv2018gpnn.pdf

Code: https://github.com/SiyuanQi/gpnn

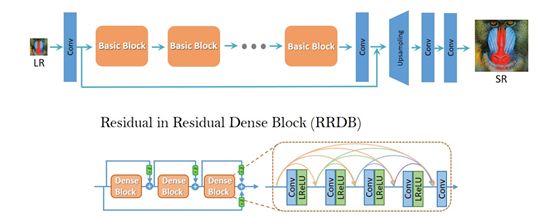

9. Enhanced Super-Resolution Generative Adversarial Networks

Paper: https://arxiv.org/abs/1809.00219

Code: https://github.com/xinntao/ESRGAN

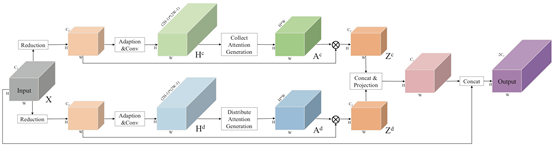

10. PSANet: Point-wise Spatial Attention Network for Scene Parsing (34 stars)

Paper: https://hszhao.github.io/projects/psanet/

Code: https://github.com/hszhao/PSANet

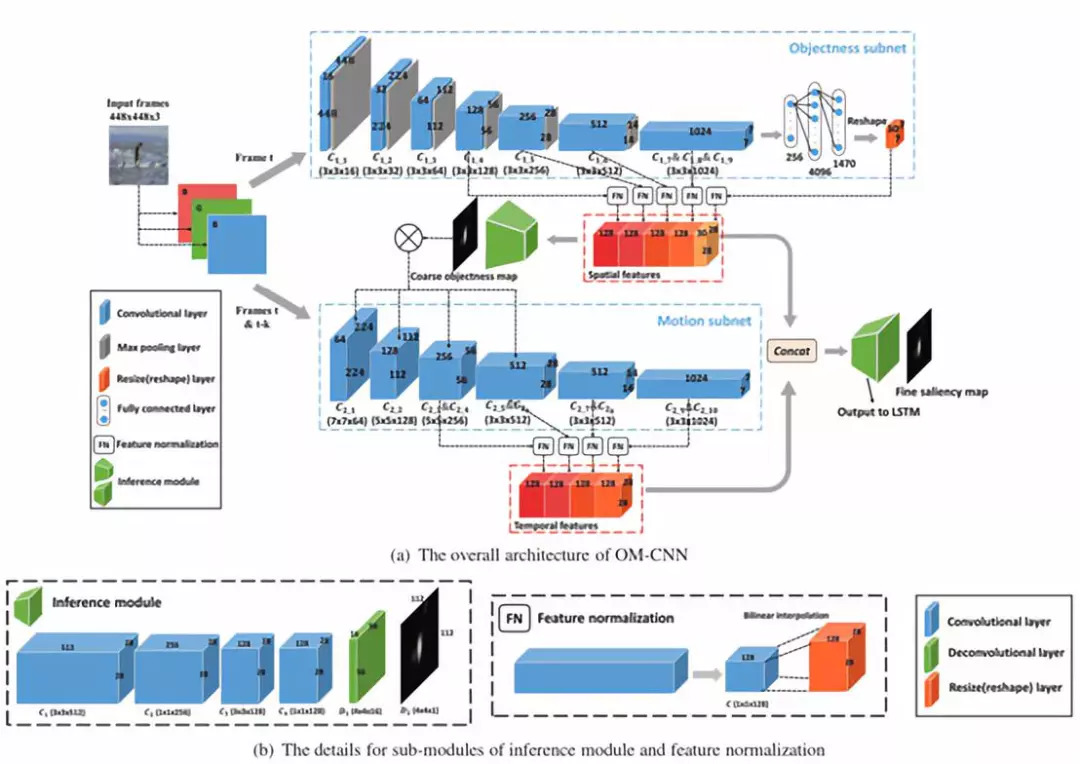

11. OM-CNN+2C-LSTM for Video Saliency Prediction

Paper: https://arxiv.org/abs/1709.06316

Code: https://github.com/remega/OMCNN_2CLSTM

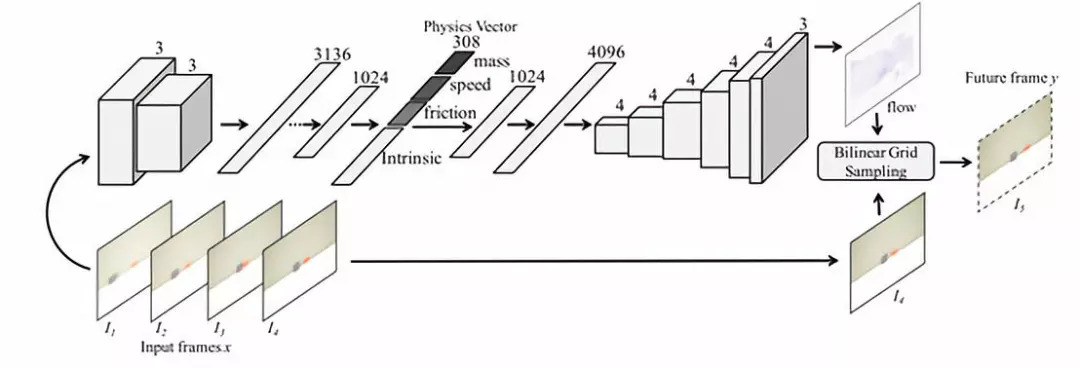

12. Interpretable Intuitive Physics Model

Paper: https://www.cs.cmu.edu/~xiaolonw/papers/ECCV_Physics_Cameraready.pdf

Code: https://github.com/tianye95/interpretable-intuitive-physics-model

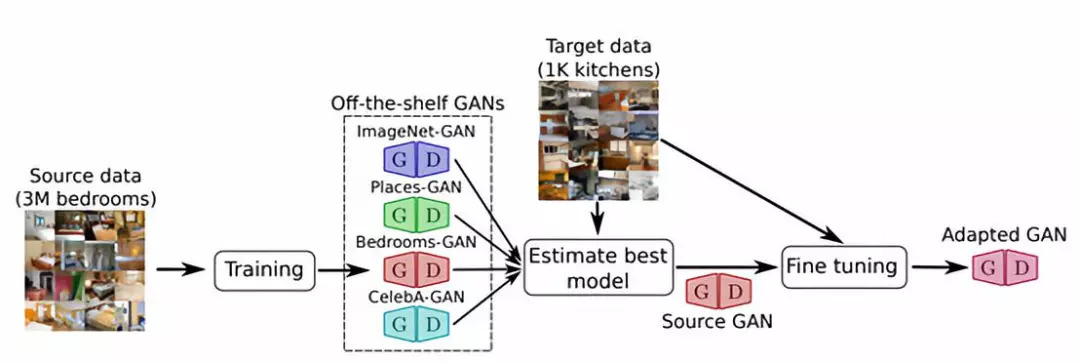

13. Transferring GANs: Generating Images from Limited Data

Paper: https://arxiv.org/abs/1805.01677

Code: https://github.com/yaxingwang/Transferring-GANs

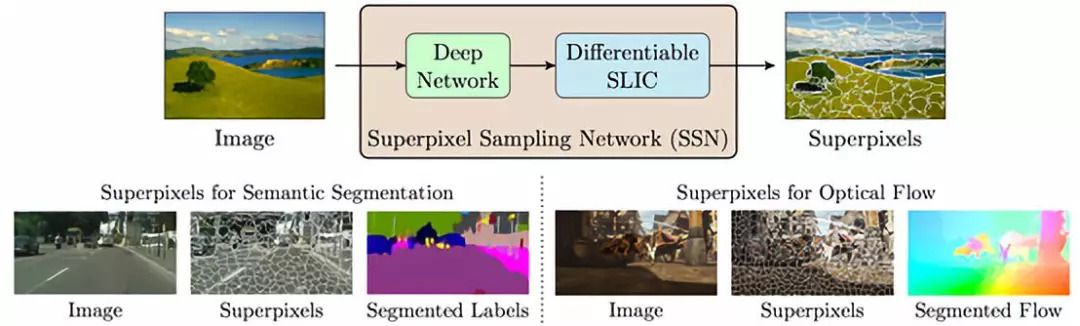

14. Superpixel Sampling Networks

Paper: https://varunjampani.github.io/ssn/

Code: https://github.com/NVlabs/ssn_superpixels

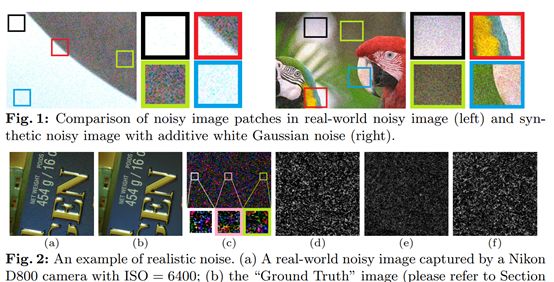

15. A Trilateral Weighted Sparse Coding Scheme for Real-World Image Denoising

Paper: https://arxiv.org/abs/1807.04364

Code: https://github.com/csjunxu/TWSC-ECCV2018

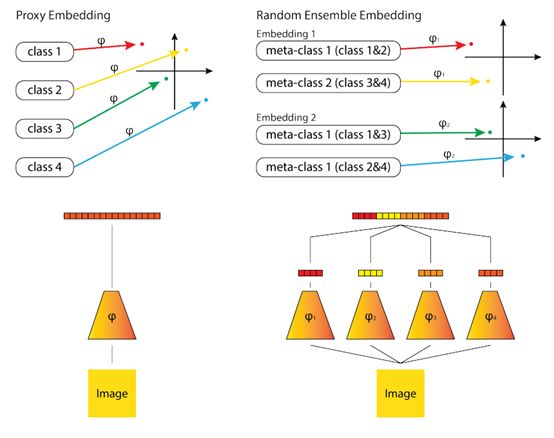

16. Deep Randomized Ensembles for Metric Learning

Paper: https://arxiv.org/abs/1808.04469

Code: https://github.com/littleredxh/DREML

17. VisDrone2018 Challenge: Object Detection in Images

Challenge homepage: http://www.aiskyeye.com/

Code: https://github.com/zhpmatrix/VisDrone2018

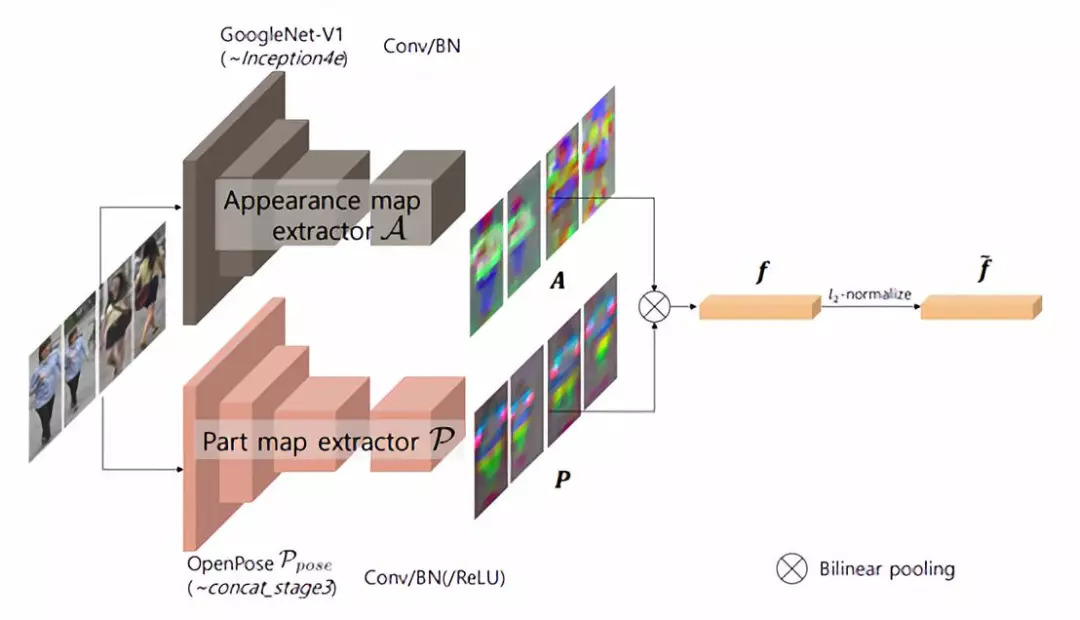

18. Part-Aligned Bilinear Representations for Person Re-identification

Paper: https://cv.snu.ac.kr/publication/conf/2018/reid_eccv18.pdf

Code: https://github.com/yuminsuh/part_bilinear_reid

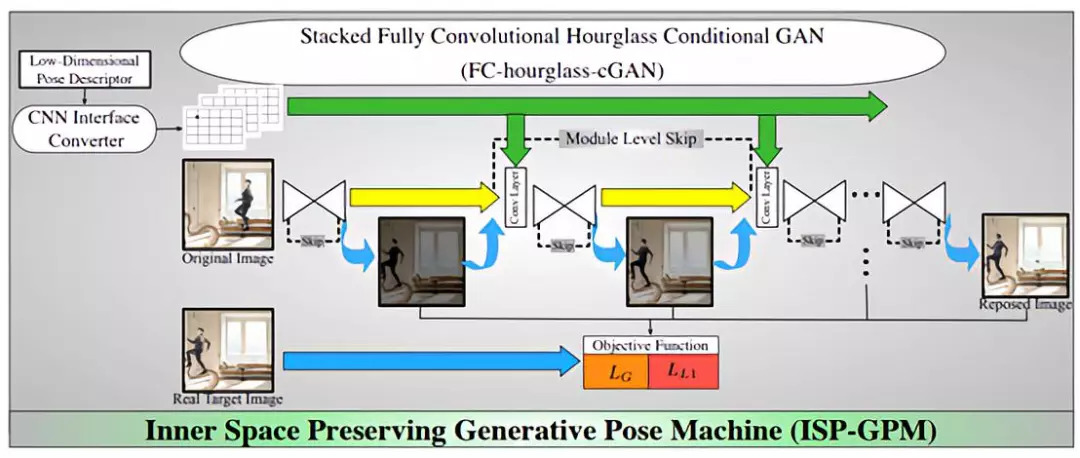

19. Inner Space Preserving Generative Pose Machine (ISP-GPM)

Paper: https://arxiv.org/abs/1808.02104

Code: https://github.com/ostadabbas/isp-gpm

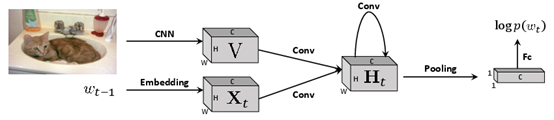

20. Rethinking the Form of Latent States in Image Captioning

Paper: https://arxiv.org/abs/1807.09958

Code: https://github.com/doubledaibo/2dcaption_eccv2018

21. Diverse Conditional Image Generation by Stochastic Regression with Latent Drop-Out Codes

Paper: https://arxiv.org/abs/1808.01121

Code: https://github.com/SSAW14/Image_Generation_with_Latent_Code

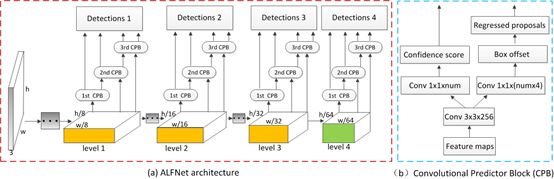

22. Learning Efficient Single-stage Pedestrian Detectors by Asymptotic Localization Fitting

Paper: https://github.com/liuwei16/ALFNet/blob/master/docs/2018ECCV-ALFNet.pdf

Code: https://github.com/liuwei16/ALFNet

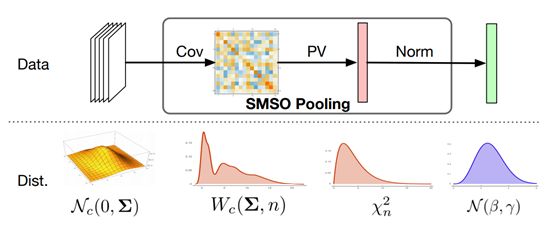

23. Statistically-motivated Second-order Pooling

Paper: https://arxiv.org/abs/1801.07492

Code: https://github.com/kcyu2014/smsop

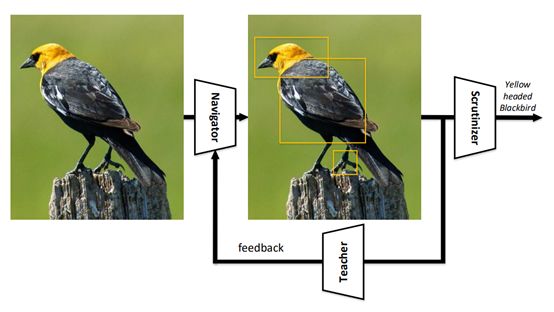

24. Learning to Navigate for Fine-grained Classification

Paper: https://arxiv.org/abs/1809.00287

Code: https://github.com/yangze0930/NTS-Net
