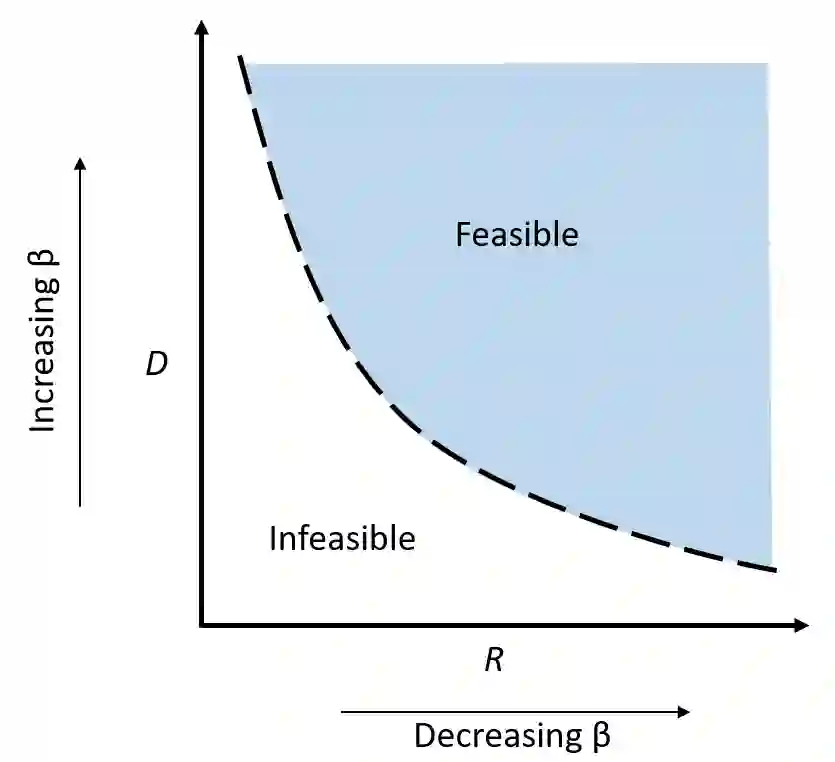

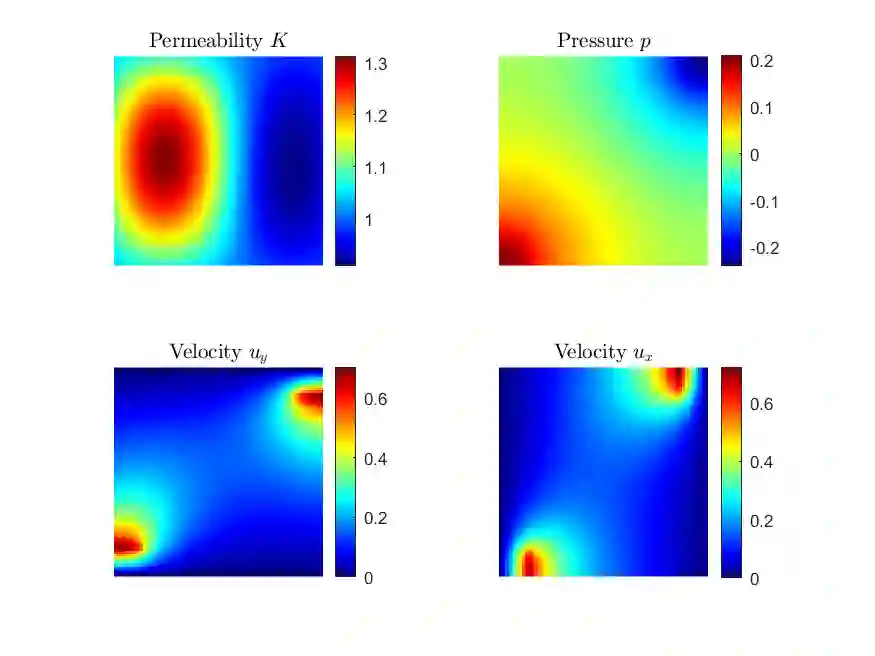

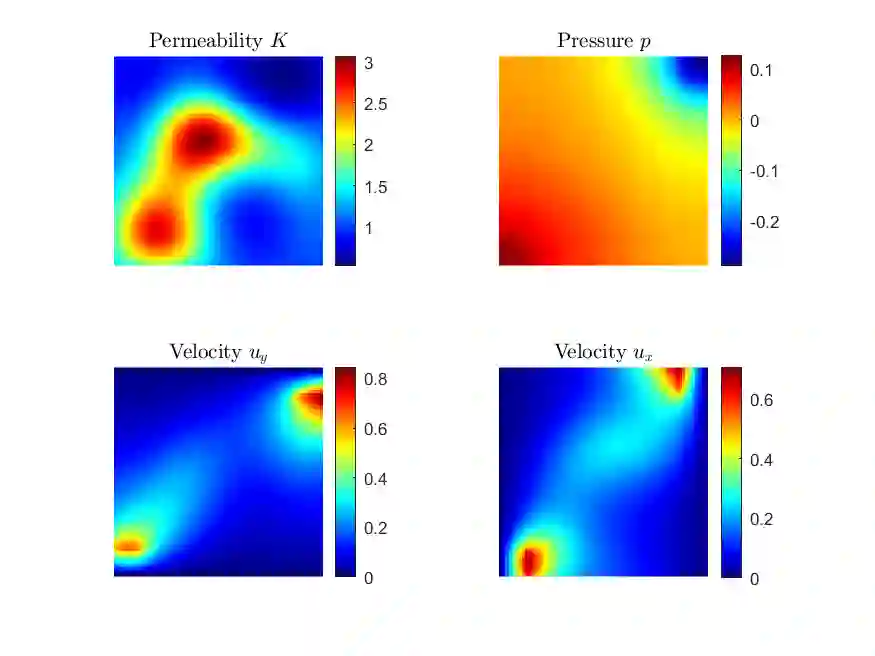

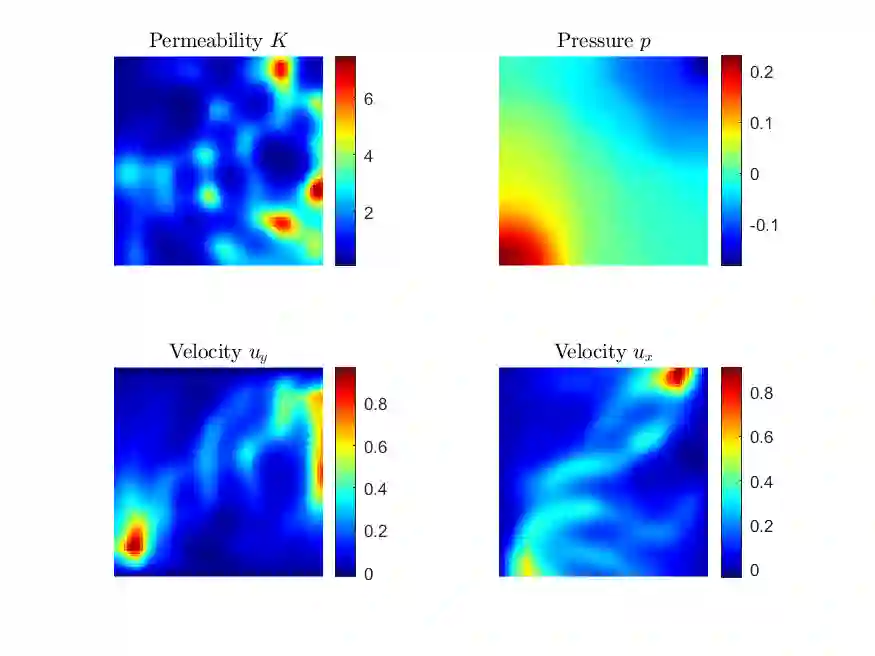

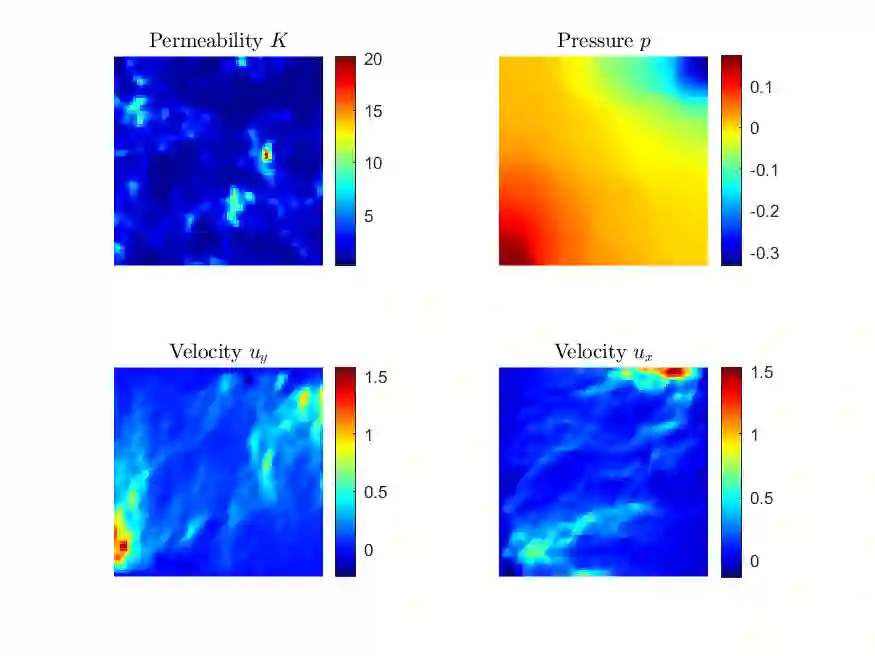

The ability to extract generative parameters from high-dimensional fields of data in an unsupervised manner is a highly desirable yet unrealized goal in computational physics. This work explores the use of variational autoencoders (VAEs) for non-linear dimension reduction with the specific aim of *disentangling* the low-dimensional latent variables to identify the independent physical parameters that generated the data. A disentangled decomposition is interpretable and can be transferred to a variety of tasks, including generative modeling, design optimization, and probabilistic reduced-order modeling. A major emphasis of this work is to characterize disentanglement using VAEs while minimally modifying the classic VAE loss function (i.e., the Evidence Lower Bound) to maintain high reconstruction accuracy. The loss landscape is characterized by over-regularized local minima that surround desirable solutions. We compare disentangled and entangled representations by juxtaposing the learned latent distributions with the true generative factors in a model porous flow problem. Hierarchical priors are shown to facilitate the learning of disentangled representations. When training with rotationally invariant priors, the regularization loss is unaffected by rotations of the latent space; learning non-rotationally-invariant priors therefore aids in capturing the properties of the generative factors and improves disentanglement. Finally, it is shown that semi-supervised learning, accomplished by labeling a small number of samples ($O(1\%)$), yields accurate disentangled latent representations that can be learned consistently.
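The rotation argument can be checked numerically. The following is a minimal sketch, not the authors' code. It makes three assumptions:

- The latent space is 2-D.
- The encoder posterior is Gaussian, $q(z \mid x) = \mathcal{N}(\mu, \Sigma)$.
- The regularization term is the closed-form Gaussian KL divergence to the prior.

Rotating the latent space by $R$ maps $\mathcal{N}(\mu, \Sigma)$ to $\mathcal{N}(R\mu, R\Sigma R^\top)$. The trace, the squared norm and the determinant are all unchanged by a rotation, so the KL divergence to $\mathcal{N}(0, I)$ is unchanged as well. The KL divergence to an anisotropic prior is not. Here a hypothetical fixed prior $\mathcal{N}(0, \mathrm{diag}(4, 0.25))$ stands in for a learned one. The posterior values are made up for illustration.

```python
import math

# 2x2 matrices are nested tuples ((a, b), (c, d)); vectors are (x, y).

def matmul(A, B):
    return tuple(
        tuple(sum(A[i][k] * B[k][j] for k in range(2)) for j in range(2))
        for i in range(2)
    )

def transpose(A):
    return ((A[0][0], A[1][0]), (A[0][1], A[1][1]))

def matvec(A, v):
    return tuple(A[i][0] * v[0] + A[i][1] * v[1] for i in range(2))

def det(A):
    return A[0][0] * A[1][1] - A[0][1] * A[1][0]

def inv(A):
    d = det(A)
    return ((A[1][1] / d, -A[0][1] / d), (-A[1][0] / d, A[0][0] / d))

def rotation(theta):
    """Counter-clockwise 2-D rotation matrix."""
    c, s = math.cos(theta), math.sin(theta)
    return ((c, -s), (s, c))

def gaussian_kl(mu0, S0, mu1, S1):
    """Closed-form KL( N(mu0, S0) || N(mu1, S1) ) in 2-D, in nats."""
    S1_inv = inv(S1)
    M = matmul(S1_inv, S0)
    trace_term = M[0][0] + M[1][1]
    diff = (mu1[0] - mu0[0], mu1[1] - mu0[1])
    w = matvec(S1_inv, diff)
    mahalanobis = diff[0] * w[0] + diff[1] * w[1]
    return 0.5 * (trace_term + mahalanobis - 2 + math.log(det(S1) / det(S0)))

def rotate_posterior(mu, S, R):
    """Distribution of R z when z ~ N(mu, S): N(R mu, R S R^T)."""
    return matvec(R, mu), matmul(matmul(R, S), transpose(R))

# Hypothetical encoder output q(z|x) for a single sample.
mu = (0.8, -0.3)
S = ((0.2, 0.0), (0.0, 0.5))
mu_rot, S_rot = rotate_posterior(mu, S, rotation(math.pi / 5))

zero = (0.0, 0.0)
iso_prior = ((1.0, 0.0), (0.0, 1.0))     # N(0, I): rotationally invariant
aniso_prior = ((4.0, 0.0), (0.0, 0.25))  # hypothetical anisotropic prior

kl_iso = gaussian_kl(mu, S, zero, iso_prior)
kl_iso_rot = gaussian_kl(mu_rot, S_rot, zero, iso_prior)
kl_aniso = gaussian_kl(mu, S, zero, aniso_prior)
kl_aniso_rot = gaussian_kl(mu_rot, S_rot, zero, aniso_prior)

print(f"N(0, I) prior:     {kl_iso:.4f} vs rotated {kl_iso_rot:.4f}")
print(f"anisotropic prior: {kl_aniso:.4f} vs rotated {kl_aniso_rot:.4f}")
```

The script prints the same KL value twice for the $\mathcal{N}(0, I)$ prior and two different values for the anisotropic prior. A decoder can absorb the inverse rotation, so under $\mathcal{N}(0, I)$ this term gives no preference for one set of latent axes over another. The sketch ignores one detail: a diagonal-covariance encoder cannot represent the rotated, non-diagonal $R\Sigma R^\top$ exactly.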

翻译:以不受监督的方式从高维数据领域提取基因化参数的能力是计算物理学中一个非常可取但却没有实现的目标。 这项工作探索了使用变式自动电解码器(VAEs)来减少非线性维度,具体目的是保持高重建精确度。 低维潜伏变量的特征是围绕生成数据的独立物理参数。 分解是可解释的, 并可以转移到各种任务, 包括变异模型、 设计精确的标签缩放模型和精确的降序模型。 这项工作的主要重点是使用变式自动电解码器(VAEs)来描述分解情况, 同时尽可能地修改典型的 VAE损失函数(即证据低库), 以保持高重建精确度为具体目的。 损失环境的特征是过于常规化的本地微型, 围绕合适的解决方案。 我们通过对学习的隐性潜伏分布和在模型多孔流中的真正基因变异变因素进行对比。 高级前级前期显示有助于学习稳定性变换的变换, 正规性演算是前变变变的变变性, 。