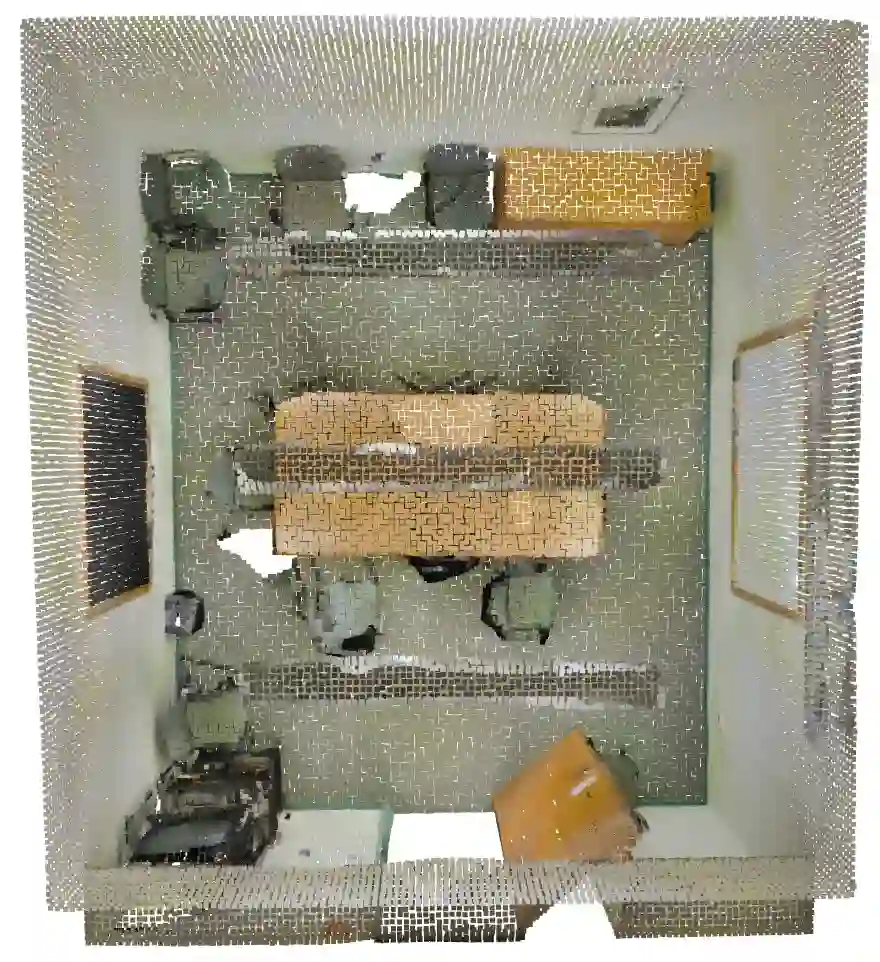

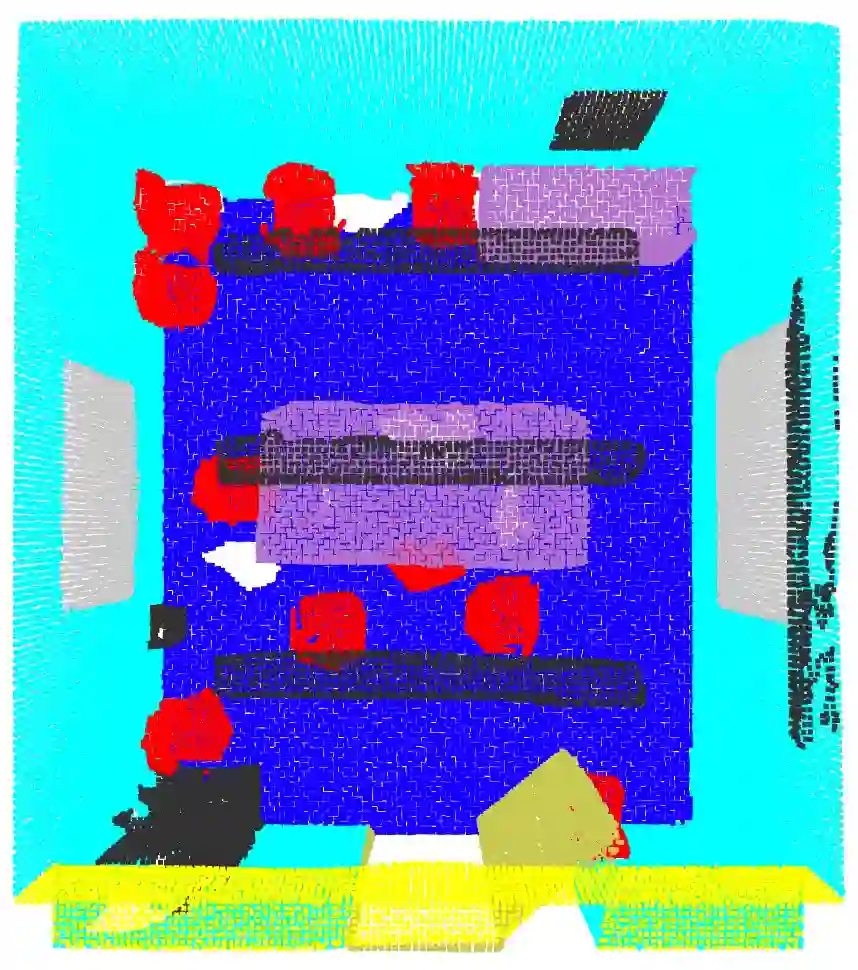

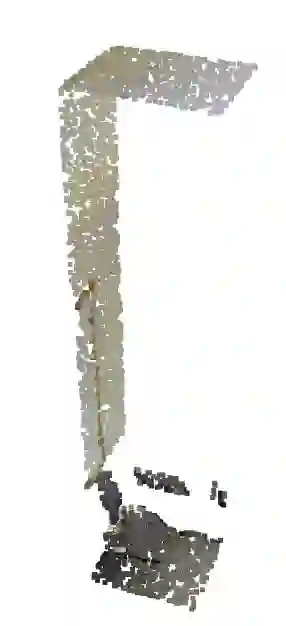

This work proposes an efficient solution for semantic segmentation of large-scale indoor scene point clouds. It is named GSIP (Green Segmentation of Indoor Point clouds), and its performance is evaluated on a representative large-scale benchmark, the Stanford 3D Indoor Segmentation (S3DIS) dataset. GSIP has two novel components: 1) a room-style data pre-processing method that selects a proper subset of points for further processing, and 2) a new feature extractor extended from PointHop. For the former, the sampled points of each room form one input unit. For the latter, the weaknesses of PointHop's feature extraction when it is extended to large-scale point clouds are identified and fixed with a simpler processing pipeline. Compared with PointNet, a pioneering deep-learning-based solution, GSIP is green in that it has significantly lower computational complexity and a much smaller model size. Furthermore, experiments show that GSIP outperforms PointNet in segmentation performance on the S3DIS dataset.
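The room-style pre-processing idea, where a sampled subset of each room's points forms one input unit, can be illustrated with a minimal sketch. The abstract does not say how points are selected, so uniform random sampling is an assumption here, as are the names `sample_room` and `build_input_units`, the `n_samples` parameter, and the padding rule for small rooms. This is not the authors' implementation.

```python
import random
from typing import Dict, List, Sequence, Tuple

# Assumption: a point is just (x, y, z); real S3DIS points also carry colour.
Point = Tuple[float, float, float]


def sample_room(points: Sequence[Point], n_samples: int, seed: int = 0) -> List[Point]:
    """Pick n_samples points from one room (uniform random; an assumed strategy)."""
    if not points:
        raise ValueError("room contains no points")
    rng = random.Random(seed)
    pts = list(points)
    if len(pts) >= n_samples:
        # Enough points: sample without replacement.
        return rng.sample(pts, n_samples)
    # Too few points: keep them all and pad by sampling with replacement.
    return pts + rng.choices(pts, k=n_samples - len(pts))


def build_input_units(rooms: Dict[str, Sequence[Point]], n_samples: int) -> Dict[str, List[Point]]:
    """Turn every room into one fixed-size input unit."""
    return {name: sample_room(pts, n_samples) for name, pts in rooms.items()}


if __name__ == "__main__":
    # Hypothetical toy rooms, not S3DIS data.
    rooms = {
        "office_1": [(float(i), 0.0, 0.0) for i in range(100)],
        "hallway_1": [(0.0, float(i), 0.0) for i in range(5)],
    }
    units = build_input_units(rooms, n_samples=16)
    for name, unit in units.items():
        print(name, len(unit))
```

Treating a whole room as one unit means each unit has the same size regardless of how many points the raw scan contains, which keeps downstream feature extraction at a fixed cost per room.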
