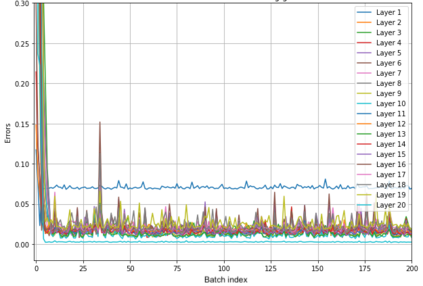

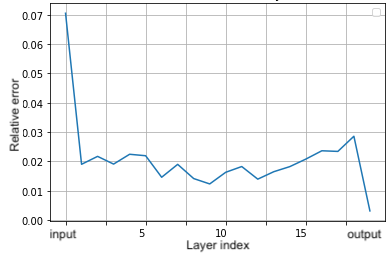

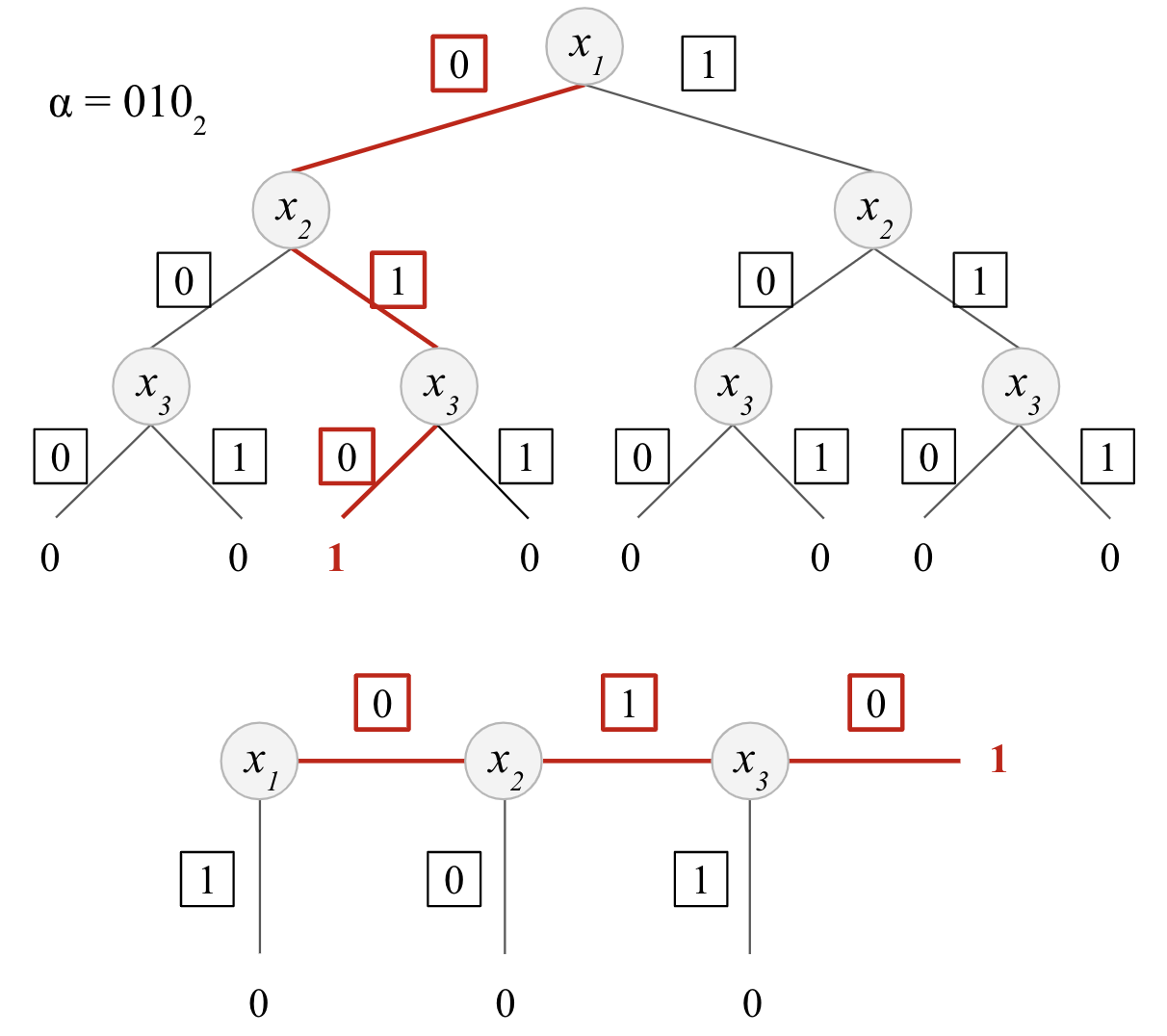

We propose AriaNN, a low-interaction privacy-preserving framework for private neural network training and inference on sensitive data. Our semi-honest 2-party computation protocol (with a trusted dealer) leverages function secret sharing, a recent lightweight cryptographic protocol that lets us achieve an efficient online phase. We design optimized primitives for the building blocks of neural networks, such as ReLU, MaxPool, and BatchNorm. For instance, we perform private comparison for ReLU operations with a single message of the size of the input during the online phase, and with preprocessing keys close to 4× smaller than in previous work. Finally, we propose an extension to support n-party private federated learning. We implement our framework as an extensible system on top of PyTorch that leverages CPU and GPU hardware acceleration for cryptographic and machine learning operations. We evaluate our end-to-end system for private inference between distant servers on standard neural networks such as AlexNet, VGG16, and ResNet18, and for private training on smaller networks such as LeNet. We show that computation, rather than communication, is the main bottleneck, and that using GPUs together with reduced key size is a promising way to overcome this barrier.
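To make the online-phase claim concrete, here is a toy sketch of private comparison via function secret sharing with a trusted dealer. It is not AriaNN's construction: instead of compact comparison keys, the hypothetical dealer here secret-shares the full truth table of the comparison function over a tiny ring (integers mod 2^8), which is a valid but exponentially large FSS scheme. The names `dealer_setup`, `eval_key`, and `private_sign_bit`, the 8-bit ring, and the use of `random.Random` (not a cryptographically secure generator) are illustrative assumptions. What the sketch does show is the message pattern: in the online phase each party sends one masked ring element, and purely local key evaluation yields additive shares of the bit [x ≥ 0] that ReLU needs.

```python
import random

# Toy ring Z_{2^8}; an illustrative assumption, not AriaNN's actual ring size.
N_BITS = 8
MOD = 1 << N_BITS


def to_signed(v):
    """Interpret an element of Z_{2^n} as a two's-complement integer."""
    v %= MOD
    return v - MOD if v >= MOD // 2 else v


def share(value, rng):
    """Additively secret-share `value` into two shares mod 2^n."""
    s0 = rng.randrange(MOD)
    return s0, (value - s0) % MOD


def dealer_setup(rng):
    """Hypothetical trusted dealer (offline phase).

    Samples a random mask alpha, shares it, and builds truth-table FSS keys:
    additive shares of g(y) = 1 if signed(y - alpha) >= 0 else 0.
    For the masked input y = x + alpha, g(y) is exactly the bit [x >= 0].
    Key size is 2^n here; real FSS comparison keys are far smaller.
    """
    alpha = rng.randrange(MOD)
    alpha_shares = share(alpha, rng)
    table = [1 if to_signed(y - alpha) >= 0 else 0 for y in range(MOD)]
    key0 = [rng.randrange(MOD) for _ in range(MOD)]  # uniformly random
    key1 = [(t - r) % MOD for t, r in zip(table, key0)]  # table - key0
    return alpha_shares, (key0, key1)


def eval_key(key, y):
    """Evaluate one party's FSS key at the public point y (local, no interaction)."""
    return key[y % MOD]


def private_sign_bit(x_shares, dealer_material):
    """Return additive shares of [x >= 0] from additive shares of x."""
    alpha_shares, keys = dealer_material
    # Online phase: each party adds its share of alpha to its share of x and
    # sends the result (one ring element), so both learn y = x + alpha, not x.
    masked = [(xs + a) % MOD for xs, a in zip(x_shares, alpha_shares)]
    y = sum(masked) % MOD
    # Each party evaluates its own key locally; outputs sum to [x >= 0] mod 2^n.
    return tuple(eval_key(k, y) for k in keys)


if __name__ == "__main__":
    rng = random.Random(0)
    for x in (-5, 0, 7):
        b0, b1 = private_sign_bit(share(x, rng), dealer_setup(rng))
        print(x, (b0 + b1) % MOD)  # prints: -5 0, then 0 1, then 7 1
```

Inputs must lie in [-128, 127] for the two's-complement sign test to be meaningful in this 8-bit ring. The dealer-chosen mask α is what keeps the revealed value y = x + α independent of x, and each key on its own is a uniformly random table, so neither party learns the comparison result from its share alone.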
