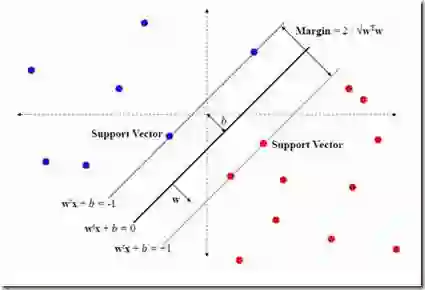

Objective: Transferring a classifier usually involves dataset shifts. To overcome them, online learning strategies have to be applied. For practical applications, the limited computational resources available for adapting batch learning algorithms, like the SVM, have to be considered. Approach: We review and compare several strategies for online learning with SVMs. We focus on data selection strategies which limit the size of the stored training data [...] Main Results: For different data shifts, different criteria are appropriate. For the synthetic data, adding all samples to the pool of considered samples often performs significantly worse than other criteria. In particular, adding only misclassified samples performed remarkably well. Here, balancing criteria were very important when the other criteria were not well chosen. For the transfer setups, the results show that the best strategy depends on the intensity of the drift during the transfer. For stronger drifts, adding all samples and removing the oldest ones results in the best performance, whereas for smaller drifts, it can be sufficient to add only potential new support vectors of the SVM, which reduces the required processing resources. Significance: For BCIs based on EEG, models trained on data from a calibration session, a previous recording session, or even a recording session with one or several other subjects are used. This transfer of the learned model usually decreases the performance and can therefore benefit from online learning, which adapts a classifier such as the established SVM. We show that by using the right combination of data selection criteria, it is possible to adapt the classifier and largely increase the performance. Furthermore, in some cases it is possible to speed up the processing and save computational resources by updating with a subset of special samples and keeping a small subset of samples for training the classifier.
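The selection criteria named in the abstract (add all samples, add only misclassified samples, add only potential new support vectors, remove the oldest samples, and balance the classes) can be illustrated with a minimal Python sketch. This is not the authors' implementation. The names `should_add` and `SamplePool`, the criterion strings, the margin threshold of 1 for "potential new support vector", and the particular balancing rule (drop the oldest sample of the same class as the newcomer) are all assumptions made for illustration. The classifier itself is left out: the sketch only expects its decision value for each new sample.

```python
from typing import List, Sequence, Tuple

Sample = Tuple[Sequence[float], int]  # (feature vector, label in {-1, +1})


def should_add(score: float, label: int, criterion: str) -> bool:
    """Decide whether a newly labeled sample enters the training pool.

    score is the current classifier's decision value f(x); label is -1 or +1.
    """
    margin = label * score
    if criterion == "all":
        return True
    if criterion == "misclassified":
        return margin < 0
    if criterion == "within_margin":
        # Assumption: a sample inside the margin is a potential support vector.
        return margin < 1
    raise ValueError(f"unknown criterion: {criterion}")


class SamplePool:
    """Bounded pool of training samples, ordered oldest first (hypothetical helper)."""

    def __init__(self, max_size: int, criterion: str = "all", balance: bool = False):
        self.max_size = max_size
        self.criterion = criterion
        self.balance = balance
        self.samples: List[Sample] = []

    def update(self, x: Sequence[float], label: int, score: float) -> bool:
        """Return True if the pool changed, i.e. the classifier should be updated."""
        if not should_add(score, label, self.criterion):
            return False
        self.samples.append((x, label))
        if len(self.samples) > self.max_size:
            self._remove_one(label)
        return True

    def _remove_one(self, new_label: int) -> None:
        # Assumed balancing rule: drop the oldest sample of the newcomer's class
        # so the class ratio in the pool stays unchanged.
        if self.balance:
            for i, (_, y) in enumerate(self.samples[:-1]):
                if y == new_label:
                    del self.samples[i]
                    return
        # Default (and fallback): drop the oldest sample overall.
        del self.samples[0]
```

In use, each incoming labeled sample would be passed to `update` together with the current decision value, and the SVM would be retrained or incrementally updated on `pool.samples` only when `update` returns `True`. A pool with `criterion="all"` retrains on every sample, while `"within_margin"` or `"misclassified"` skips the update for samples the classifier already handles well.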
