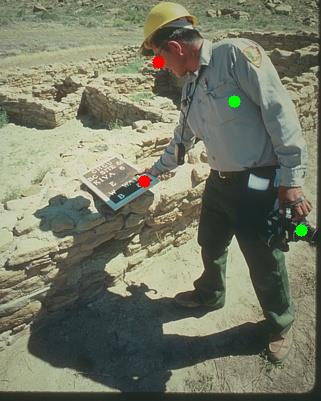

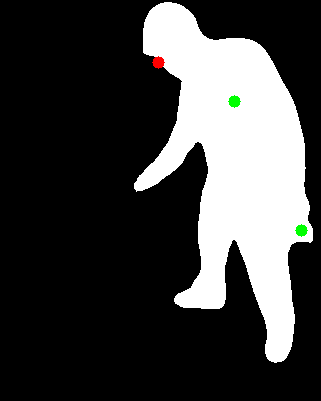

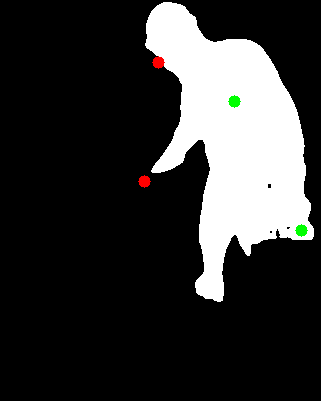

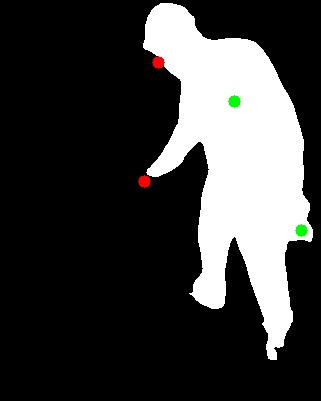

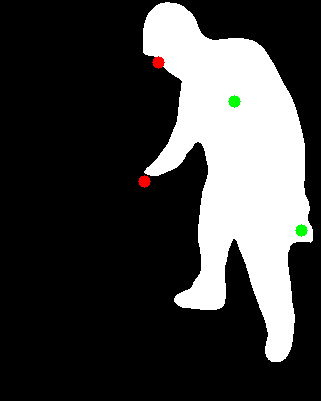

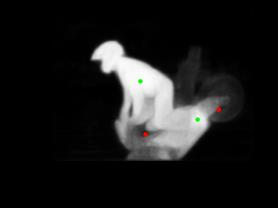

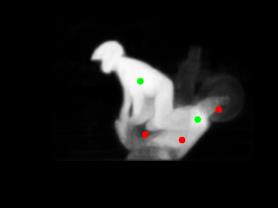

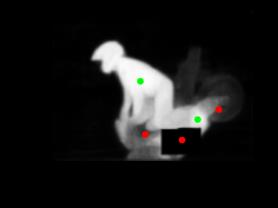

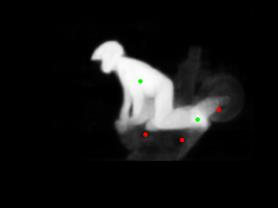

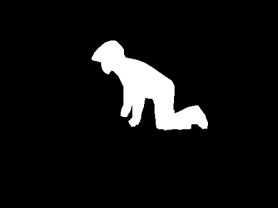

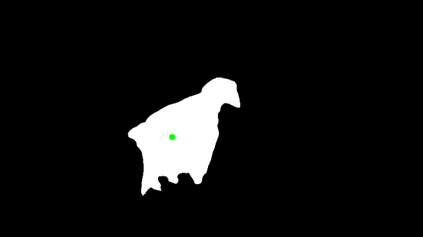

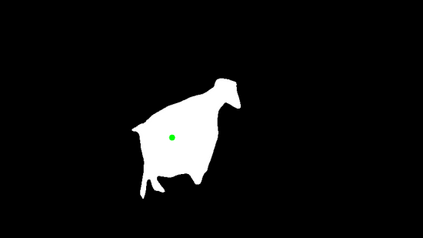

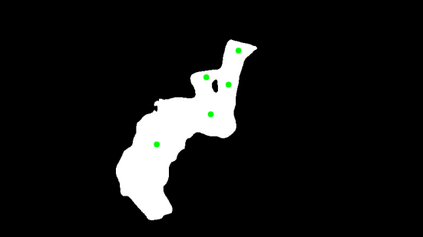

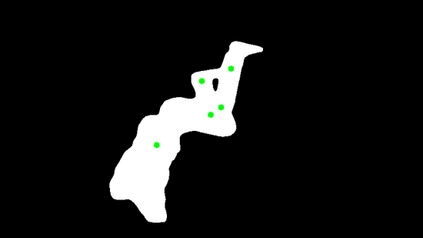

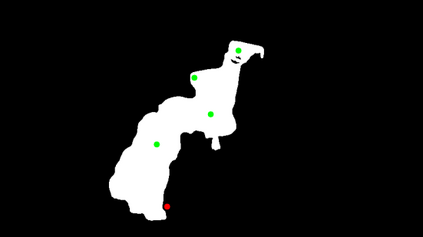

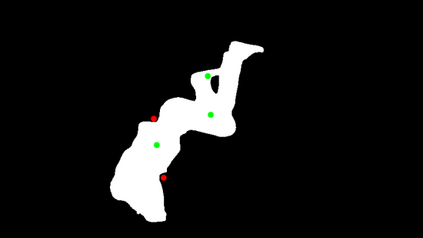

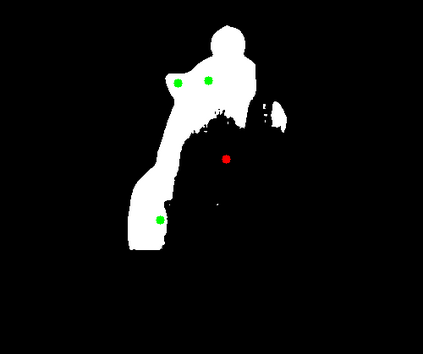

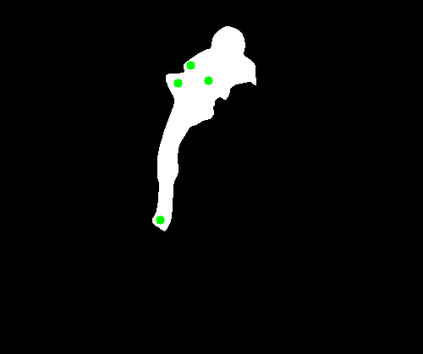

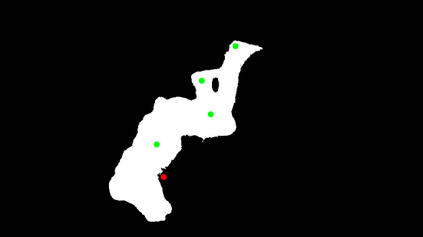

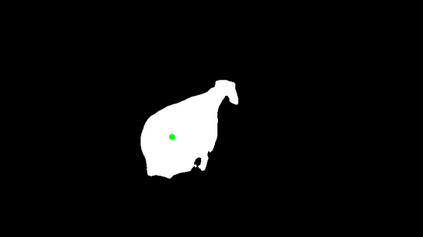

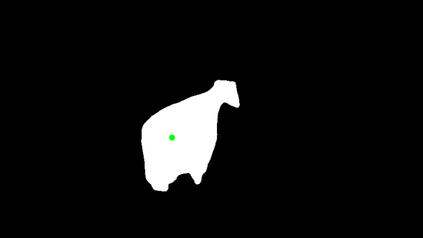

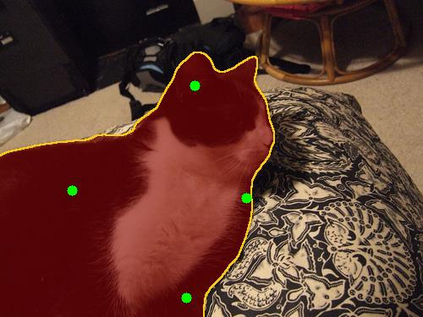

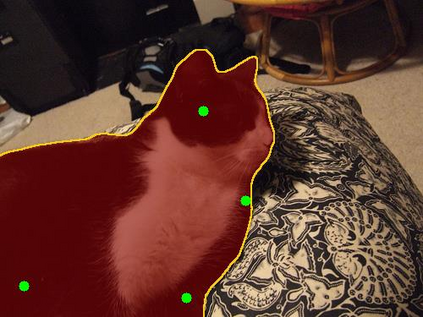

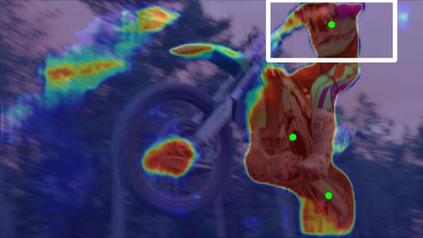

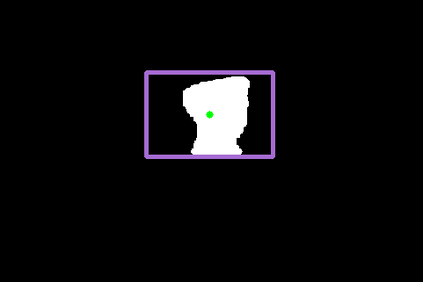

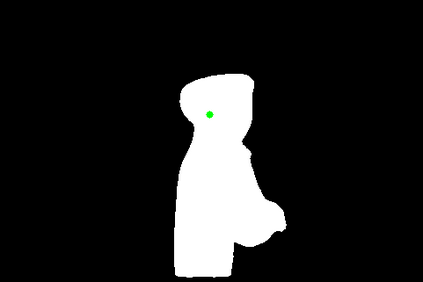

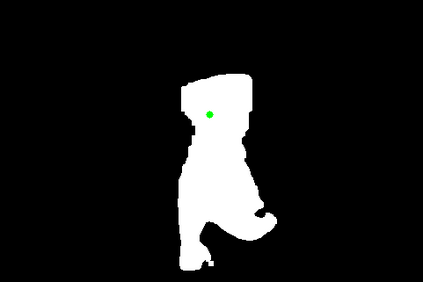

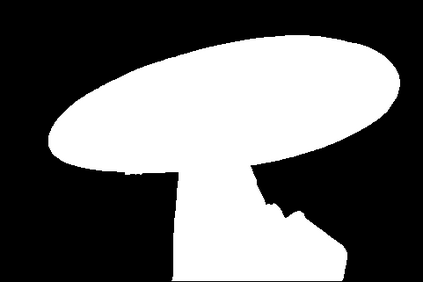

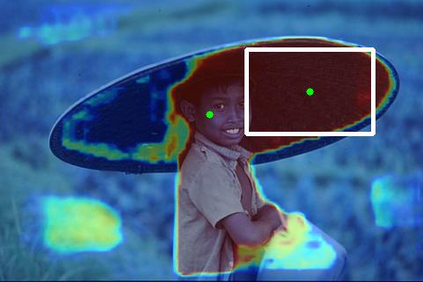

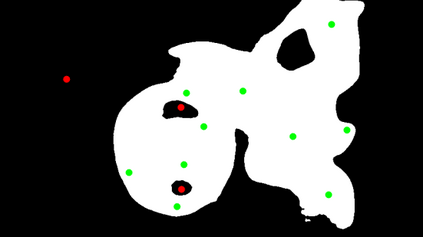

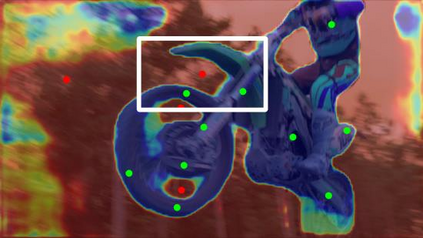

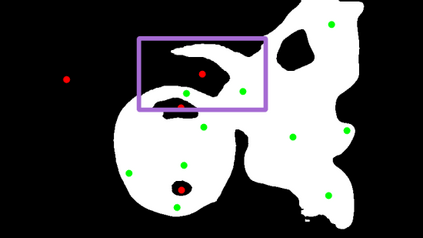

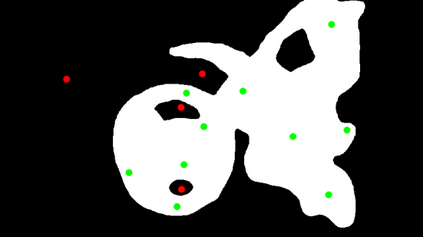

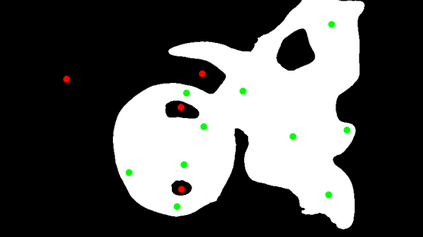

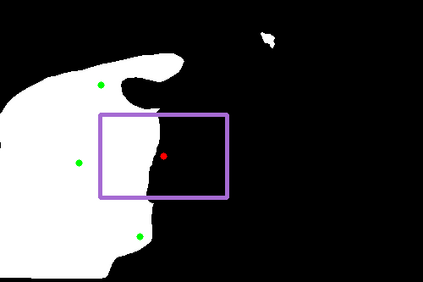

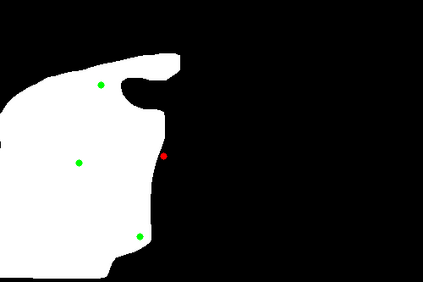

Interactive image segmentation aims to obtain a segmentation mask for an image from simple user annotations. In each round of interaction, the segmentation result from the previous round serves as feedback that guides the user's next annotation and provides dense prior information to the segmentation model, acting as a bridge between interactions. Existing methods either overlook the importance of this feedback or simply concatenate it with the original input, which underutilizes the feedback and increases the number of annotations required. To address this, we propose Focused and Collaborative Feedback Integration (FCFI), an approach that fully exploits the feedback for click-based interactive image segmentation. FCFI first focuses on a local area around the new click and corrects the feedback based on the similarities of high-level features. It then alternately and collaboratively updates the feedback and the deep features to integrate the feedback into the features. The efficacy and efficiency of FCFI were validated on four benchmarks: GrabCut, Berkeley, SBD, and DAVIS. Experimental results show that FCFI achieved new state-of-the-art performance with less computational overhead than previous methods. The source code is available at https://github.com/veizgyauzgyauz/FCFI.
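The focused correction step can be illustrated with a small toy sketch. It assumes the feedback is an H×W grid of foreground probabilities and that every pixel has a high-level feature vector. The square window, the cosine similarity, and the linear blending rule `s * label + (1 - s) * feedback` are illustrative assumptions, not the exact formulation used in FCFI, and the collaborative feedback/feature update is not shown. The names `cosine` and `focused_correction` are hypothetical.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length feature vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    if na == 0.0 or nb == 0.0:
        return 0.0
    return dot / (na * nb)


def focused_correction(feedback, features, click, is_positive, radius=1):
    """Return a corrected copy of `feedback` (H x W foreground probabilities).

    Hypothetical simplification of feedback correction: inside a
    (2*radius+1) x (2*radius+1) window centred on the new click, each pixel's
    probability is pulled toward the click label in proportion to how similar
    its feature vector is to the clicked pixel's feature vector.
    """
    h, w = len(feedback), len(feedback[0])
    r0, c0 = click
    label = 1.0 if is_positive else 0.0
    ref = features[r0][c0]
    # Copy so the previous round's feedback is left untouched;
    # pixels outside the focus window keep their old values.
    corrected = [row[:] for row in feedback]
    for r in range(max(0, r0 - radius), min(h, r0 + radius + 1)):
        for c in range(max(0, c0 - radius), min(w, c0 + radius + 1)):
            # Negative similarity is clamped to 0, i.e. no pull toward the label.
            s = max(0.0, cosine(features[r][c], ref))
            corrected[r][c] = s * label + (1.0 - s) * feedback[r][c]
    return corrected


if __name__ == "__main__":
    # Toy 3x3 image with 2-D features; [1, 0] stands for "object-like".
    feats = [
        [[1, 0], [1, 1], [0, 1]],
        [[1, 0], [1, 0], [0, 1]],
        [[0, 1], [0, 1], [0, 1]],
    ]
    prev = [[0.0] * 3 for _ in range(3)]  # previous round missed the object
    out = focused_correction(prev, feats, click=(1, 0), is_positive=True, radius=1)
    for row in out:
        print([round(v, 3) for v in row])
    # [1.0, 0.707, 0.0]
    # [1.0, 1.0, 0.0]
    # [0.0, 0.0, 0.0]
```

In this toy run, pixels whose features match the clicked pixel are flipped to foreground, a partially similar pixel moves part of the way, and dissimilar pixels or pixels outside the window keep their previous values. Restricting the update to a local window mirrors the abstract's idea of focusing on the area around the new click so that a single click does not disturb distant, already-correct regions.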

翻译:交互式图像分割旨在使用简单的用户注释为图像获取一个分割掩码。在每轮交互中,上一轮的分割结果作为反馈来引导用户的注释,并为分割模型提供密集的先验信息,有效地作为交互之间的桥梁。现有方法忽视了反馈的重要性,或者简单地将其与原始输入连接起来,导致反馈被低效使用,需要更多的注释。为了解决这个问题,我们提出了一种名为“聚焦协作反馈集成(FCFI)”的方法,来充分利用用于基于点击的交互式图像分割的反馈。FCFI首先聚焦于新的点击周围的局部区域,并根据高级特征的相似性对反馈进行校正。然后轮流地协作更新反馈和深度特征,将反馈集成到特征中。FCFI在四个基准测试中进行了有效性和效率验证,分别是GrabCut、Berkeley、SBD和DAVIS。实验结果表明,FCFI实现了新的最佳性能,并且比以前的方法需要较少的计算开销。源代码可在https://github.com/veizgyauzgyauz/FCFI中找到。