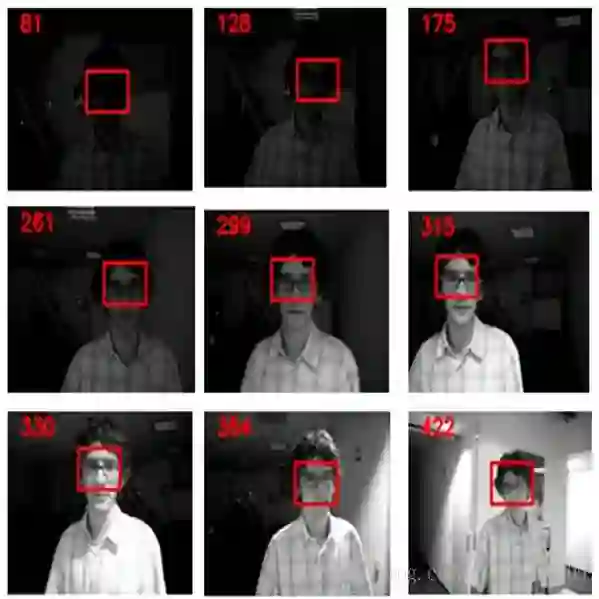

In this paper, we propose a new long video dataset (called Track Long and Prosper, or TLP) and benchmark for visual object tracking. The dataset consists of 50 videos from real-world scenarios, encompassing a duration of over 400 minutes (676K frames), making it more than 20 times larger in average duration per sequence and more than 8 times larger in total covered duration than existing generic datasets for visual tracking. The proposed dataset paves the way to suitably assess long-term tracking performance and to train better deep learning architectures (avoiding or reducing augmentation, which may not reflect realistic real-world behaviour). We benchmark 17 state-of-the-art trackers on the dataset and rank them according to tracking accuracy and runtime speed. We further present a thorough qualitative and quantitative evaluation highlighting the importance of the long-term aspect of tracking. Our most interesting observations are that (a) existing short-sequence benchmarks fail to bring out the inherent differences between tracking algorithms, which widen when tracking on long sequences, and (b) the accuracy of most trackers drops abruptly on challenging long sequences, suggesting the need for research efforts in the direction of long-term tracking.
