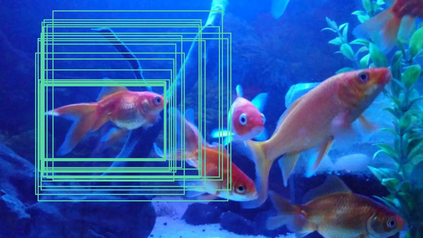

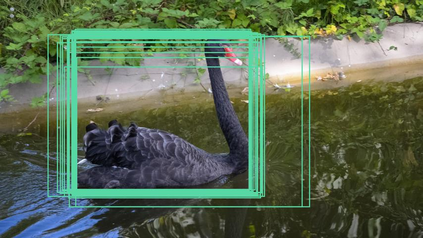

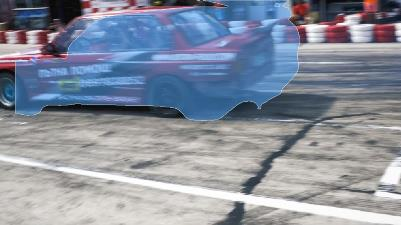

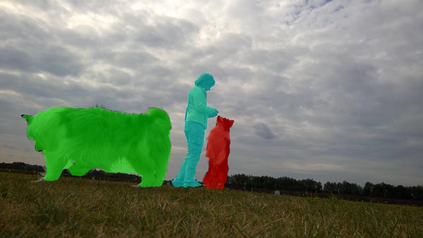

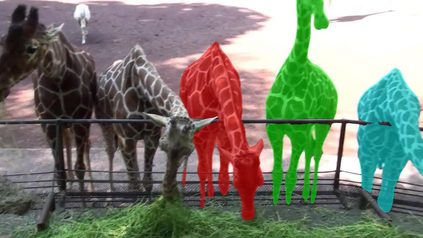

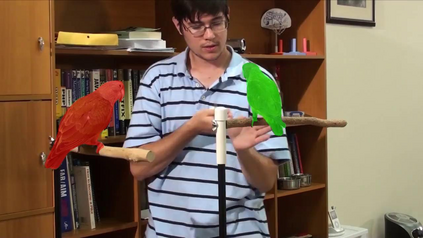

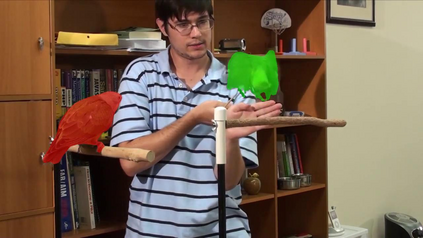

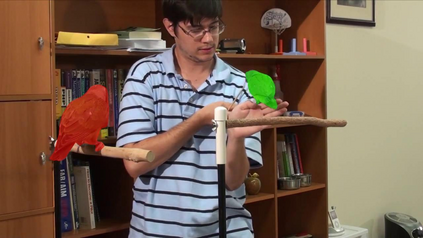

Video object segmentation (VOS) aims at pixel-level object tracking given only the annotations in the first frame. Due to the large visual variations of objects in video and the lack of training samples, it remains a difficult task despite the upsurging development of deep learning. Toward solving the VOS problem, we bring in several new insights by the proposed unified framework consisting of object proposal, tracking and segmentation components. The object proposal network transfers objectness information as generic knowledge into VOS; the tracking network identifies the target object from the proposals; and the segmentation network is performed based on the tracking results with a novel dynamic-reference based model adaptation scheme. Extensive experiments have been conducted on the DAVIS'17 dataset and the YouTube-VOS dataset, our method achieves the state-of-the-art performance on several video object segmentation benchmarks. We make the code publicly available at https://github.com/sydney0zq/PTSNet.

翻译:视频对象分解(VOS)的目的是,只对第一个框架的说明进行像素级物体跟踪。由于视频中对象的视觉变化巨大,而且缺乏培训样本,尽管深层学习的发展在不断扩展,这仍然是一项艰巨的任务。为了解决VOS问题,我们带来了由物体提议、跟踪和分解等组成部分组成的拟议统一框架的若干新见解。目标提议网络将目标性质信息作为一般知识传送到VOS;跟踪网络从建议中确定目标对象;分解网络根据基于新颖动态参考模型适应计划的跟踪结果进行。对DAVIS'17数据集和YouTube-VOS数据集进行了广泛的实验,我们的方法在若干视频对象分解基准上实现了最先进的表现。我们在https://github.com/sydney0q/PTSNet上公布了代码。我们将在https://github.sydney0q/PTSNet上公布。