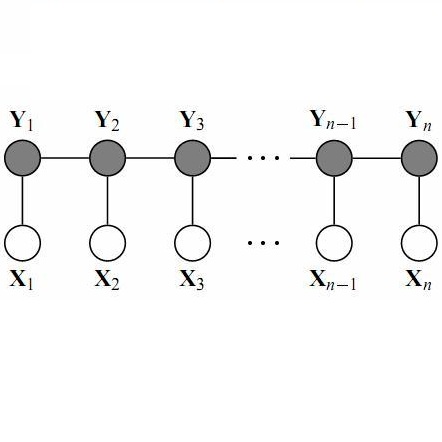

We investigate the problem of Chinese Grammatical Error Correction (CGEC) and present a new framework, Tail-to-Tail (**TtT**) non-autoregressive sequence prediction, to address the underlying challenges of CGEC. Because most tokens are correct and can be conveyed directly from source to target, and because error positions can be estimated and corrected from bidirectional context, we employ a BERT-initialized Transformer encoder as the backbone model for information modeling and conveying. Same-position substitution alone cannot handle variable-length corrections, which jointly require several operations such as substitution, deletion, insertion, and local paraphrasing. We therefore stack a Conditional Random Field (CRF) layer on the upper tail to conduct non-autoregressive sequence prediction by modeling token dependencies. Since most tokens are correct and easy to predict or convey to the target, the model may suffer from severe class imbalance. To alleviate this problem, we integrate focal loss penalty strategies into the loss functions. Moreover, in addition to the typical fixed-length error correction datasets, we construct a variable-length corpus for experiments. Experimental results on standard datasets, especially the variable-length ones, demonstrate the effectiveness of TtT in terms of sentence-level Accuracy, Precision, Recall, and F1-Measure on the error Detection and Correction tasks.
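To make the class imbalance point concrete, here is a minimal sketch of how a focal penalty down-weights tokens that are already predicted with high confidence. It assumes the standard focal loss form, FL(p) = -(1 - p)^gamma * log(p); the abstract does not give the exact formulation used in TtT, so the function names, the `gamma` default, and the example probabilities are illustrative assumptions only.

```python
import math


def cross_entropy(p_true: float) -> float:
    """Plain negative log-likelihood of the gold token."""
    return -math.log(p_true)


def focal_loss(p_true: float, gamma: float = 2.0) -> float:
    """Standard focal loss for one token (assumed form, not confirmed as
    the paper's). p_true is the probability assigned to the gold token;
    gamma = 0 recovers plain cross-entropy."""
    return -((1.0 - p_true) ** gamma) * math.log(p_true)


# Hypothetical probabilities: an "easy" token copied from the source with
# high confidence, and a "hard" token at an error position.
for name, p in (("easy", 0.95), ("hard", 0.30)):
    print(f"{name}: CE={cross_entropy(p):.4f}  focal={focal_loss(p):.4f}")
```

With `gamma = 2`, the loss of the confidently copied token is scaled by (1 - 0.95)^2, while the uncertain token keeps roughly half of its cross-entropy, so the rare error positions contribute relatively more to the total.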
