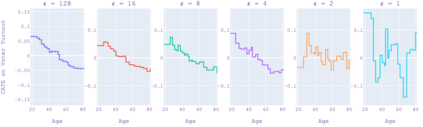

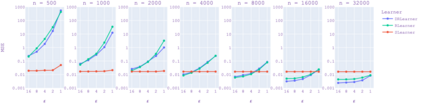

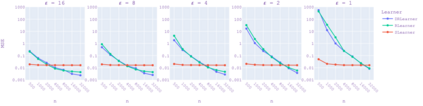

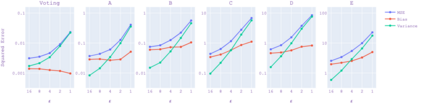

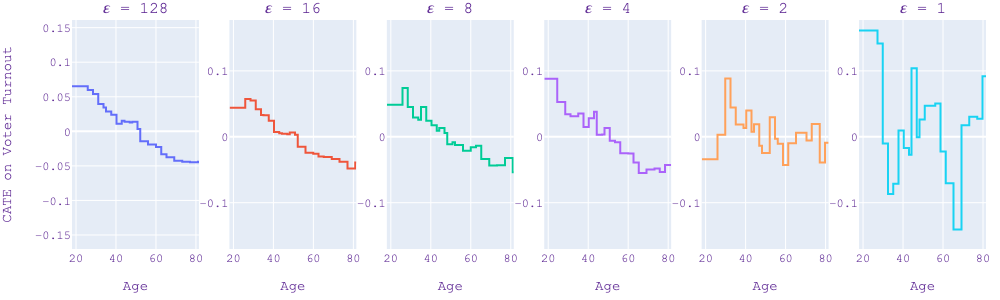

Estimating heterogeneous treatment effects in domains such as healthcare or social science often involves sensitive data for which protecting privacy is important. We introduce a general meta-algorithm for estimating conditional average treatment effects (CATE) with differential privacy (DP) guarantees. Our meta-algorithm works with simple, single-stage CATE estimators such as the S-learner as well as with more complex multi-stage estimators such as the DR-learner and R-learner. We obtain a tight privacy analysis by exploiting the sample splitting in our meta-algorithm together with the parallel composition property of differential privacy. In this paper, we implement our approach using differentially private explainable boosting machines (DP-EBMs) as the base learner. DP-EBMs are interpretable, high-accuracy models with privacy guarantees, which allow us to directly observe the impact of DP noise on the learned causal model. Our experiments show that multi-stage CATE estimators incur a larger accuracy loss than single-stage CATE or average treatment effect (ATE) estimators, and that most of the accuracy loss from differential privacy is due to increased variance rather than biased estimates of treatment effects.
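
To make the privacy-accounting idea concrete, here is a minimal sketch, under stated assumptions, of how sample splitting interacts with composition. It assumes each model fit is pure ε-DP with respect to the rows it is trained on and uses basic sequential composition; the fold layout, the helper names `split_disjoint` and `pipeline_epsilon`, and the per-stage budgets are hypothetical illustrations, not the paper's exact algorithm or the DP-EBM accounting (which may use a different DP variant).

```python
import random
from typing import Dict, List, Sequence, Tuple


def split_disjoint(n_rows: int, n_folds: int, seed: int = 0) -> List[List[int]]:
    """Randomly partition row indices 0..n_rows-1 into n_folds disjoint folds."""
    if n_folds < 1 or n_rows < n_folds:
        raise ValueError("need at least one row per fold")
    idx = list(range(n_rows))
    random.Random(seed).shuffle(idx)
    # Striding over the shuffled list gives folds whose sizes differ by at most 1.
    return [idx[k::n_folds] for k in range(n_folds)]


def pipeline_epsilon(fits: Sequence[Tuple[int, float]]) -> float:
    """Overall pure-DP epsilon of a pipeline of (fold_id, eps) model fits.

    Each fit is assumed eps-DP with respect to the rows of its own fold.
    Fits on the same fold compose sequentially (epsilons add); because a row
    belongs to exactly one fold, disjoint folds compose in parallel (take the
    max). Later stages may consume earlier stages' DP outputs; that is
    post-processing and costs no extra budget.
    """
    if not fits:
        raise ValueError("pipeline has no fits")
    per_fold: Dict[int, float] = {}
    for fold, eps in fits:
        if eps <= 0:
            raise ValueError("epsilon must be positive")
        per_fold[fold] = per_fold.get(fold, 0.0) + eps
    return max(per_fold.values())


if __name__ == "__main__":
    folds = split_disjoint(n_rows=1000, n_folds=3, seed=42)
    # Hypothetical DR-learner layout: outcome model on fold 0, propensity
    # model on fold 1, pseudo-outcome regression on fold 2, each at eps=1.
    split = [(0, 1.0), (1, 1.0), (2, 1.0)]
    # The same three fits, all trained on the full data (one shared fold).
    shared = [(0, 1.0), (0, 1.0), (0, 1.0)]
    # S-learner: a single model fit on all rows.
    s_learner = [(0, 1.0)]
    print(pipeline_epsilon(split))      # 1.0
    print(pipeline_epsilon(shared))     # 3.0
    print(pipeline_epsilon(s_learner))  # 1.0
    print([len(f) for f in folds])      # [334, 333, 333]
```

With disjoint folds, the three-stage pipeline costs the same ε as a single S-learner fit, whereas reusing all rows in every stage sums the budgets. The price of this accounting is that each model is trained on only a fraction of the rows.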

翻译:估计保健或社会科学等领域的不同待遇影响往往涉及保护隐私十分重要的敏感数据。我们引入了一种通用的元数据,用于估算有条件平均治疗效果(CATE),并有不同的隐私保障。我们的元数据可以使用简单、单阶段的CATE估计器(如S-learner)和更为复杂的多阶段估计器(如DR和R-learner)发挥作用。我们利用我们元数据与差异隐私的平行构成属性的样本分割,进行严格的隐私分析。我们在本文中采用使用DP-EBMS作为基本学习者的方法。DP-EBMS是可解释的,具有隐私保障的高准确性模型,使我们能够直接观察DP噪音对已知因果模型的影响。我们的实验表明,多阶段CATE估计器的准确性损失大于单阶段CATE或ATE-learner,而差异隐私的准确性损失大多是由于差异性评估结果的增加,而不是有偏差的估计数。