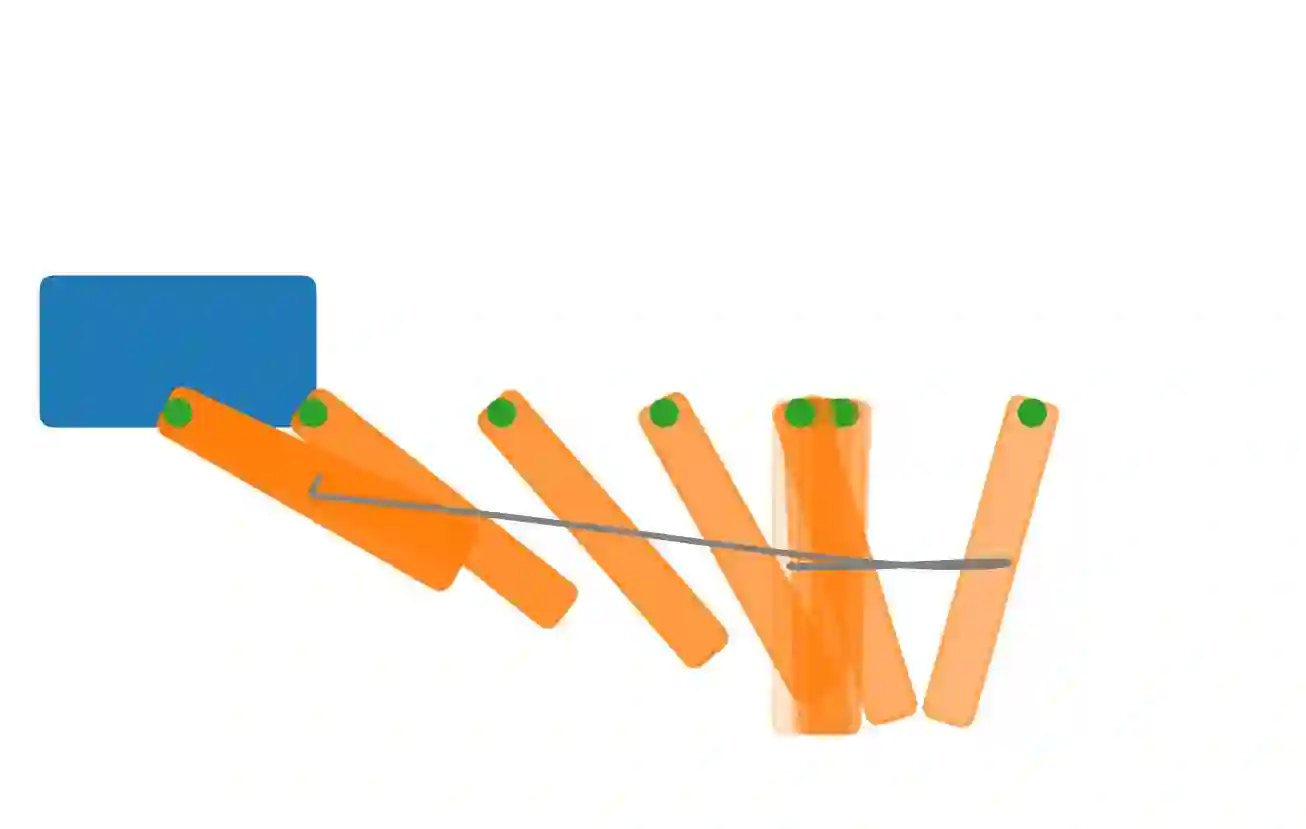

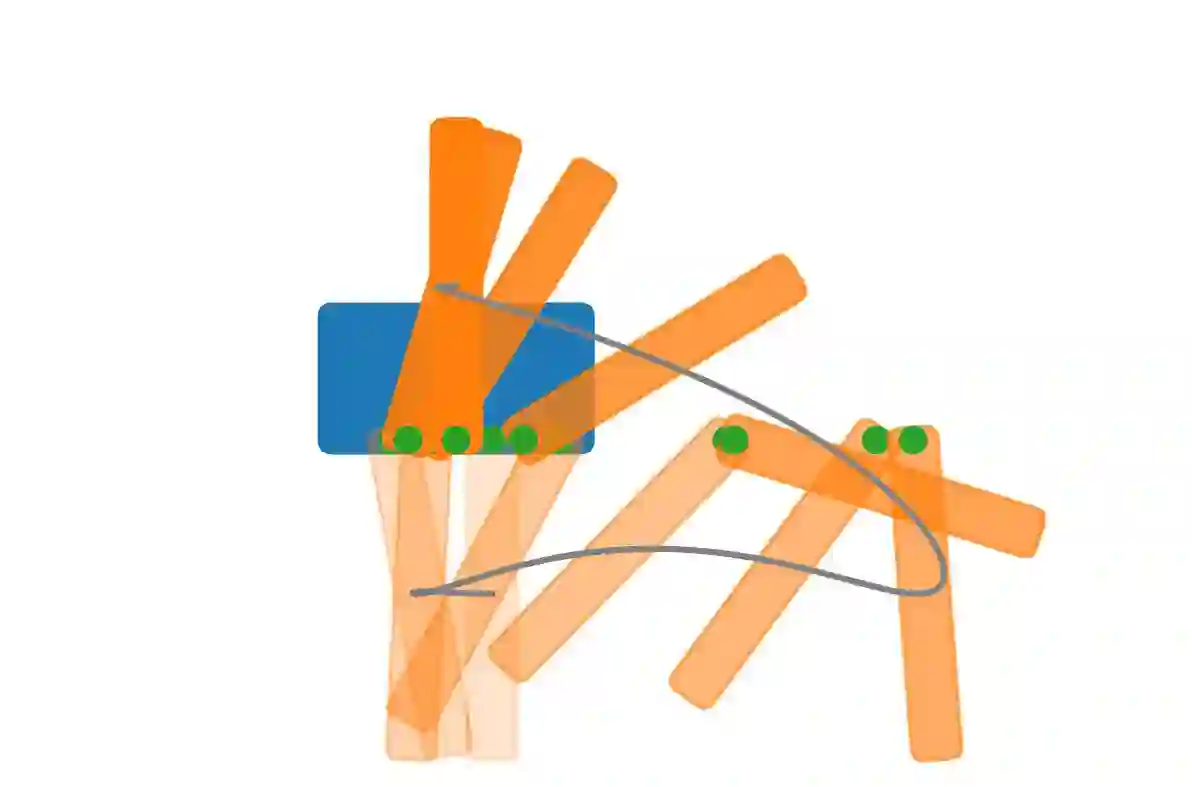

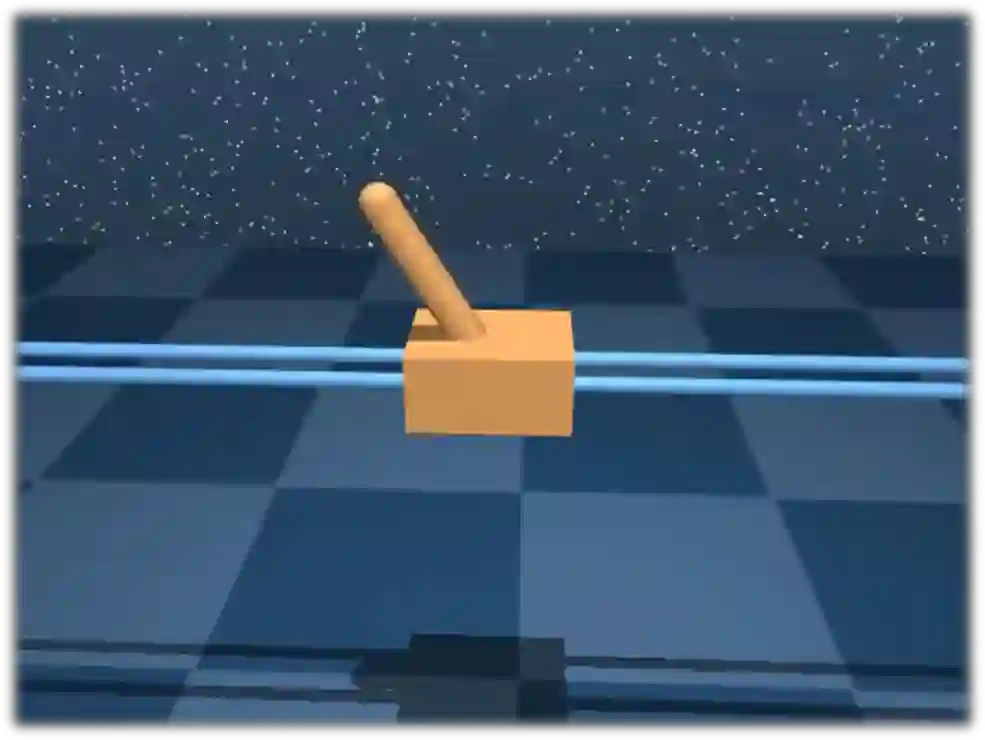

The iterative linear quadratic regulator (iLQR) has gained wide popularity for trajectory optimization problems with nonlinear system models. However, as a model-based shooting method, it relies heavily on an accurate system model to update the optimal control actions and the trajectory obtained by forward integration, which makes it vulnerable to inevitable model inaccuracies. Recently, learning-based methods for optimal control have made significant progress in handling unknown system models, particularly when the system has complex interactions with the environment. Yet a deep neural network is normally required to fit a substantial amount of sampled data. In this work, we present Neural-iLQR, a learning-aided shooting method over the unconstrained control space, in which a neural network with a simple structure represents the local system model. In this framework, trajectory optimization is achieved by iteratively refining the optimal policy and the neural network together, without relying on prior knowledge of the system model. Through comprehensive evaluations on two illustrative control tasks, the proposed method is shown to significantly outperform conventional iLQR in the presence of inaccuracies in the system model.
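To make the alternating structure concrete, the following is a minimal sketch and not the authors' implementation. It assumes a toy pendulum-like system, quadratic costs, and a least-squares affine model that stands in for the neural network. All names (`true_step`, `fit_affine_model`, `optimize`) and all constants are hypothetical. Each iteration samples the real system near the current trajectory, refits the local model, runs an iLQR-style backward pass with the model's Jacobians, and accepts a forward pass only if the cost on the real system decreases.

```python
import numpy as np

DT, HORIZON = 0.05, 40
GOAL = np.array([1.0, 0.0])
Q = np.diag([1.0, 0.1])     # running state cost weight (assumed)
R = np.array([[0.01]])      # control cost weight (assumed)
QF = np.diag([50.0, 5.0])   # terminal cost weight (assumed)


def true_step(x, u):
    """Toy 'real' system; the optimizer only samples it, never differentiates it."""
    theta, omega = x
    return np.array([theta + DT * omega, omega + DT * (-np.sin(theta) + u[0])])


def rollout(x0, us):
    xs = [x0]
    for u in us:
        xs.append(true_step(xs[-1], u))
    return np.array(xs)


def total_cost(xs, us):
    dx, dxf = xs[:-1] - GOAL, xs[-1] - GOAL
    run = 0.5 * np.einsum("ti,ij,tj->", dx, Q, dx)
    ctrl = 0.5 * np.einsum("ti,ij,tj->", us, R, us)
    return run + ctrl + 0.5 * dxf @ QF @ dxf


def fit_affine_model(xs, us, rng, n_samples=200, noise=0.1):
    """Fit x' ~ A x + B u + c by least squares (stand-in for the neural network)."""
    idx = rng.integers(0, len(us), n_samples)
    X = xs[idx] + noise * rng.standard_normal((n_samples, 2))
    U = us[idx] + noise * rng.standard_normal((n_samples, 1))
    Y = np.array([true_step(x, u) for x, u in zip(X, U)])
    features = np.hstack([X, U, np.ones((n_samples, 1))])
    W, *_ = np.linalg.lstsq(features, Y, rcond=None)
    return W[:2].T, W[2:3].T  # Jacobians A (2x2) and B (2x1)


def backward_pass(xs, us, A, B, reg=1e-6):
    """iLQR backward recursion using the learned Jacobians."""
    Vx, Vxx = QF @ (xs[-1] - GOAL), QF.copy()
    ks, Ks = [], []
    for t in reversed(range(len(us))):
        Qx = Q @ (xs[t] - GOAL) + A.T @ Vx
        Qu = R @ us[t] + B.T @ Vx
        Qxx = Q + A.T @ Vxx @ A
        Quu = R + B.T @ Vxx @ B + reg * np.eye(1)
        Qux = B.T @ Vxx @ A
        k = -np.linalg.solve(Quu, Qu)    # feedforward term
        K = -np.linalg.solve(Quu, Qux)   # feedback gain
        Vx = Qx + K.T @ Quu @ k + K.T @ Qu + Qux.T @ k
        Vxx = Qxx + K.T @ Quu @ K + K.T @ Qux + Qux.T @ K
        ks.append(k)
        Ks.append(K)
    return ks[::-1], Ks[::-1]


def forward_pass(xs, us, ks, Ks, alpha):
    """Apply the updated policy on the real system."""
    x, new_xs, new_us = xs[0], [xs[0]], []
    for t in range(len(us)):
        u = us[t] + alpha * ks[t] + Ks[t] @ (x - xs[t])
        x = true_step(x, u)
        new_us.append(u)
        new_xs.append(x)
    return np.array(new_xs), np.array(new_us)


def optimize(n_iters=15, seed=0):
    rng = np.random.default_rng(seed)
    us = np.zeros((HORIZON, 1))
    xs = rollout(np.zeros(2), us)
    costs = [total_cost(xs, us)]
    for _ in range(n_iters):
        A, B = fit_affine_model(xs, us, rng)   # refine the model
        ks, Ks = backward_pass(xs, us, A, B)   # refine the policy
        for alpha in (1.0, 0.5, 0.25, 0.1, 0.03):
            new_xs, new_us = forward_pass(xs, us, ks, Ks, alpha)
            if total_cost(new_xs, new_us) < costs[-1]:
                xs, us = new_xs, new_us        # accept only real improvements
                break
        costs.append(total_cost(xs, us))
    return xs, us, costs


if __name__ == "__main__":
    _, _, costs = optimize()
    print("initial cost:", costs[0], "final cost:", costs[-1])
```

Because the line search evaluates candidates on the sampled system rather than on the fitted model, an inaccurate model can at worst stall an iteration; it cannot increase the recorded cost. Replacing `fit_affine_model` with a small network that returns its own Jacobians would bring the sketch closer to the learning-aided setting described above.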
