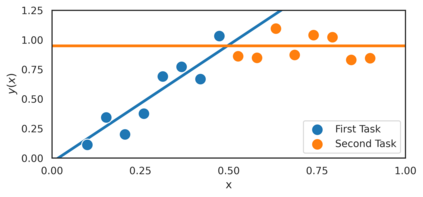

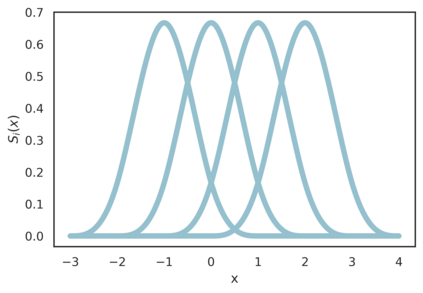

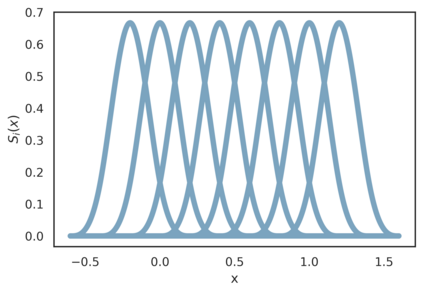

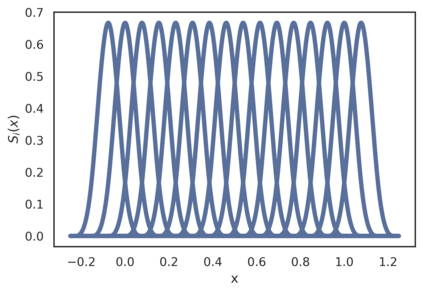

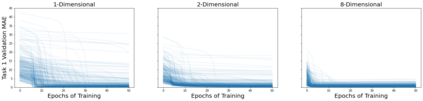

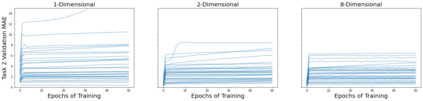

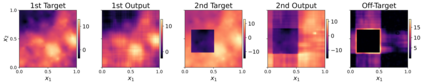

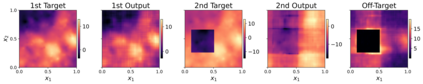

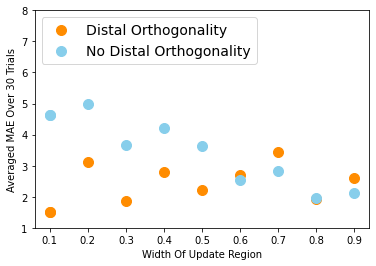

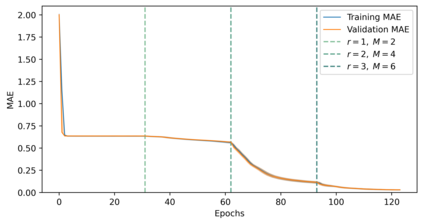

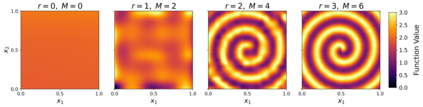

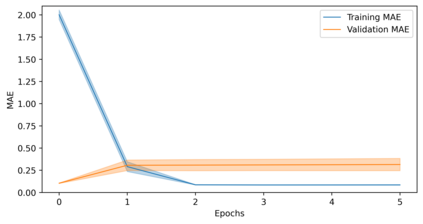

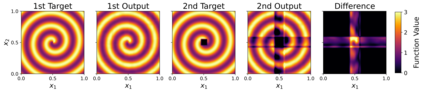

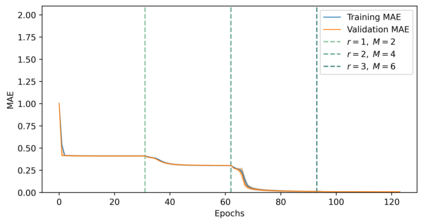

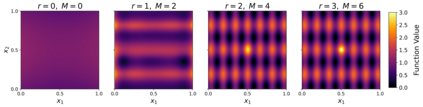

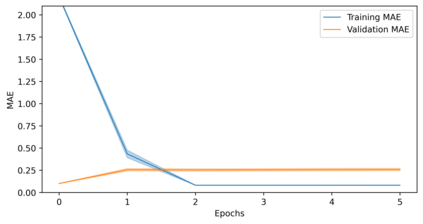

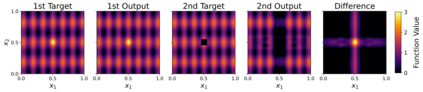

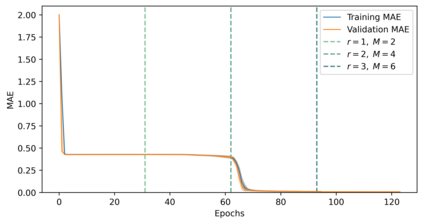

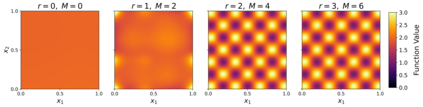

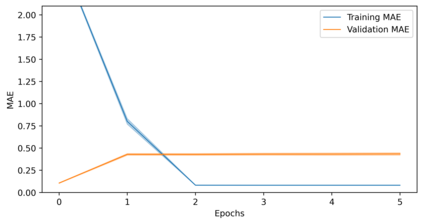

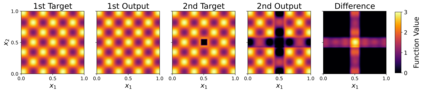

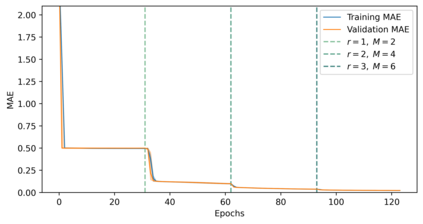

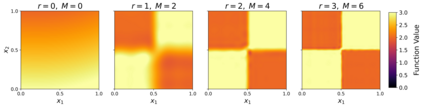

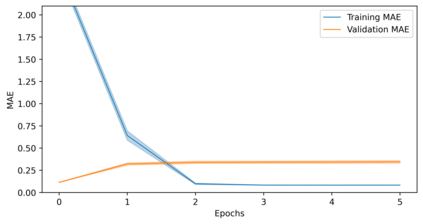

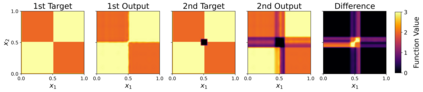

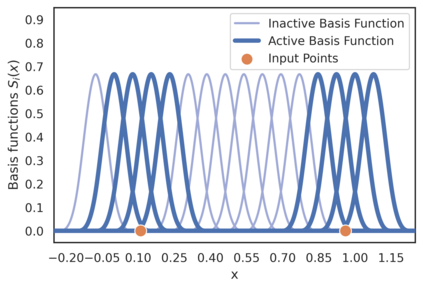

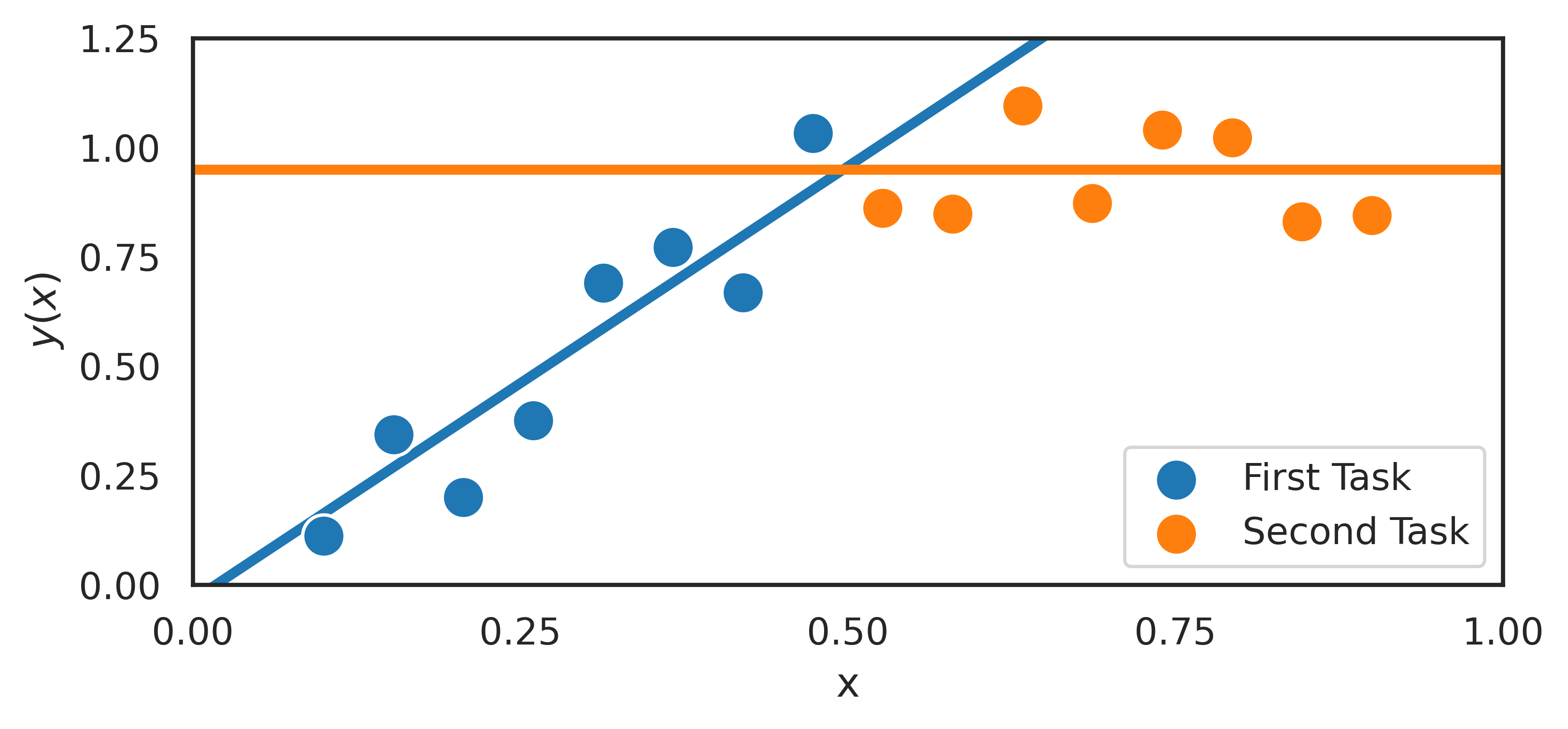

Artificial neural networks (ANNs), despite their universal function approximation capability and practical success, are subject to catastrophic forgetting: the abrupt unlearning of a previous task when a new task is learned. Catastrophic forgetting is an emergent phenomenon that hinders continual learning. Existing universal function approximation theorems for ANNs guarantee function approximation ability but do not predict catastrophic forgetting. This paper presents a novel universal approximation theorem for multi-variable functions that uses only single-variable functions and exponential functions. Furthermore, we present ATLAS, a novel ANN architecture based on the new theorem. ATLAS is shown to be a universal function approximator capable of some memory retention and continual learning. The memory of ATLAS is imperfect, with some off-target effects during continual learning, but it is well-behaved and predictable. An efficient implementation of ATLAS is provided, and experiments are conducted to evaluate both its function approximation and its memory retention capabilities.
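To make the notion of catastrophic forgetting concrete, the following is a minimal sketch under stated assumptions. It is not ATLAS and does not reproduce any experiment from the paper. It assumes a two-parameter linear model trained by plain gradient descent, and two hypothetical tasks (`task_a`, `task_b`) defined on disjoint input intervals. Because both parameters are shared across the whole input range, fitting the second task overwrites what was learned for the first.

```python
# Toy illustration only: a two-parameter linear model, not the ATLAS architecture.

def mse(params, data):
    """Mean squared error of the line y = w * x + b on a list of (x, y) pairs."""
    w, b = params
    return sum((w * x + b - y) ** 2 for x, y in data) / len(data)

def train(params, data, lr=0.1, steps=5000):
    """Full-batch gradient descent on the MSE; returns the updated (w, b)."""
    w, b = params
    n = len(data)
    for _ in range(steps):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b

# Hypothetical tasks (assumptions for this sketch): each is a different line
# sampled on its own input interval.
task_a = [(x, 2.0 * x) for x in (0.0, 0.25, 0.5, 0.75, 1.0)]
task_b = [(x, 5.0 - x) for x in (2.0, 2.25, 2.5, 2.75, 3.0)]

# Learn task A first, then continue training on task B only.
params = train((0.0, 0.0), task_a)
loss_a_before = mse(params, task_a)

params = train(params, task_b)
loss_a_after = mse(params, task_a)
loss_b_after = mse(params, task_b)

print("task A error after learning A:", loss_a_before)
print("task A error after learning B:", loss_a_after)
print("task B error after learning B:", loss_b_after)
```

In this toy setting the error on task A is near zero after the first phase and rises well above 1 once task B has been learned, even though task B's inputs never overlap with task A's. This is the kind of abrupt unlearning that an architecture with memory retention aims to limit.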
