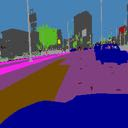

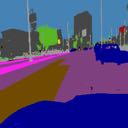

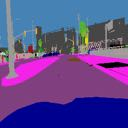

We introduce a data-driven approach for unsupervised video retargeting that translates content from one domain to another while preserving the style native to a domain, i.e., if contents of John Oliver's speech were to be transferred to Stephen Colbert, then the generated content/speech should be in Stephen Colbert's style. Our approach combines both spatial and temporal information along with adversarial losses for content translation and style preservation. In this work, we first study the advantages of using spatiotemporal constraints over spatial constraints for effective retargeting. We then demonstrate the proposed approach for the problems where information in both space and time matters such as face-to-face translation, flower-to-flower, wind and cloud synthesis, sunrise and sunset.

翻译:我们采用了一种数据驱动方法,用于未经监督的视频重新定位,将内容从一个领域翻译到另一个领域,同时保留原创的风格,即如果将约翰·奥利弗讲话的内容转移到斯蒂芬·科尔伯特的风格,那么所产生的内容/声音应采用斯蒂芬·科尔伯特的风格。我们的方法结合了空间和时间信息以及内容翻译和风格保存方面的对抗性损失。我们首先研究了利用空间时空限制相对于空间限制的优势,以便有效地重新定位。我们随后展示了在时空两方面信息的拟议方法,如面对面翻译、花花对花、风和云合成、日出和日落等问题。