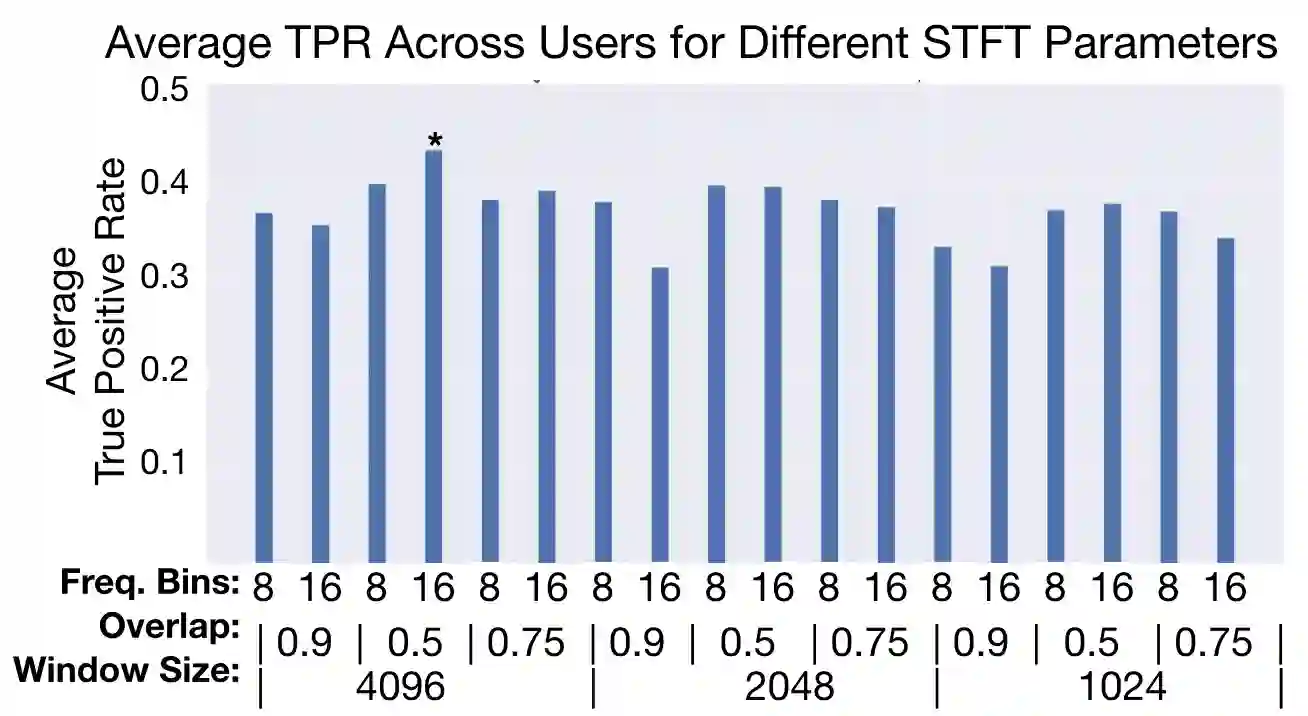

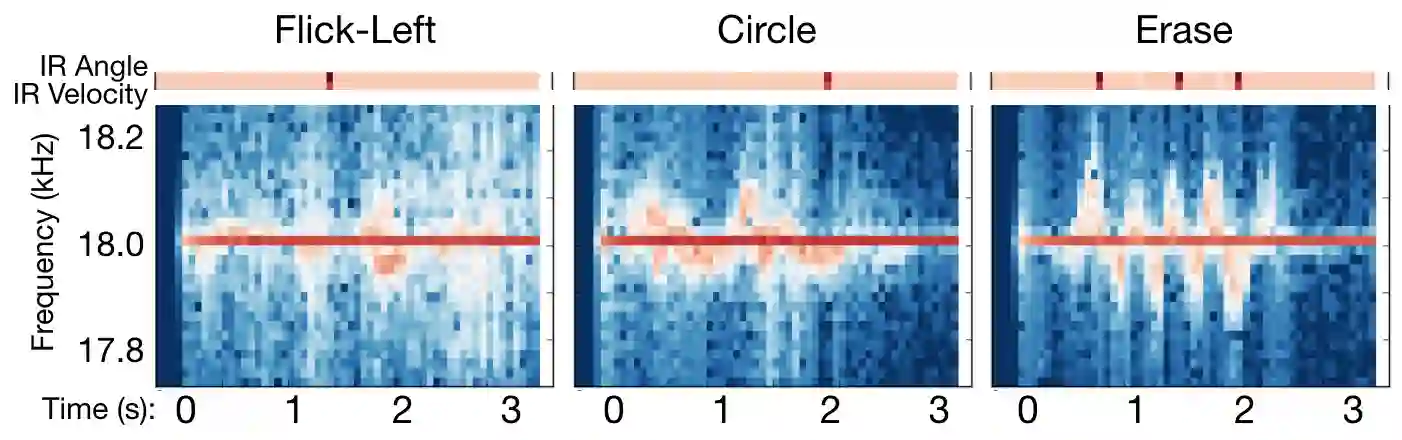

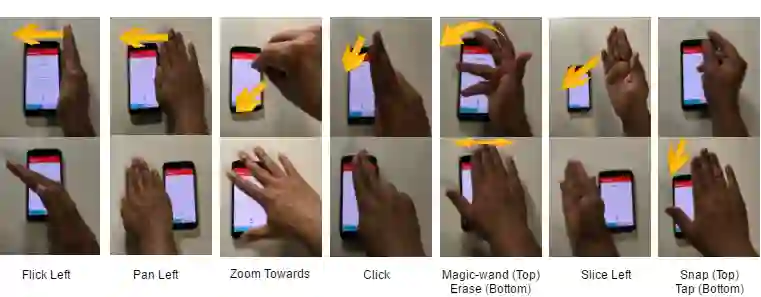

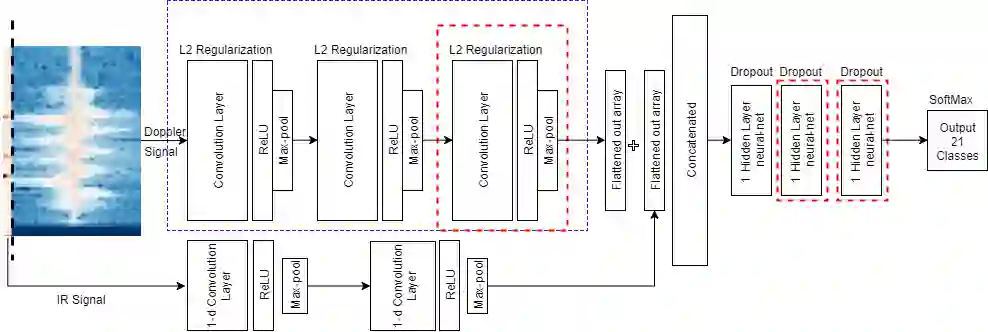

We introduce AirWare, an in-air hand-gesture recognition system that uses the speaker and microphone already embedded in most electronic devices, together with embedded infrared proximity sensors. Gestures identified by AirWare are performed in the air above a touchscreen or a mobile phone. AirWare uses convolutional neural networks to classify a large vocabulary of hand gestures from multi-modal audio Doppler signatures and infrared (IR) sensor information. Unlike other systems, which use high-frequency Doppler radars or depth cameras to uniquely identify in-air gestures, AirWare does not require any external sensors. In our analysis, we use openly available APIs to interface with the audio and proximity sensors of the Samsung Galaxy S5 for data collection. We find that AirWare is not reliable enough for a deployable interaction system when classifying a set of 21 gestures, with an average true positive rate of only 50.5% per gesture. To improve performance, we train AirWare to identify subsets of the 21-gesture vocabulary based on possible usage scenarios. We find that AirWare can identify three gesture sets of 4–7 gestures each with an average true positive rate greater than 80%; together, these sets comprise a vocabulary of 16 unique in-air gestures.
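
The abstract does not say how the audio Doppler signature is computed. As a minimal sketch under stated assumptions: the speaker plays a near-inaudible pilot tone at f0, and a hand moving toward the device at speed v reflects it shifted by approximately Δf = 2vf0/c (valid for v much smaller than the speed of sound c), so energy appears in sidebands around f0. The 18 kHz tone, 48 kHz sample rate, 0.1 s window, and the helper names `doppler_shift`, `tone_magnitude`, and `doppler_profile` are hypothetical choices for illustration, not AirWare's actual parameters or feature pipeline.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s, air at roughly 20 degrees C


def doppler_shift(v, f0, c=SPEED_OF_SOUND):
    """Two-way Doppler shift (Hz) of a tone f0 reflected by a hand moving
    toward the device at v m/s (approximation valid for v << c)."""
    return 2.0 * v * f0 / c


def tone_magnitude(samples, fs, freq):
    """Normalized magnitude of the single-frequency DFT of `samples` at `freq` Hz."""
    re = im = 0.0
    for n, x in enumerate(samples):
        phase = 2.0 * math.pi * freq * n / fs
        re += x * math.cos(phase)
        im -= x * math.sin(phase)
    return math.hypot(re, im) / len(samples)


def doppler_profile(samples, fs, f0, max_offset=100, step=10):
    """Sideband magnitudes at f0 + k Hz for k in [-max_offset, max_offset], k != 0.
    Positive offsets indicate motion toward the device, negative offsets away."""
    offsets = [k for k in range(-max_offset, max_offset + 1, step) if k != 0]
    return {k: tone_magnitude(samples, fs, f0 + k) for k in offsets}


# Usage: simulate one 0.1 s window (10 Hz resolution) containing the direct
# pilot tone plus a weaker echo from a hand approaching at 0.5 m/s.
FS = 48_000    # assumed sample rate (Hz)
F0 = 18_000.0  # assumed near-inaudible pilot tone (Hz)
N = 4_800      # window length in samples (N / FS = 0.1 s)

shift = doppler_shift(0.5, F0)
signal = [
    math.cos(2 * math.pi * F0 * n / FS)
    + 0.2 * math.cos(2 * math.pi * (F0 + shift) * n / FS)
    for n in range(N)
]
profile = doppler_profile(signal, FS, F0)
peak = max(profile, key=profile.get)
print(round(shift, 1), peak)  # prints: 52.5 50
```

Probing at multiples of the 10 Hz window resolution keeps the direct tone from leaking into the sidebands, so the peak at +50 Hz is the simulated 52.5 Hz echo quantized to that resolution. A sequence of such sideband vectors over consecutive windows is one plausible input for a CNN classifier; a practical implementation would more likely compute an FFT-based spectrogram than loop over individual frequencies.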
