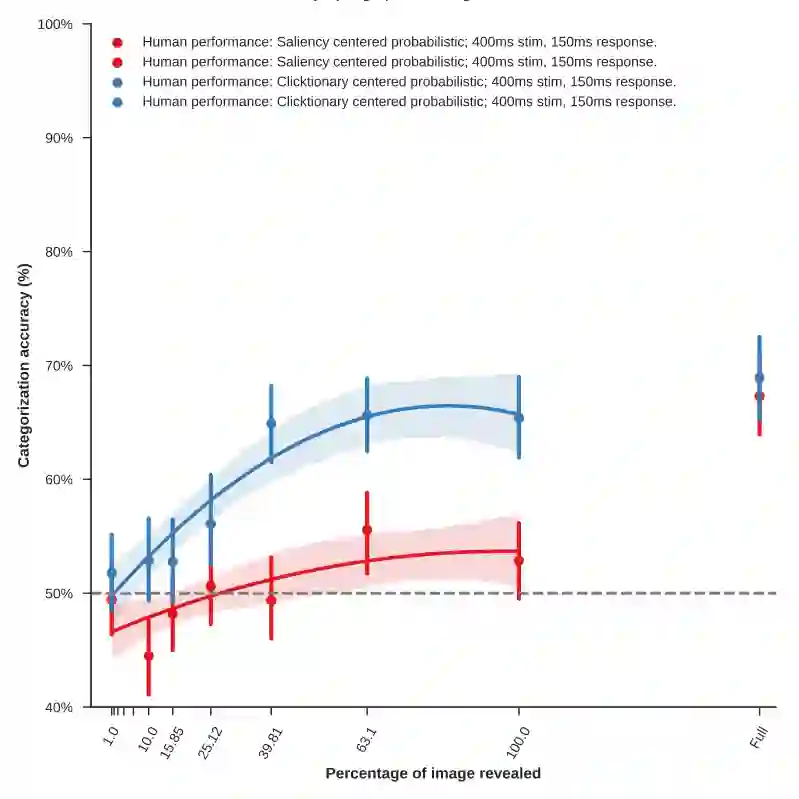

State-of-the-art deep convolutional networks (DCNs) such as squeeze-and-excitation (SE) residual networks implement a form of attention, also known as contextual guidance, that is derived from global image features. Here, we explore a complementary form of attention, known as visual saliency, that is derived from local image features. We extend the SE module with a novel global-and-local attention (GALA) module that combines both forms of attention, resulting in state-of-the-art accuracy on ILSVRC. We further describe ClickMe.ai, a large-scale online experiment in which human participants identify diagnostic image regions; these data are then used to co-train a GALA network. Adding humans-in-the-loop is shown to significantly improve network accuracy, while also yielding visual features that are more interpretable and more similar to those used by human observers.
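The abstract does not give the module's equations, so the NumPy sketch below only illustrates one plausible way to combine the two forms of attention. It assumes (i) an SE-style global branch (channel-wise average pooling followed by a bottleneck MLP) that yields one weight per channel, (ii) a local branch of two 1x1 convolutions that yields one saliency value per pixel, and (iii) an additive-plus-multiplicative combination with per-channel weights `a` and `m`, squashed by a sigmoid. It also sketches a hypothetical co-training objective that adds to the cross-entropy a penalty on the distance between the normalised attention map and a human ClickMe map `M`. All names (`init_params`, `gala_attention`, `clickme_loss`, `lam`) are illustrative assumptions, not the authors' implementation.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def relu(x):
    return np.maximum(x, 0.0)

def init_params(C, r=4, seed=0):
    """Hypothetical parameters for a C-channel input with bottleneck ratio r."""
    rng = np.random.default_rng(seed)
    Cr = max(C // r, 1)
    return {
        "W_g1": rng.normal(0, 0.1, (Cr, C)),  # global branch: squeeze
        "W_g2": rng.normal(0, 0.1, (C, Cr)),  # global branch: excite
        "W_s1": rng.normal(0, 0.1, (Cr, C)),  # local branch: 1x1 conv, C -> Cr
        "w_s2": rng.normal(0, 0.1, Cr),       # local branch: 1x1 conv, Cr -> 1
        "a": np.ones(C),                      # assumed additive mixing weights
        "m": np.ones(C),                      # assumed multiplicative mixing weights
    }

def gala_attention(U, p):
    """U has shape (C, H, W); returns (attended features, attention volume)."""
    # Global ("what") attention: pool each channel, then a bottleneck MLP -> (C,)
    z = U.mean(axis=(1, 2))
    g = p["W_g2"] @ relu(p["W_g1"] @ z)
    # Local ("where") attention: 1x1 convs are per-pixel linear maps -> (H, W)
    h = relu(np.einsum("kc,chw->khw", p["W_s1"], U))
    s = np.einsum("k,khw->hw", p["w_s2"], h)
    # Broadcast (C,1,1) and (1,H,W) to (C,H,W), mix additively and multiplicatively
    G, S = g[:, None, None], s[None, :, :]
    A = sigmoid(p["a"][:, None, None] * (G + S) + p["m"][:, None, None] * (G * S))
    return U * A, A

def clickme_loss(logits, label, A, M, lam=1.0):
    """Assumed objective: cross-entropy plus an L2 penalty between unit-normalised
    channel-averaged attention and a human map M of shape (H, W)."""
    shifted = logits - logits.max()
    ce = -(shifted[label] - np.log(np.exp(shifted).sum()))
    a = A.mean(axis=0).ravel()
    a = a / (np.linalg.norm(a) + 1e-8)
    m = M.ravel() / (np.linalg.norm(M) + 1e-8)
    return ce + lam * np.linalg.norm(a - m)

# Usage on random data
rng = np.random.default_rng(1)
U = rng.normal(size=(8, 5, 5))
out, A = gala_attention(U, init_params(8))
logits = rng.normal(size=10)
M = rng.random((5, 5))
loss = clickme_loss(logits, 3, A, M)
print(out.shape, A.shape)  # (8, 5, 5) (8, 5, 5)
```

Because the global term varies only across channels and the local term only across pixels, broadcasting their sum and product yields a full channel-by-position attention volume, which is what lets a single gate express both "what" and "where" in this sketch.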
