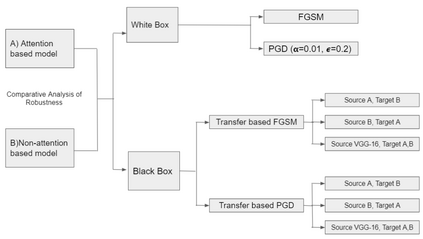

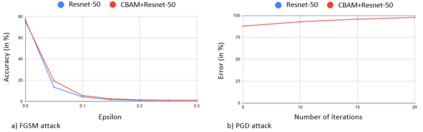

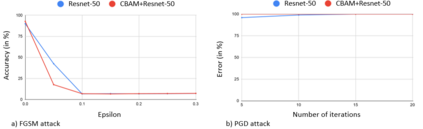

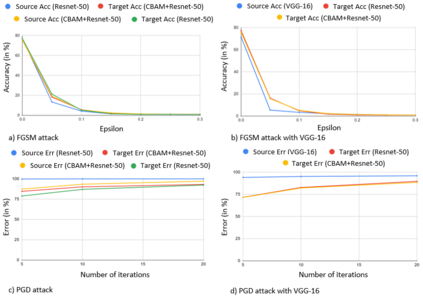

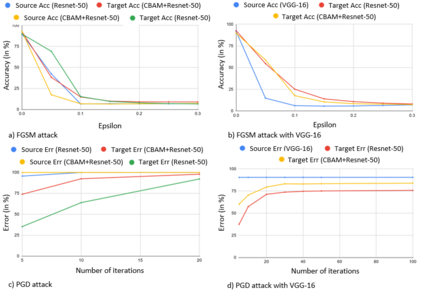

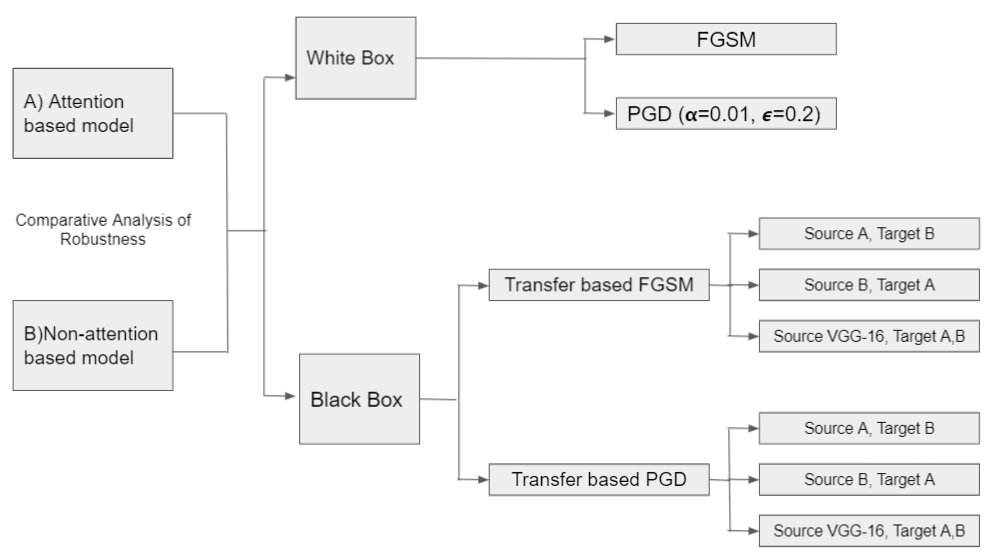

Adversarial attacks against deep learning models have gained significant attention, and recent works have proposed both explanations for the existence of adversarial examples and techniques for defending models against these attacks. In computer vision, attention has been used to focus learning on important features and has led to improved accuracy. Recently, models with attention mechanisms have been proposed to enhance adversarial robustness. In this context, this work aims at a general understanding of the impact of attention on adversarial robustness. It presents a comparative study of the adversarial robustness of non-attention and attention-based image classification models trained on the CIFAR-10, CIFAR-100, and Fashion MNIST datasets under popular white-box and black-box attacks. The experimental results show that the robustness of attention-based models may depend on the dataset used, i.e., on the number of classes involved in the classification. In contrast to datasets with fewer classes, attention-based models are observed to be more robust on datasets with a larger number of classes.
