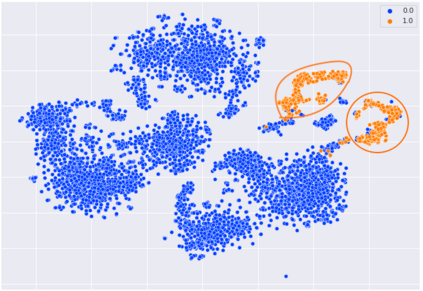

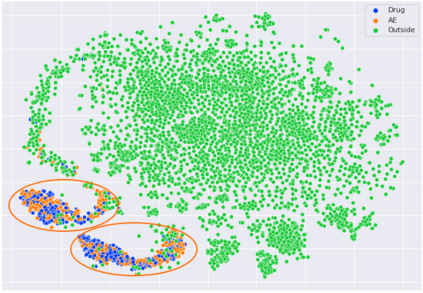

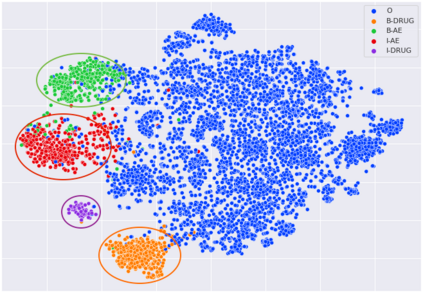

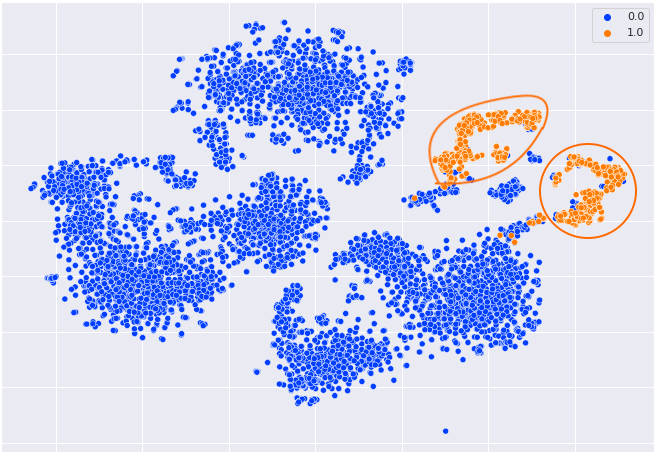

Though language model text embeddings have revolutionized NLP research, their ability to capture high-level semantic information, such as relations between entities in text, is limited. In this paper, we propose a novel contrastive learning framework that trains sentence embeddings to encode the relations in a graph structure. Given a sentence (unstructured text) and its graph, we use contrastive learning to impose relation-related structure on the token-level representations of the sentence obtained with a CharacterBERT (El Boukkouri et al., 2020) model. The resulting relation-aware sentence embeddings achieve state-of-the-art results on the relation extraction task using only a simple k-nearest-neighbor (KNN) classifier, which demonstrates the effectiveness of the proposed method. A t-SNE visualization further shows that the learned representation space separates relations more clearly than the baselines do. Furthermore, we show that we can learn a different space for named entity recognition, again using a contrastive learning objective, and demonstrate how to combine both representation spaces in an entity-relation task.
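The two ingredients named in the abstract, a contrastive objective over embeddings and a KNN classifier on top of them, can be illustrated with a toy sketch. Everything here is an assumption made for illustration: the loss is a generic InfoNCE-style objective with label-defined positives, not necessarily the loss used in the paper, and the 2-D vectors, the relation labels (`works_for`, `born_in`), and the function names are hypothetical stand-ins for real CharacterBERT token representations.

```python
import math
from collections import Counter


def cosine(u, v):
    """Cosine similarity between two vectors given as lists of floats."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def contrastive_loss(embeddings, labels, temperature=0.1):
    """InfoNCE-style loss with label-defined positives (illustrative only).

    For each anchor, embeddings sharing its label are positives and all
    other embeddings are negatives. The loss is low when same-label
    embeddings are close and different-label embeddings are far apart.
    """
    total, n_anchors = 0.0, 0
    for i, (anchor, label) in enumerate(zip(embeddings, labels)):
        pos, denom = 0.0, 0.0
        for j, (other, other_label) in enumerate(zip(embeddings, labels)):
            if i == j:
                continue  # an anchor is never compared with itself
            score = math.exp(cosine(anchor, other) / temperature)
            denom += score
            if other_label == label:
                pos += score
        if pos > 0:  # skip anchors that have no positive in the batch
            total += -math.log(pos / denom)
            n_anchors += 1
    return total / n_anchors if n_anchors else 0.0


def knn_predict(query, train_embeddings, train_labels, k=3):
    """Majority vote over the k training embeddings most similar to query."""
    ranked = sorted(
        zip(train_embeddings, train_labels),
        key=lambda pair: cosine(query, pair[0]),
        reverse=True,
    )
    votes = Counter(label for _, label in ranked[:k])
    return votes.most_common(1)[0][0]


# Hypothetical relation labels and toy 2-D "embeddings".
labels = ["works_for", "works_for", "born_in", "born_in"]
clustered = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [0.1, 0.9]]
scattered = [[1.0, 0.0], [0.0, 1.0], [0.9, 0.1], [0.1, 0.9]]

loss_clustered = contrastive_loss(clustered, labels)
loss_scattered = contrastive_loss(scattered, labels)
prediction = knn_predict([0.8, 0.2], clustered, labels, k=3)
```

In this toy setting the loss is much smaller for `clustered`, where same-relation vectors sit together, than for `scattered`. That is the property that lets a plain nearest-neighbor vote work as the classifier once the space has been shaped by the contrastive objective.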
