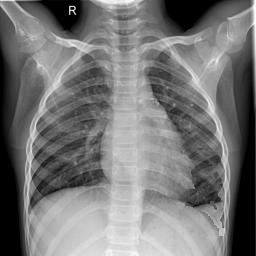

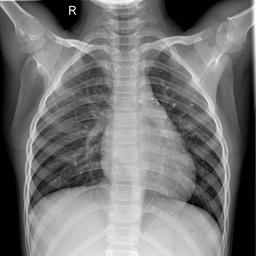

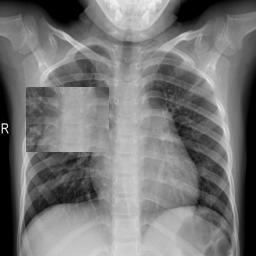

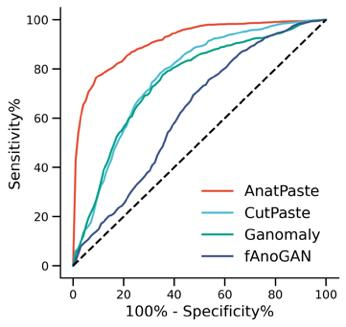

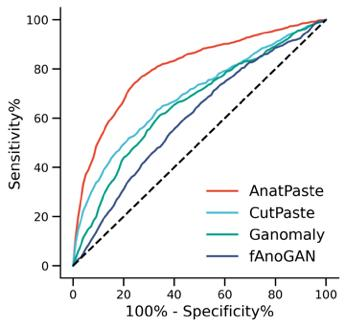

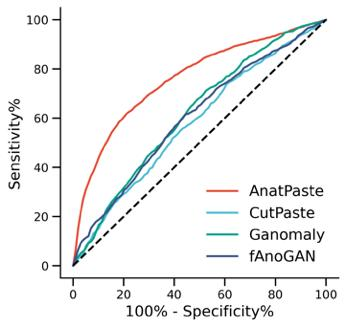

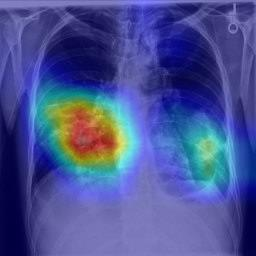

Large numbers of labeled medical images are essential for accurate anomaly detection, but manual annotation is labor-intensive and time-consuming. Self-supervised learning (SSL) is a training method that learns data-specific features without manual annotation. Several SSL-based models have been employed in medical image anomaly detection. These SSL methods learn effective representations for several domain-specific image types, such as natural images and industrial product images. However, because interpreting medical images requires medical expertise, typical SSL-based models are less effective at medical image anomaly detection. We present an SSL-based model that enables anatomical structure-based unsupervised anomaly detection (UAD). The model employs the anatomy-aware pasting (AnatPaste) augmentation tool. AnatPaste uses threshold-based lung segmentation as a pretext task to create anomalies in normal chest radiographs, which are then used for model pretraining. These synthetic anomalies resemble real anomalies and help the model recognize them. We evaluate our model on three open-source chest radiograph datasets. Our model achieves areas under the curve (AUCs) of 92.1%, 78.7%, and 81.9%, the highest among existing UAD models. This is the first SSL model to employ anatomical information as a pretext task. AnatPaste can be applied to various deep learning models and downstream tasks. It can also be applied to other modalities given an appropriate segmentation method. Our code is publicly available at: https://github.com/jun-sato/AnatPaste.
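The idea of creating anomalies only inside a thresholded lung region can be illustrated with a minimal sketch. This is not the authors' implementation (see the repository linked above for that); the function names, the fixed threshold, the square patch, and the brightening step are all assumptions chosen for illustration, and the image is assumed to be a 2D grayscale array scaled to [0, 1] in which the lungs appear dark.

```python
import numpy as np

def threshold_lung_mask(image, threshold=0.4):
    """Rough lung mask (assumption): lungs are dark, so keep low-intensity pixels."""
    return image < threshold

def anat_paste_like(image, rng, threshold=0.4, patch_size=16, shift=0.3):
    """Hypothetical anatomy-aware augmentation.

    Picks a random pixel inside the thresholded lung mask and brightens the
    lung pixels of a square patch around it. Returns the augmented image and
    a boolean mask of the modified pixels.
    """
    mask = threshold_lung_mask(image, threshold)
    out = image.copy()
    pasted = np.zeros_like(mask)  # boolean array, all False
    ys, xs = np.nonzero(mask)
    if len(ys) == 0:
        return out, pasted  # no lung-like region found; leave image unchanged

    # Choose the patch centre among lung pixels only.
    i = rng.integers(len(ys))
    cy, cx = ys[i], xs[i]
    h, w = image.shape
    half = patch_size // 2
    y0, y1 = max(cy - half, 0), min(cy + half, h)
    x0, x1 = max(cx - half, 0), min(cx + half, w)

    # Restrict the change to pixels that are both in the patch and in the mask.
    region = mask[y0:y1, x0:x1]
    patch = out[y0:y1, x0:x1]  # view into `out`, so edits apply in place
    patch[region] = np.clip(patch[region] + shift, 0.0, 1.0)
    pasted[y0:y1, x0:x1] = region
    return out, pasted

# Usage on a synthetic "radiograph": bright background with one dark lung field.
image = np.full((64, 64), 0.8)
image[16:48, 10:30] = 0.1
augmented, pasted = anat_paste_like(image, np.random.default_rng(0))
```

In a pretraining setup of this kind, the unmodified and augmented images would typically be labeled as normal and synthetic-anomalous for a classification pretext task; how the authors actually generate and blend the pasted region is defined in their code, not here.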
