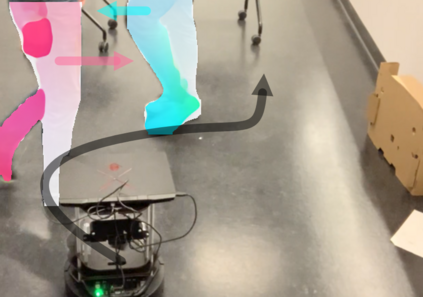

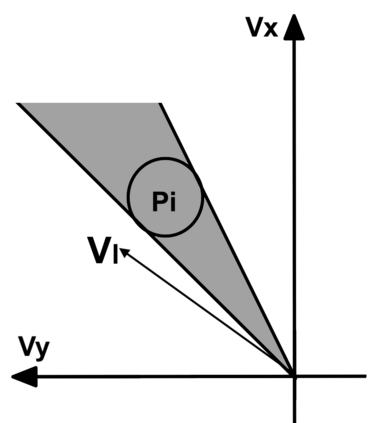

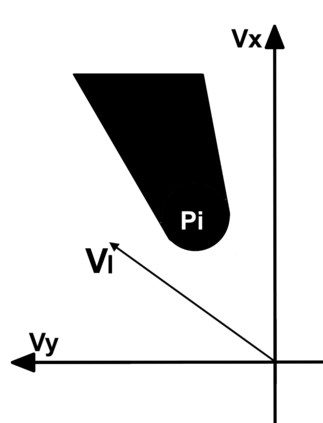

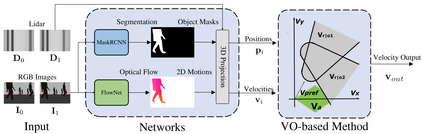

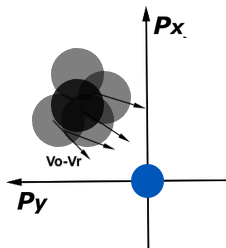

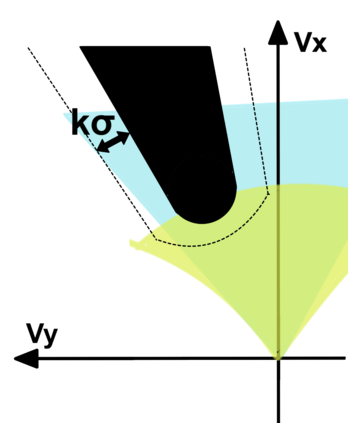

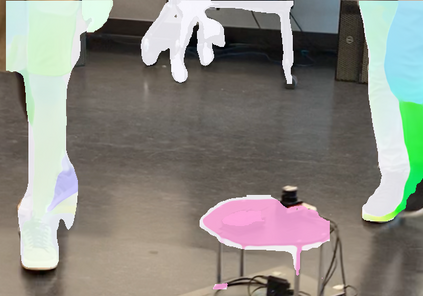

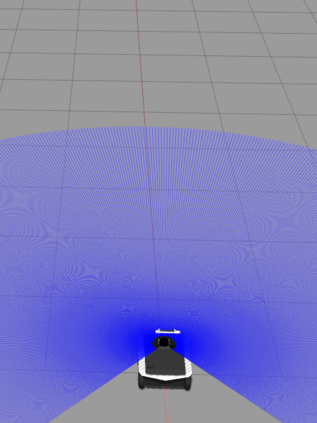

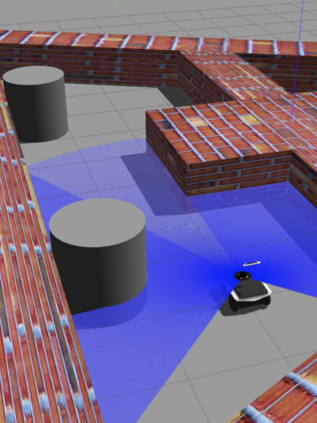

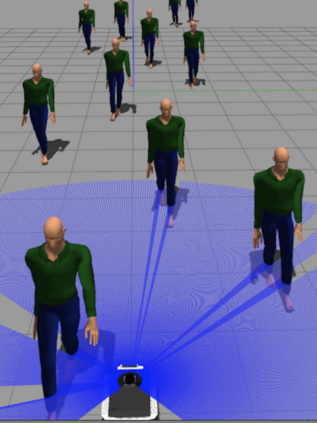

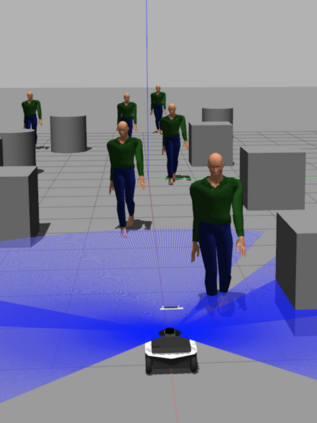

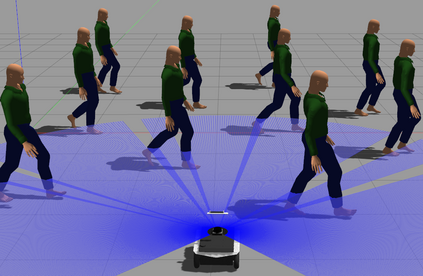

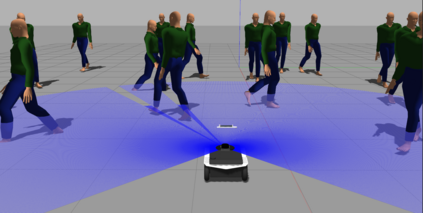

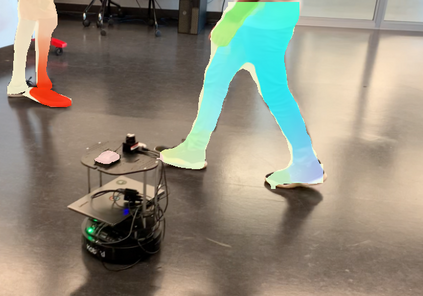

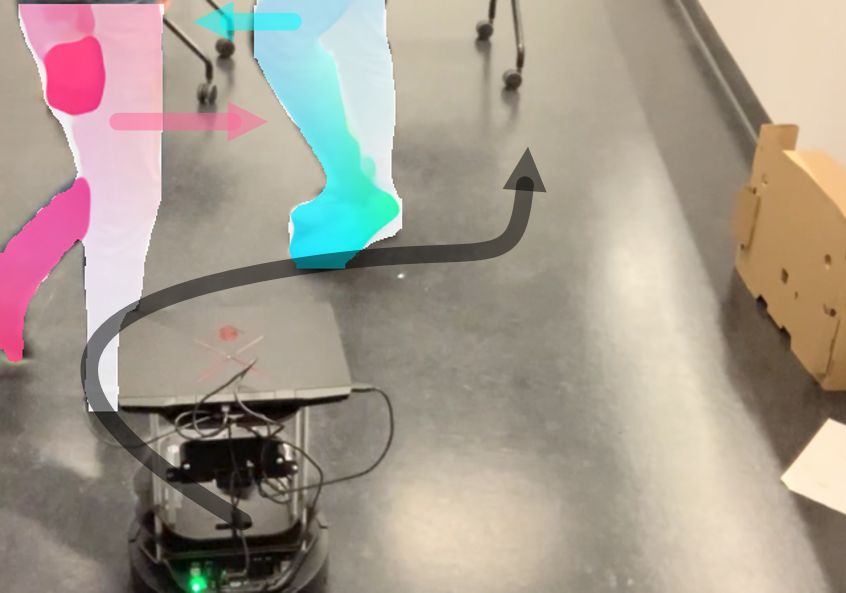

We present a modified velocity-obstacle (VO) algorithm that uses probabilistic partial observations of the environment to compute velocities and navigate a robot to a target. Our system uses commodity visual sensors, including a monocular camera and a 2D LiDAR, to explicitly predict the velocities and positions of surrounding obstacles through optical flow estimation, object detection, and sensor fusion. A key aspect of our work is coupling the perception (OF: optical flow) and planning (VO) components for reliable navigation. Overall, our OF-VO algorithm, which combines learning-based perception with model-based planning, outperforms prior algorithms in terms of navigation time and collision-avoidance success rate. Our method also provides bounds on the probabilistic collision avoidance algorithm. We highlight the real-time performance of OF-VO on a Turtlebot navigating among pedestrians in both simulated and real-world scenes. A demo video is available at https://gamma.umd.edu/ofvo.
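For readers unfamiliar with velocity obstacles, the following is a minimal sketch of the classic, deterministic VO test, not the probabilistic OF-VO variant described above. It assumes disc-shaped agents, exactly known obstacle positions and velocities, and constant obstacle velocity over the planning horizon. The function names `in_velocity_obstacle` and `pick_velocity`, the sampling grid, and all parameters are hypothetical and chosen only for illustration.

```python
import math


def in_velocity_obstacle(p_robot, p_obs, v_candidate, v_obs,
                         combined_radius, horizon=float("inf")):
    """Return True if v_candidate collides with the obstacle within `horizon`
    seconds, assuming the obstacle keeps the constant velocity v_obs."""
    # Position of the obstacle and velocity of the robot, both relative.
    px, py = p_obs[0] - p_robot[0], p_obs[1] - p_robot[1]
    vx, vy = v_candidate[0] - v_obs[0], v_candidate[1] - v_obs[1]

    c = px * px + py * py - combined_radius ** 2
    if c <= 0.0:
        return True  # the two discs already overlap
    a = vx * vx + vy * vy
    if a == 0.0:
        return False  # no relative motion, the gap never closes
    b = px * vx + py * vy
    if b <= 0.0:
        return False  # moving away from (or tangentially to) the obstacle

    # Solve |p - v t|^2 = R^2, i.e. a t^2 - 2 b t + c = 0, for the first hit.
    disc = b * b - a * c
    if disc < 0.0:
        return False  # the relative-velocity ray misses the enlarged disc
    t_hit = (b - math.sqrt(disc)) / a
    return t_hit <= horizon


def pick_velocity(p_robot, v_pref, obstacles, max_speed, combined_radius,
                  horizon=5.0, n_angles=36, n_speeds=5):
    """Sample candidate velocities and return the collision-free one closest
    to v_pref, or None if every sample lies inside some velocity obstacle.
    `obstacles` is a list of (position, velocity) pairs."""
    candidates = [(0.0, 0.0), tuple(v_pref)]
    for k in range(1, n_speeds + 1):
        speed = max_speed * k / n_speeds
        for j in range(n_angles):
            theta = 2.0 * math.pi * j / n_angles
            candidates.append((speed * math.cos(theta), speed * math.sin(theta)))

    best, best_cost = None, float("inf")
    for v in candidates:
        if any(in_velocity_obstacle(p_robot, p, v, u, combined_radius, horizon)
               for p, u in obstacles):
            continue
        cost = math.hypot(v[0] - v_pref[0], v[1] - v_pref[1])
        if cost < best_cost:
            best, best_cost = v, cost
    return best


# Example: a static obstacle 2 m ahead blocks the preferred velocity.
obstacles = [((2.0, 0.0), (0.0, 0.0))]
v = pick_velocity((0.0, 0.0), (1.0, 0.0), obstacles,
                  max_speed=1.0, combined_radius=0.5)
```

A probabilistic variant would replace the hard inside/outside test with a collision probability computed from uncertain obstacle estimates; that step is specific to the paper and is not reproduced here.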
