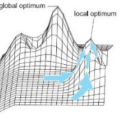

The Metropolis algorithm (MA) is a classic stochastic local search heuristic. It avoids getting stuck in local optima by occasionally accepting inferior solutions. To better and in a rigorous manner understand this ability, we conduct a mathematical runtime analysis of the MA on the CLIFF benchmark. Apart from one local optimum, cliff functions are monotonically increasing towards the global optimum. Consequently, to optimize a cliff function, the MA only once needs to accept an inferior solution. Despite seemingly being an ideal benchmark for the MA to profit from its main working principle, our mathematical runtime analysis shows that this hope does not come true. Even with the optimal temperature (the only parameter of the MA), the MA optimizes most cliff functions less efficiently than simple elitist evolutionary algorithms (EAs), which can only leave the local optimum by generating a superior solution possibly far away. This result suggests that our understanding of why the MA is often very successful in practice is not yet complete. Our work also suggests to equip the MA with global mutation operators, an idea supported by our preliminary experiments.

翻译:Metropolis算法 (MA) 是一种经典的随机局部搜索启发式算法。它通过偶尔接受次优解来避免陷入局部最优解。为了更好地并以严格的方式理解这种能力,我们对CLIFF基准上的MA进行了数学运行时间分析。除了一个局部最优解,cliff函数朝向全局最优解单调递增。因此,要优化cliff函数,MA只需要接受一次较差的解。尽管这似乎是MA从其主要工作原理中获益的理想基准,但我们的数学运行时间分析显示,即使具有最佳温度(MA的唯一参数),MA优化大多数cliff函数的效率也不如简单的精英遗传算法(EAs),EAs只能通过产生可能远离的更优解来离开局部最优解。该结果表明,我们对MA为什么在实践中经常非常成功的理解还不完全。我们的工作还建议为MA配备全局变异算子,这个想法得到了我们的初步实验的支持。