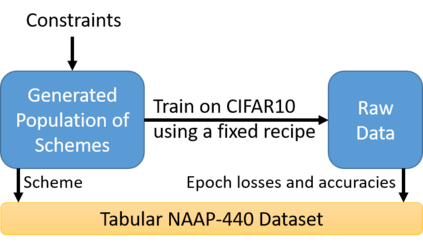

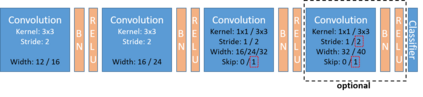

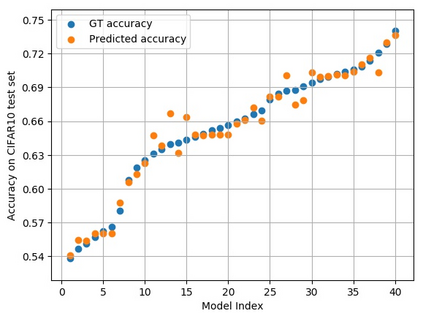

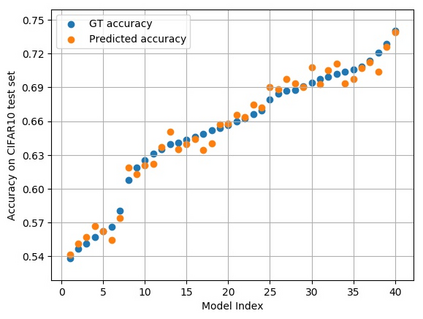

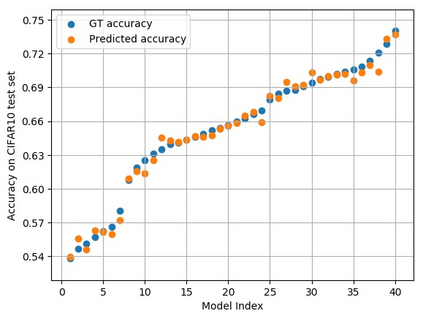

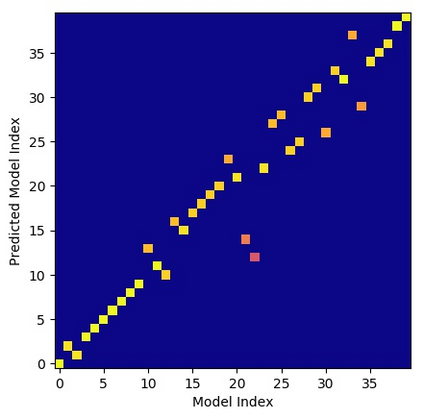

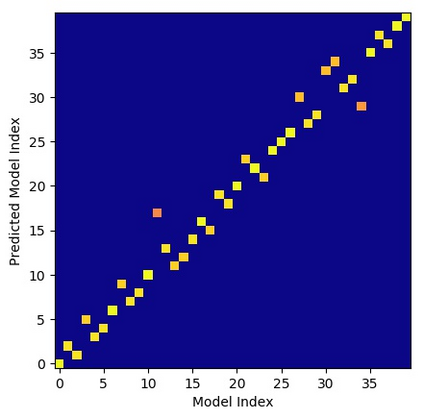

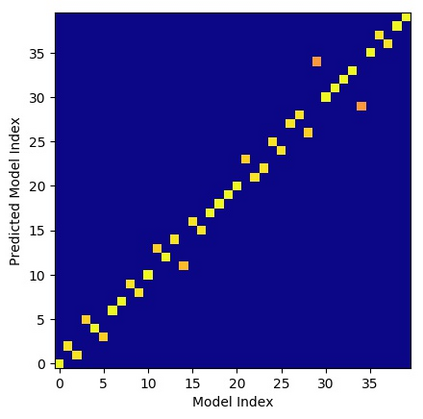

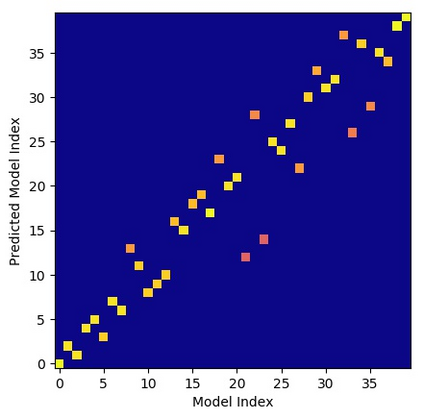

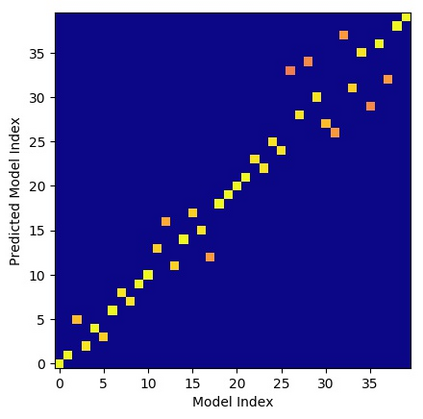

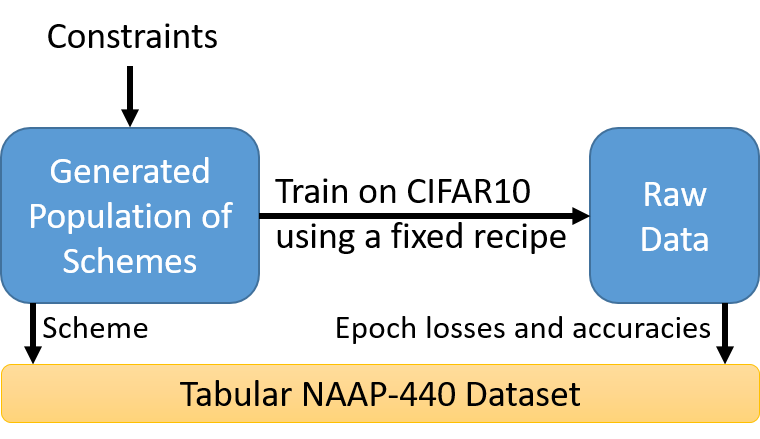

Neural architecture search (NAS) has become a common approach for developing and discovering new neural architectures for different target platforms and purposes. However, scanning the search space requires long training runs for many candidate architectures, which is costly in both computational resources and time. Regression algorithms are a common tool for predicting a candidate architecture's accuracy, and they can dramatically accelerate the search procedure. We propose a new baseline to support the development of regression algorithms that predict an architecture's accuracy either from its scheme alone or after training it for only a minimal number of epochs. To that end, we introduce NAAP-440, a dataset of 440 neural architectures trained on CIFAR10 using a fixed recipe. Our experiments indicate that, using off-the-shelf regression algorithms and running up to 10% of the training process, it is possible not only to predict an architecture's accuracy rather precisely, but also to preserve the accuracy ordering of the architectures with a minimal number of monotonicity violations. This approach may serve as a powerful tool for accelerating NAS-based studies and thus dramatically increase their efficiency. The dataset and code used in the study have been made public.
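To make the notion of a monotonicity violation concrete, the following minimal sketch counts the pairs of architectures whose predicted accuracies are ordered opposite to their true accuracies. This pairwise definition is an assumption made for illustration and may differ from the exact metric used in the study; the function name `count_monotonicity_violations` and the accuracy values are hypothetical and are not taken from NAAP-440.

```python
from itertools import combinations


def count_monotonicity_violations(true_acc, predicted_acc):
    """Count architecture pairs whose predicted order contradicts their true order.

    Assumed definition (not necessarily the one used in the study): a pair
    (i, j) is a violation when the true and predicted accuracy differences
    have strictly opposite signs. Ties in either list are not counted.
    """
    if len(true_acc) != len(predicted_acc):
        raise ValueError("both sequences must have the same length")

    violations = 0
    for i, j in combinations(range(len(true_acc)), 2):
        true_diff = true_acc[i] - true_acc[j]
        pred_diff = predicted_acc[i] - predicted_acc[j]
        # Opposite signs give a negative product.
        if true_diff * pred_diff < 0:
            violations += 1
    return violations


# Made-up accuracies for four hypothetical architectures (not NAAP-440 data).
true_acc = [0.81, 0.85, 0.88, 0.90]
predicted_acc = [0.80, 0.86, 0.91, 0.89]

violations = count_monotonicity_violations(true_acc, predicted_acc)
total_pairs = len(true_acc) * (len(true_acc) - 1) // 2
print(f"{violations} of {total_pairs} pairs violate the true ordering")
```

In this toy case only the last two architectures are swapped by the predictor, so a regressor could have a nonzero accuracy error and still rank almost all candidates correctly, which is the property that matters when NAS only needs to pick the best candidates.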
