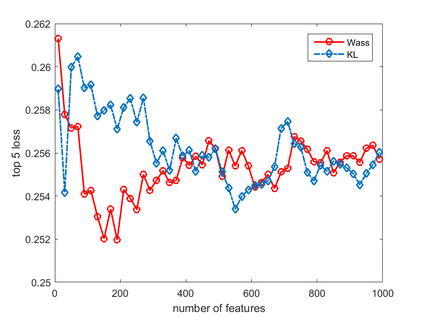

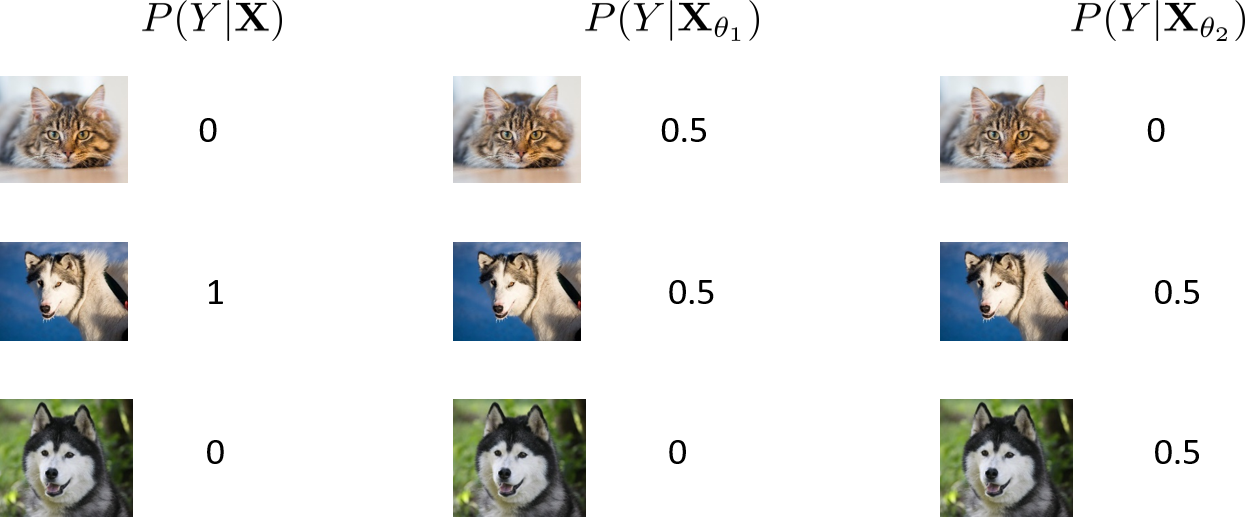

This paper presents a novel feature selection method for machine learning based on the Wasserstein distance. Unlike traditional methods based on correlation or Kullback-Leibler (KL) divergence, our approach uses the Wasserstein distance to assess feature similarity, which inherently captures relationships between classes and makes the method robust to noisy labels. We introduce a Markov blanket-based feature selection algorithm and show analytically that it reduces the impact of noisy labels without relying on a specific noise model. We also provide a lower bound on its effectiveness that remains meaningful in the presence of noise. Experimental results across multiple datasets demonstrate that our approach consistently outperforms traditional methods, particularly in noisy settings.
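To make the central quantity concrete, the following is a minimal sketch of the empirical 1-Wasserstein distance between two one-dimensional samples, used here to compare a feature's distribution under two class labels. It is not the Markov blanket-based algorithm described in the abstract, whose details are not given here. The function names `wasserstein_1d` and `class_separation`, the binary-label setup, and the toy data are all assumptions made purely for illustration.

```python
from bisect import bisect_right


def wasserstein_1d(u, v):
    """Empirical 1-Wasserstein distance between two 1-D samples.

    Computed as the area between the two empirical CDFs.
    """
    u_sorted, v_sorted = sorted(u), sorted(v)
    points = sorted(set(u_sorted) | set(v_sorted))
    total = 0.0
    for left, right in zip(points, points[1:]):
        # Both empirical CDFs are constant on [left, right).
        cdf_u = bisect_right(u_sorted, left) / len(u_sorted)
        cdf_v = bisect_right(v_sorted, left) / len(v_sorted)
        total += abs(cdf_u - cdf_v) * (right - left)
    return total


def class_separation(column, labels):
    """Hypothetical relevance score (an assumption, not the paper's criterion):
    distance between the feature's distributions under label 0 and label 1."""
    class0 = [x for x, y in zip(column, labels) if y == 0]
    class1 = [x for x, y in zip(column, labels) if y == 1]
    return wasserstein_1d(class0, class1)


# Toy data, invented for illustration only.
labels = [0, 0, 0, 0, 1, 1, 1, 1]
informative = [0.0, 1.0, 2.0, 3.0, 10.0, 11.0, 12.0, 13.0]
uninformative = [0.0, 5.0, 10.0, 15.0, 0.0, 5.0, 10.0, 15.0]

clean_score = class_separation(informative, labels)    # 10.0
flat_score = class_separation(uninformative, labels)   # 0.0

# Swap two labels to mimic label noise; the informative feature's
# score drops but stays well above the uninformative one.
noisy_labels = [0, 0, 0, 1, 1, 1, 1, 0]
noisy_score = class_separation(informative, noisy_labels)  # 5.5
```

In this toy case the two class-conditional samples of the informative feature have disjoint supports, a situation in which KL divergence is infinite, while the Wasserstein distance remains finite and reflects how far apart the classes lie along the feature axis.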
