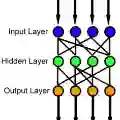

Current neural network architectures are many times harder to train because of the increasing size and complexity of the datasets used. Our objective is to design more efficient training algorithms that exploit causal relationships inferred from neural networks. Transfer entropy (TE) was initially introduced as an information transfer measure to quantify the statistical coherence between events (time series). It was later related to causality, even though the two concepts are not the same. Only a few papers report applications of causality or TE in neural networks. Our contribution is an information-theoretical method for analyzing information transfer between the nodes of feedforward neural networks. The information transfer is measured by the TE of feedback neural connections. Intuitively, TE measures the relevance of a connection in the network, and the feedback amplifies this connection. We introduce a backpropagation-type training algorithm that uses TE feedback connections to improve its performance.
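To make the TE quantity concrete, here is a minimal sketch that assumes discrete (e.g. binarized) time series and a history length of one step. Under those assumptions, the transfer entropy from a source $Y$ to a target $X$ is $T_{Y \to X} = \sum p(x_{t+1}, x_t, y_t) \log_2 \frac{p(x_{t+1} \mid x_t, y_t)}{p(x_{t+1} \mid x_t)}$, and it can be estimated from empirical counts. The function name `transfer_entropy` and the plug-in (counting) estimator are illustrative choices and are not necessarily the estimator used in this work. The sketch also does not show how TE values are fed back into the backpropagation updates.

```python
from collections import Counter
from math import log2


def transfer_entropy(source, target):
    """Plug-in estimate (in bits) of TE from `source` (Y) to `target` (X).

    Hypothetical helper: assumes discrete symbols and history length 1.
    """
    if len(source) != len(target) or len(target) < 2:
        raise ValueError("series must have equal length >= 2")
    # Observed triples (x_{t+1}, x_t, y_t).
    triples = [(target[t + 1], target[t], source[t]) for t in range(len(target) - 1)]
    n = len(triples)
    c_full = Counter(triples)                          # (x_{t+1}, x_t, y_t)
    c_past = Counter((xt, yt) for _, xt, yt in triples)  # (x_t, y_t)
    c_self = Counter((x1, xt) for x1, xt, _ in triples)  # (x_{t+1}, x_t)
    c_xt = Counter(xt for _, xt, _ in triples)           # x_t
    te = 0.0
    for (x1, xt, yt), c in c_full.items():
        p_joint = c / n
        p_given_both = c / c_past[(xt, yt)]          # p(x_{t+1} | x_t, y_t)
        p_given_self = c_self[(x1, xt)] / c_xt[xt]   # p(x_{t+1} | x_t)
        te += p_joint * log2(p_given_both / p_given_self)
    return te


# Usage: the target copies the source with a one-step lag, so Y informs X.
src = [0, 1, 1, 0, 1, 0, 0, 1, 0]
tgt = [0] + src[:-1]
print(transfer_entropy(src, tgt))
```

For this toy pair the estimate is clearly positive (about 0.95 bits), because the next target value is fully determined by the current source value, whereas a constant target yields zero. In the setting described above, a value like this, computed between the activations of two nodes, is what would indicate the relevance of the connection between them.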

翻译:由于使用过的数据集的大小和复杂性不断增加,目前的神经网络结构培训难度比现在大很多倍。我们的目标是利用从神经网络中推断的因果关系来设计更有效的培训算法。传输权(TE)最初作为一种信息传输措施被引入,用于量化事件(时间序列)之间的统计一致性。后来,它与因果关系有关,即使它们并不相同。在神经网络中,报告因果关系应用或TE的文件很少。我们的贡献是一种信息理论方法,用来分析进料神经网络节点之间的信息传输。信息传输用反馈神经连接的TE衡量。直接衡量网络连接的相关性,反馈权则放大这一连接。我们引入了一种反向调整型培训算法,使用TE反馈连接来改进网络的性能。