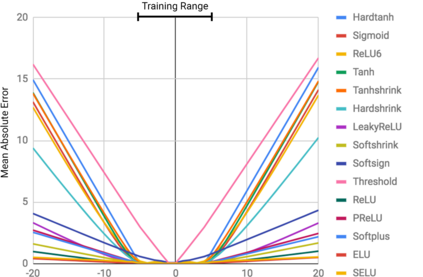

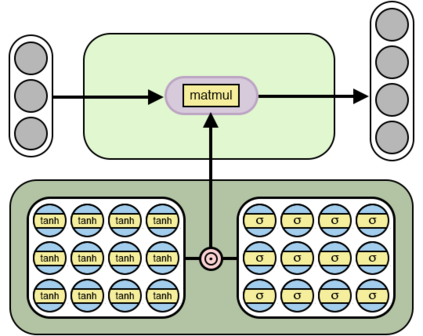

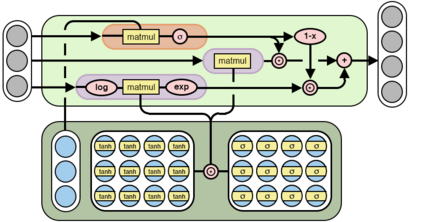

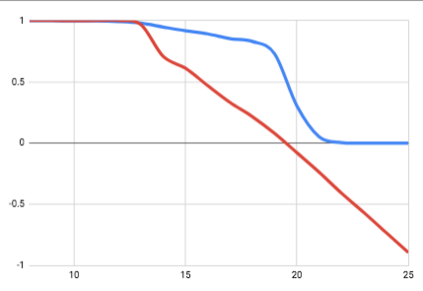

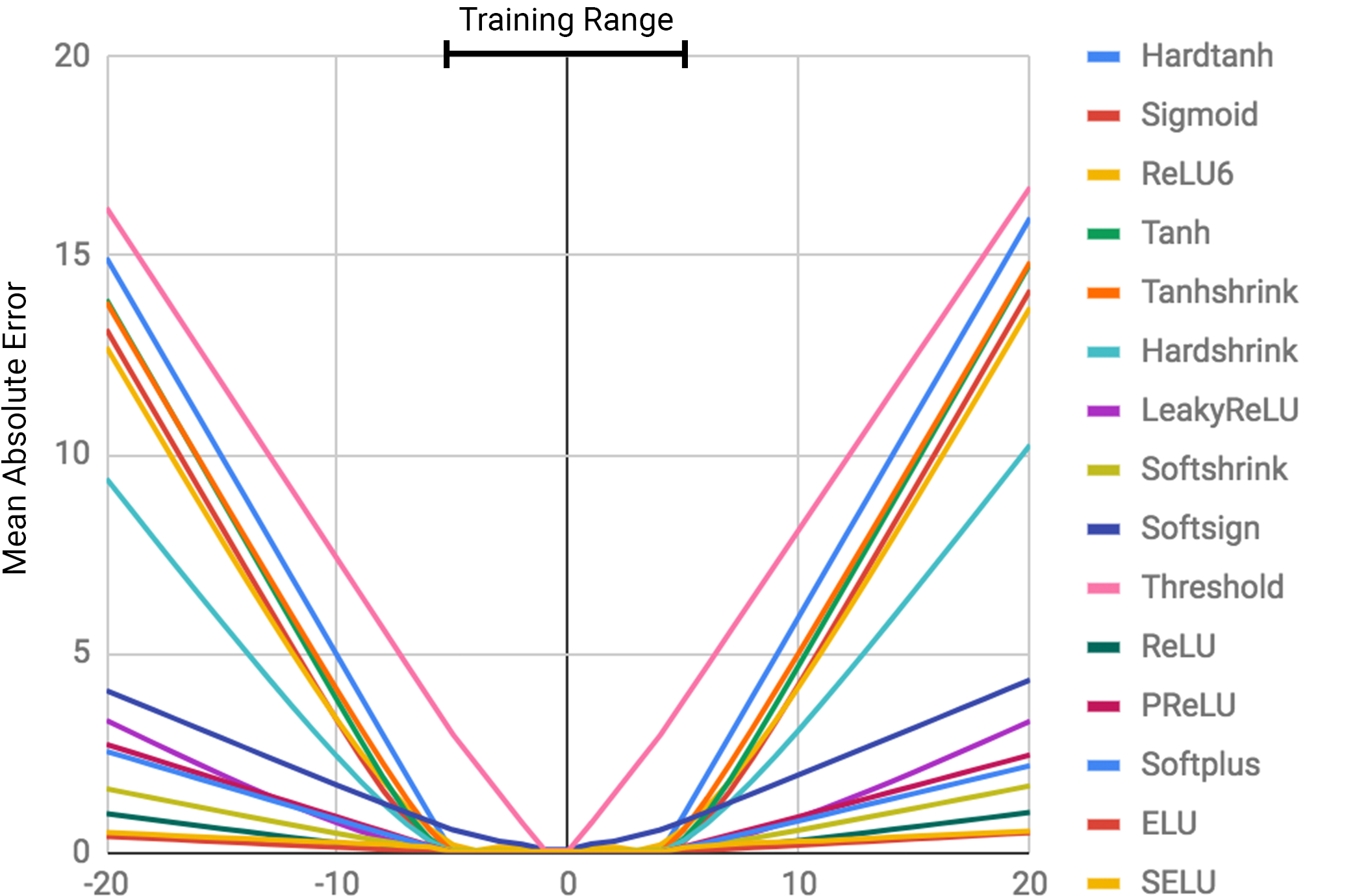

Neural networks can learn to represent and manipulate numerical information, but they seldom generalize well outside of the range of numerical values encountered during training. To encourage more systematic numerical extrapolation, we propose an architecture that represents numerical quantities as linear activations which are manipulated using primitive arithmetic operators, controlled by learned gates. We call this module a neural arithmetic logic unit (NALU), by analogy to the arithmetic logic unit in traditional processors. Experiments show that NALU-enhanced neural networks can learn to track time, perform arithmetic over images of numbers, translate numerical language into real-valued scalars, execute computer code, and count objects in images. In contrast to conventional architectures, we obtain substantially better generalization both inside and outside of the range of numerical values encountered during training, often extrapolating orders of magnitude beyond trained numerical ranges.
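To make the idea of "linear activations manipulated by primitive arithmetic operators, controlled by learned gates" concrete, here is a minimal forward-pass sketch in plain Python. The abstract gives no equations, so everything specific here is an assumption: it follows the commonly cited NALU formulation, and the parameter names `W_hat`, `M_hat`, and `G`, the `eps` constant, and all helper functions are illustrative. It shows one cell with hand-set parameters, not the authors' implementation or a trained model.

```python
import math


def sigmoid(z):
    # Numerically stable logistic function (avoids overflow for large |z|).
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def matvec(W, x):
    # Plain matrix-vector product; no bias and no nonlinearity.
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def nac_weights(W_hat, M_hat):
    # Assumed parameterization: tanh(W_hat) * sigmoid(M_hat), elementwise.
    # This keeps every effective weight in [-1, 1] and biases it toward
    # -1, 0, or 1, so outputs are sums/differences of inputs.
    return [
        [math.tanh(w) * sigmoid(m) for w, m in zip(w_row, m_row)]
        for w_row, m_row in zip(W_hat, M_hat)
    ]


def nalu_forward(x, W_hat, M_hat, G, eps=1e-8):
    W = nac_weights(W_hat, M_hat)
    # Additive path: linear combination of the inputs.
    add_path = matvec(W, x)
    # Multiplicative path: the same weights applied in log space,
    # so sums of logs become products after exponentiation.
    log_x = [math.log(abs(xi) + eps) for xi in x]
    mul_path = [math.exp(v) for v in matvec(W, log_x)]
    # Learned gate chooses between the two paths per output unit.
    gate = [sigmoid(v) for v in matvec(G, x)]
    return [g * a + (1.0 - g) * m for g, a, m in zip(gate, add_path, mul_path)]


# Hand-set (not learned) parameters that saturate the weights near 1.
W_hat = [[20.0, 20.0]]
M_hat = [[20.0, 20.0]]
G_add = [[10.0, 10.0]]    # gate near 1: selects the additive path
G_mul = [[-10.0, -10.0]]  # gate near 0: selects the multiplicative path

sum_small = nalu_forward([3.0, 4.0], W_hat, M_hat, G_add)[0]
prod_small = nalu_forward([3.0, 4.0], W_hat, M_hat, G_mul)[0]
prod_large = nalu_forward([300.0, 400.0], W_hat, M_hat, G_mul)[0]
```

With these saturated weights the cell computes approximately `3 + 4` or `3 * 4` depending on the gate, and the same parameters give approximately `300 * 400` for much larger inputs. That is the intuition behind the extrapolation claim: because the path has no squashing nonlinearity, the computed function does not depend on the magnitude of the inputs.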
