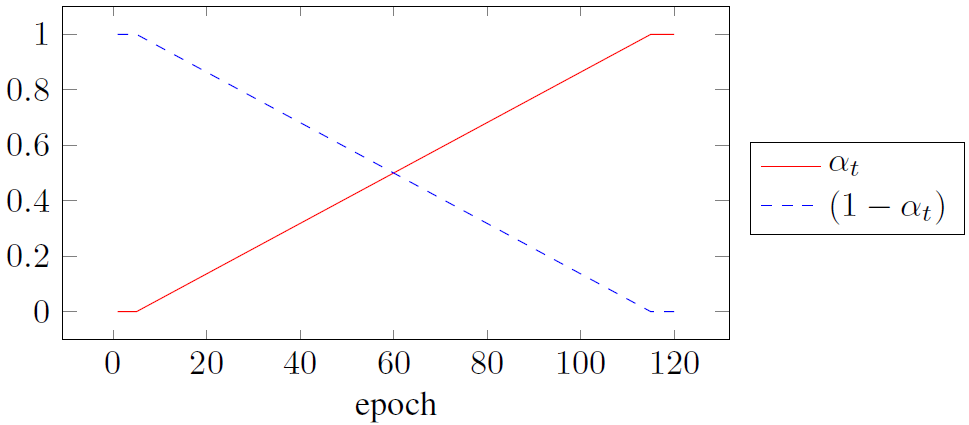

In recent years, novel activation functions have been proposed to improve the performance of neural networks, and they often outperform their ReLU counterparts. However, in some environments the availability of complex activations is limited, and usually only ReLU is supported. In this paper we propose methods that improve the performance of ReLU networks by exploiting these novel activations during model training. More specifically, we propose ensemble activations composed of ReLU and one of these novel activations. The coefficients of the ensemble are neither fixed nor learned; instead, they are updated progressively during training so that, by the end of training, only the ReLU activations remain active in the network and the other activations can be removed. As a result, at inference time the network contains only ReLU activations. We perform extensive evaluations on the ImageNet classification task using various compact network architectures and various novel activation functions. The results show top-1 accuracy gains of 0.2 to 0.8%, which confirms the applicability of the proposed methods. Furthermore, we apply the proposed methods to semantic segmentation, where they boost the performance of a compact segmentation network by 0.34% mIoU on the Cityscapes dataset.
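The abstract does not spell out how the ensemble coefficients are updated, so the following is only a minimal sketch, under stated assumptions, of a ReLU/novel-activation ensemble whose coefficient is driven by training progress. Swish (SiLU) stands in for "one of these novel activations", and the linear ramp in the hypothetical `relu_weight` function (with its hypothetical `ramp_end` parameter) is an illustrative choice, not the authors' schedule. Scalars are used for clarity; in a real network the same expression would be applied element-wise to activation tensors.

```python
import math
from typing import Callable


def relu(x: float) -> float:
    """Standard ReLU: max(0, x)."""
    return max(0.0, x)


def swish(x: float) -> float:
    """Swish/SiLU, x * sigmoid(x), computed in a numerically stable way.
    Used here only as an example of a 'novel' activation (assumption)."""
    if x >= 0.0:
        return x / (1.0 + math.exp(-x))
    z = math.exp(x)  # exp of a negative number cannot overflow
    return x * z / (1.0 + z)


def relu_weight(step: int, total_steps: int, ramp_end: float = 0.8) -> float:
    """Hypothetical schedule: the ReLU coefficient grows linearly from 0 to 1,
    reaches 1 after a fraction `ramp_end` of training, and stays there."""
    if total_steps <= 0 or not 0.0 < ramp_end <= 1.0:
        raise ValueError("total_steps must be positive and 0 < ramp_end <= 1")
    return min(1.0, step / (ramp_end * total_steps))


class EnsembleActivation:
    """alpha * ReLU(x) + (1 - alpha) * novel(x), where alpha is set by the
    schedule above rather than fixed or learned by gradient descent."""

    def __init__(self, novel: Callable[[float], float] = swish):
        self.novel = novel
        self.alpha = 0.0  # weight on the ReLU branch

    def set_progress(self, step: int, total_steps: int) -> None:
        self.alpha = relu_weight(step, total_steps)

    def __call__(self, x: float) -> float:
        if self.alpha >= 1.0:
            return relu(x)  # novel branch has zero weight; skip it entirely
        return self.alpha * relu(x) + (1.0 - self.alpha) * self.novel(x)

    def export_for_inference(self) -> Callable[[float], float]:
        """Once alpha has reached 1, the novel branch can be dropped."""
        if self.alpha < 1.0:
            raise RuntimeError("ReLU coefficient has not reached 1 yet")
        return relu


# Usage: the same input passes through a progressively more ReLU-like activation.
act = EnsembleActivation()
for step in (0, 40, 80, 100):
    act.set_progress(step, total_steps=100)
    print(step, act.alpha, act(-1.0))
inference_act = act.export_for_inference()  # plain ReLU
```

Because the coefficient in this sketch follows training progress rather than gradients, the network cannot learn to keep relying on the novel branch; once the coefficient reaches 1, that branch contributes nothing and can be removed, leaving a ReLU-only model for deployment.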
