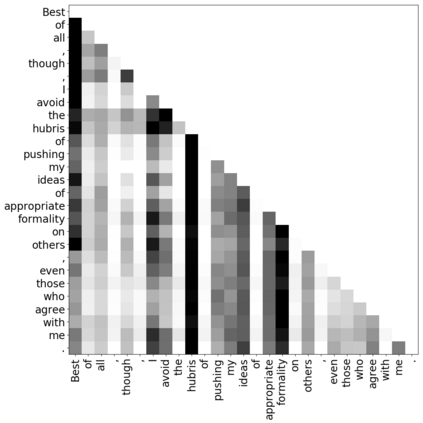

Modern deep transfer learning approaches have mainly focused on learning generic feature vectors from one task that are transferable to other tasks, such as word embeddings in language and pretrained convolutional features in vision. However, these approaches usually transfer unary features and largely ignore more structured graphical representations. This work explores the possibility of learning generic latent relational graphs that capture dependencies between pairs of data units (e.g., words or pixels) from large-scale unlabeled data and transferring the graphs to downstream tasks. Our proposed transfer learning framework improves performance on various tasks, including question answering, natural language inference, sentiment analysis, and image classification. We also show that the learned graphs are generic enough to be transferred to embeddings on which they have not been trained (including GloVe embeddings, ELMo embeddings, and task-specific RNN hidden units), or to embedding-free units such as image pixels.
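The abstract does not specify how a learned graph is computed or consumed. As a rough illustration only, the sketch below assumes the graph is a T×T affinity matrix whose row i weights how strongly unit i depends on every other unit. The helper names `affinity_graph` and `propagate`, the squared-ReLU pair scoring, and the row normalization are hypothetical choices made for this example, not the authors' method. What it does show is the transfer property the abstract describes: the graph depends only on the set of units, not on their feature vectors, so the same matrix can mix features of any dimensionality, whether word embeddings or raw pixel intensities.

```python
from typing import List

Matrix = List[List[float]]


def affinity_graph(keys: Matrix, queries: Matrix) -> Matrix:
    """Hypothetical graph predictor (an assumption, not the paper's).

    Scores every pair (i, j) of units with a squared ReLU of the
    key/query dot product, then normalizes each row so that row i is a
    distribution over the units that unit i depends on.
    """
    n = len(keys)
    graph: Matrix = []
    for i in range(n):
        scores = [
            max(0.0, sum(k * q for k, q in zip(keys[i], queries[j]))) ** 2
            for j in range(n)
        ]
        total = sum(scores)
        if total == 0.0:
            # No positive affinity: fall back to a self-loop so the unit
            # keeps its own features after propagation.
            row = [1.0 if j == i else 0.0 for j in range(n)]
        else:
            row = [s / total for s in scores]
        graph.append(row)
    return graph


def propagate(graph: Matrix, features: Matrix) -> Matrix:
    """Mix each unit's features with those of related units: out = G @ H.

    G is T x T and H is T x d, so d is unconstrained: H may hold word
    embeddings, RNN hidden states, or single pixel intensities.
    """
    n, d = len(features), len(features[0])
    return [
        [sum(graph[i][j] * features[j][c] for j in range(n)) for c in range(d)]
        for i in range(n)
    ]


if __name__ == "__main__":
    # Toy graph over three units, computed once from hypothetical keys/queries.
    G = affinity_graph(
        [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]],
        [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]],
    )
    # The same graph reused on two feature sets of different widths.
    word_vectors = [[1.0, 0.0, 2.0], [0.0, 1.0, 0.0], [3.0, 3.0, 3.0]]
    pixels = [[0.0], [1.0], [2.0]]
    print(propagate(G, word_vectors))
    print(propagate(G, pixels))
```

Keeping graph construction separate from propagation mirrors the abstract's claim: the graph is produced once and then applied to whatever per-unit features a downstream task supplies.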
