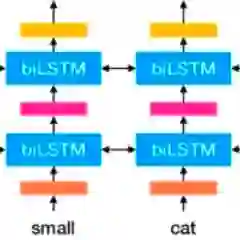

We introduce a new type of deep contextualized word representation that models both (1) complex characteristics of word use (e.g., syntax and semantics), and (2) how these uses vary across linguistic contexts (i.e., to model polysemy). Our word vectors are learned functions of the internal states of a deep bidirectional language model (biLM), which is pre-trained on a large text corpus. We show that these representations can be easily added to existing models and significantly improve the state of the art across six challenging NLP problems, including question answering, textual entailment and sentiment analysis. We also present an analysis showing that exposing the deep internals of the pre-trained network is crucial, allowing downstream models to mix different types of semi-supervision signals.
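The abstract says the word vectors are "learned functions of the internal states" of the biLM and that exposing all layers lets downstream models mix different signals. The sketch below illustrates one plausible form of such a function: a softmax-weighted sum of per-layer hidden states for a single token, scaled by a scalar. The abstract does not specify this weighting scheme, so it is an assumption here, as are the names `mix_layers`, `layer_weights`, and `gamma` and the toy layer states, which are hypothetical. In a real model the weights would be trained together with the downstream task.

```python
import math
from typing import List, Sequence


def softmax(weights: Sequence[float]) -> List[float]:
    """Normalize raw per-layer weights so they are positive and sum to 1."""
    peak = max(weights)  # subtract the max for numerical stability
    exps = [math.exp(w - peak) for w in weights]
    total = sum(exps)
    return [e / total for e in exps]


def mix_layers(
    layer_states: Sequence[Sequence[float]],
    layer_weights: Sequence[float],
    gamma: float = 1.0,
) -> List[float]:
    """Collapse the biLM layer states for ONE token into a single vector.

    layer_states  -- one hidden-state vector per biLM layer (hypothetical input)
    layer_weights -- one raw scalar per layer (assumed to be task-specific)
    gamma         -- global scale applied to the mixed vector (assumed)
    """
    if len(layer_states) != len(layer_weights):
        raise ValueError("need exactly one weight per layer")
    dim = len(layer_states[0])
    normalized = softmax(layer_weights)
    mixed = [0.0] * dim
    for s_j, state in zip(normalized, layer_states):
        if len(state) != dim:
            raise ValueError("all layer states must have the same dimension")
        for i, value in enumerate(state):
            mixed[i] += s_j * value
    return [gamma * x for x in mixed]


# Toy example: three layers, two-dimensional states (made-up numbers).
states = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]

# Equal raw weights average the layers.
uniform = mix_layers(states, [0.0, 0.0, 0.0])

# A large weight on the last layer makes the result track that layer.
top_heavy = mix_layers(states, [0.0, 0.0, 10.0])
```

With equal weights the result is the plain average of the layers. Raising one layer's weight shifts the mixed vector toward that layer, which is the sense in which a downstream task can choose which kind of signal to emphasize.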
