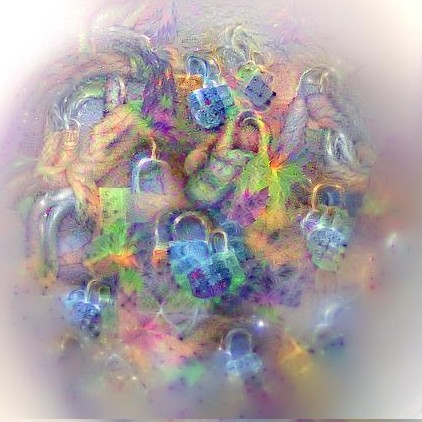

Visual interpretability of Convolutional Neural Networks (CNNs) has attracted significant attention because the complexity of CNNs makes their inner workings difficult to understand. Although many techniques have been proposed to visualize the class features of CNNs, most of them do not provide a correspondence between inputs and the features extracted in specific layers. This prevents the discovery of the stimuli to which each layer responds most strongly. We propose an approach that visually interprets CNN features, given a set of images, by creating corresponding images that depict the most informative features of a specific layer. Exploring features in this class-agnostic manner allows for a greater focus on the feature extractor of CNNs. Our method uses a dual-objective loss that combines activation maximization with distance minimization, and it requires neither a generator network nor modifications to the original model. This limits the number of FLOPs to that of the original network. We demonstrate the visualization quality on widely used architectures.
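
The dual-objective idea can be illustrated with a minimal sketch. This is not the authors' implementation: the toy linear-plus-ReLU `layer`, the squared input-space distance to the reference image, the weighting `lam`, and all function names are assumptions made purely for illustration. The sketch optimizes the input directly by gradient descent, so it needs no generator network and leaves the (toy) model unchanged.

```python
# Hypothetical toy example: a "layer" is a linear map followed by ReLU.
# The abstract does not specify the distance term; squared distance to the
# reference input is an assumption made here.

def layer(weights, x):
    """Toy stand-in for a CNN layer: one ReLU unit per weight row."""
    return [max(0.0, sum(w * v for w, v in zip(row, x))) for row in weights]

def dual_loss(weights, x, x_ref, lam):
    """Negative total activation plus lam times squared distance to x_ref."""
    activation = sum(layer(weights, x))
    distance = sum((a - b) ** 2 for a, b in zip(x, x_ref))
    return -activation + lam * distance

def dual_loss_grad(weights, x, x_ref, lam):
    """Analytic gradient of dual_loss with respect to the input x."""
    grad = [2.0 * lam * (a - b) for a, b in zip(x, x_ref)]
    for row in weights:
        # ReLU passes gradient only where the pre-activation is positive.
        if sum(w * v for w, v in zip(row, x)) > 0.0:
            for j, w in enumerate(row):
                grad[j] -= w
    return grad

def visualize(weights, x_ref, lam=0.5, lr=0.1, steps=200):
    """Start from the reference input and descend the dual loss."""
    x = list(x_ref)
    for _ in range(steps):
        g = dual_loss_grad(weights, x, x_ref, lam)
        x = [v - lr * gv for v, gv in zip(x, g)]
    return x

WEIGHTS = [[1.0, -0.5, 0.0], [0.2, 0.8, -0.3]]  # made-up layer weights
X_REF = [0.5, 0.5, 0.5]                         # made-up reference "image"
x_vis = visualize(WEIGHTS, X_REF)
```

In this toy setting the distance term keeps `x_vis` anchored to its reference input while the activation term pushes it toward what the layer responds to, which mirrors the input-to-feature correspondence the abstract describes. With a real CNN, the gradient would come from automatic differentiation rather than the hand-written formula used here.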
