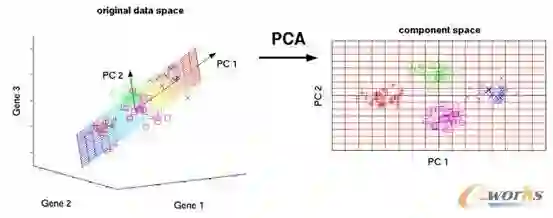

In a high-dimensional regression framework, we study the consequences of the naive two-step procedure in which the dimension of the input variables is first reduced and the reduced input variables are then used to predict the output variable with kernel regression. To analyze the resulting regression errors, we derive a novel stability result for kernel regression with respect to the Wasserstein distance. This allows us to bound the errors that occur when perturbed input data are used to fit the regression function. We apply the general stability result to principal component analysis (PCA). Exploiting known estimates from the literature on both PCA and kernel regression, we deduce convergence rates for the two-step procedure, which turns out to be particularly useful in a semi-supervised setting.
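The two-step procedure can be illustrated with a minimal, dependency-free sketch. Everything in it is an assumption made for illustration rather than the method analyzed here: the helper names (`pca_fit`, `pca_transform`, `nw_predict`, `run_demo`), the synthetic data, the bandwidth, and above all the choice of a Gaussian Nadaraya-Watson smoother as a simple stand-in for kernel regression. To mimic a semi-supervised setting, PCA is fitted on all inputs, while the regression step uses only the labeled subset.

```python
import math
import random


def pca_fit(X, k, iters=100):
    """Estimate the mean and top-k principal directions by power iteration."""
    n, d = len(X), len(X[0])
    mean = [sum(row[j] for row in X) / n for j in range(d)]
    Xc = [[row[j] - mean[j] for j in range(d)] for row in X]
    # Empirical covariance matrix (d x d).
    cov = [[sum(r[a] * r[b] for r in Xc) / n for b in range(d)] for a in range(d)]
    comps = []
    for _ in range(k):
        v = [1.0 / math.sqrt(d)] * d  # fixed start vector (illustrative choice)
        for _ in range(iters):
            w = [sum(cov[a][b] * v[b] for b in range(d)) for a in range(d)]
            norm = math.sqrt(sum(x * x for x in w))
            v = [x / norm for x in w]
        # Rayleigh quotient gives the eigenvalue of the direction just found.
        lam = sum(v[a] * sum(cov[a][b] * v[b] for b in range(d)) for a in range(d))
        comps.append(v)
        # Deflate so the next pass converges to the next direction.
        cov = [[cov[a][b] - lam * v[a] * v[b] for b in range(d)] for a in range(d)]
    return mean, comps


def pca_transform(X, mean, comps):
    """Step 1: project centered inputs onto the principal directions."""
    return [[sum((x - m) * c for x, m, c in zip(row, mean, comp)) for comp in comps]
            for row in X]


def nw_predict(Z_train, y_train, z, bandwidth):
    """Step 2: Gaussian-kernel Nadaraya-Watson estimate at the reduced point z."""
    weights = [math.exp(-sum((a - b) ** 2 for a, b in zip(zi, z)) / (2 * bandwidth ** 2))
               for zi in Z_train]
    return sum(w * y for w, y in zip(weights, y_train)) / sum(weights)


def run_demo(seed=0):
    """Synthetic example: 5-dimensional inputs close to a 1-dimensional subspace."""
    rng = random.Random(seed)
    u = [1.0, 2.0, -1.0, 0.5, 0.0]
    norm_u = math.sqrt(sum(c * c for c in u))
    u = [c / norm_u for c in u]

    # 400 noisy inputs in total, of which only the first 100 carry labels.
    t_all = [rng.uniform(-1.0, 1.0) for _ in range(400)]
    X_all = [[t * c + rng.gauss(0.0, 0.05) for c in u] for t in t_all]
    t_lab, X_lab = t_all[:100], X_all[:100]
    y_lab = [math.sin(2 * t) + rng.gauss(0.0, 0.1) for t in t_lab]

    # Step 1 uses all inputs; step 2 uses the labeled, reduced inputs only.
    mean, comps = pca_fit(X_all, k=1)
    Z_lab = pca_transform(X_lab, mean, comps)

    # Evaluate against the noise-free target on a grid away from the boundary.
    t_test = [-0.8 + 1.6 * i / 49 for i in range(50)]
    X_test = [[t * c for c in u] for t in t_test]
    Z_test = pca_transform(X_test, mean, comps)
    preds = [nw_predict(Z_lab, y_lab, z, bandwidth=0.15) for z in Z_test]
    return sum((p - math.sin(2 * t)) ** 2 for p, t in zip(preds, t_test)) / len(t_test)


if __name__ == "__main__":
    print("test MSE of the two-step estimator:", run_demo())
```

The sign of a principal direction is arbitrary, which is harmless here because the same projection is applied to training and test inputs. The projection is itself estimated from data, so the regression step effectively sees perturbed inputs, which is the kind of error the stability result is meant to control.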
