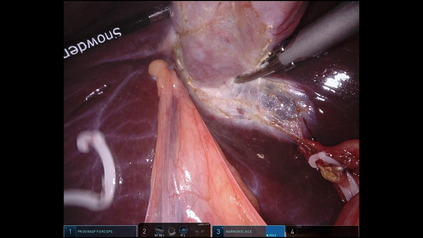

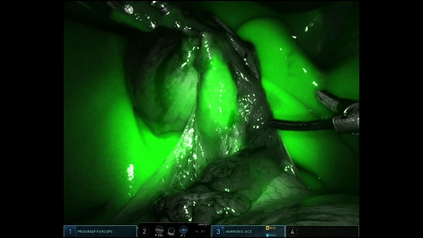

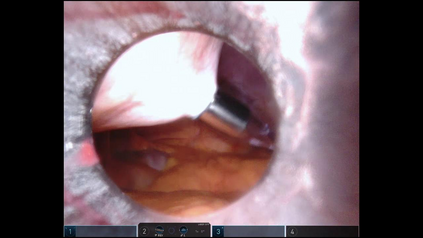

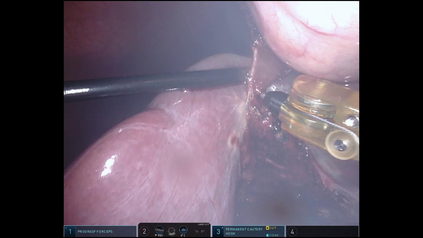

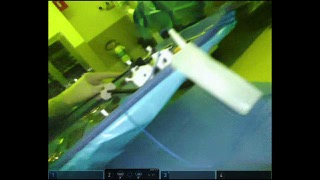

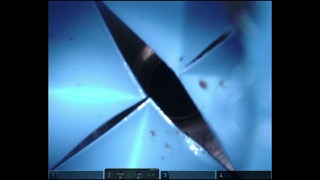

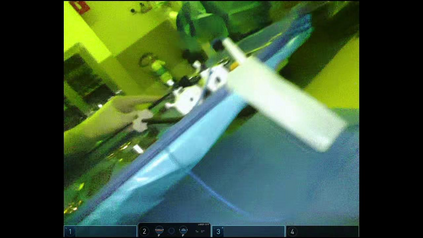

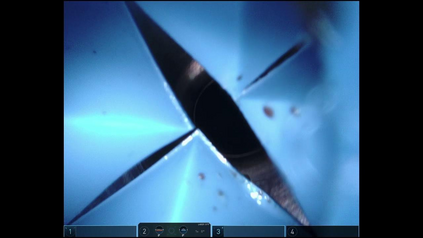

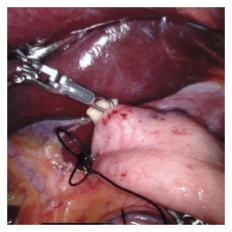

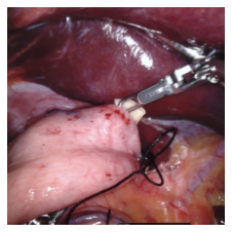

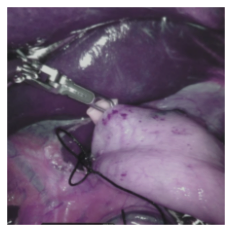

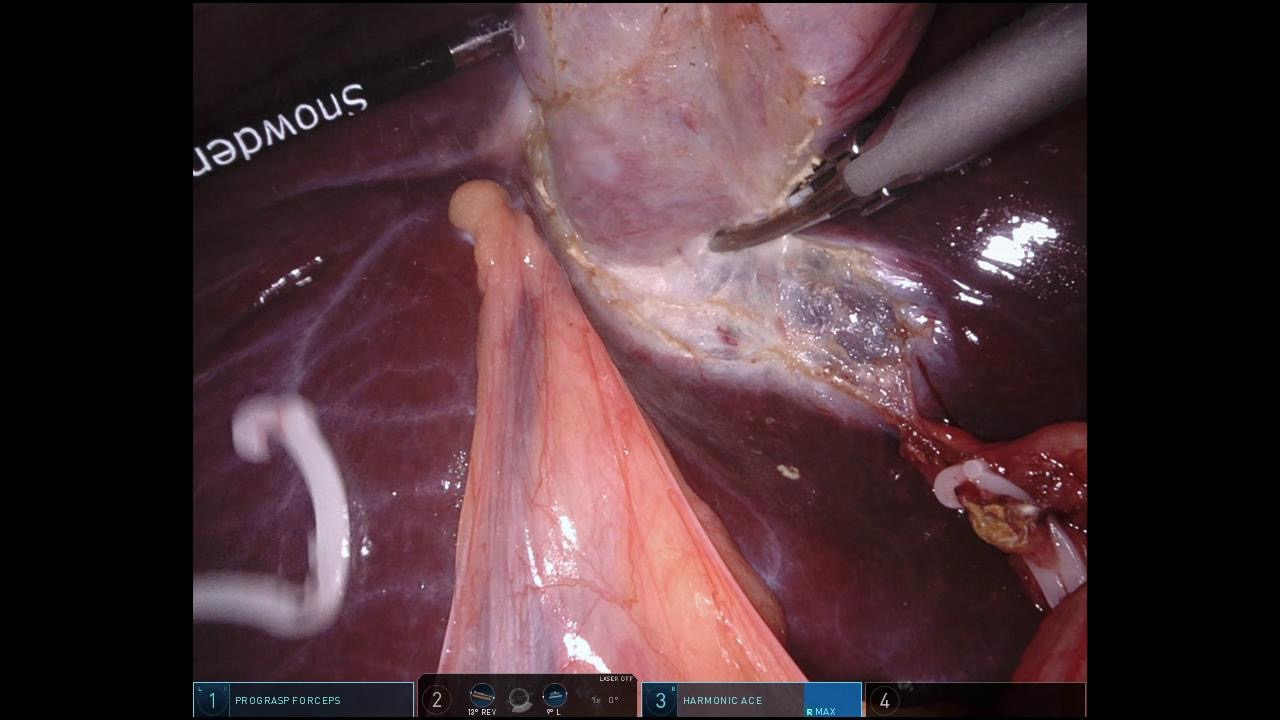

Endoscopic video recordings are widely used in minimally invasive robot-assisted surgery, but when the endoscope is outside the patient's body, it can capture irrelevant segments that may contain sensitive information. To address this, we propose a framework that accurately detects out-of-body frames in surgical videos by leveraging self-supervision with minimal data labels. We use a massive amount of unlabeled endoscopic images to learn meaningful representations in a self-supervised manner. Our approach, which involves pre-training on an auxiliary task and fine-tuning with limited supervision, outperforms previous methods for detecting out-of-body frames in surgical videos captured from da Vinci X and Xi surgical systems. The average F1 scores range from 96.00 to 98.02. Remarkably, using only 5% of the training labels, our approach still maintains an average F1 score performance above 97, outperforming fully-supervised methods with 95% fewer labels. These results demonstrate the potential of our framework to facilitate the safe handling of surgical video recordings and enhance data privacy protection in minimally invasive surgery.

翻译:内窥镜视频记录在微创机器人辅助手术中广泛使用,但当内窥镜在患者体外时,它可能捕捉到包含敏感信息的无关片段。为了解决这个问题,我们提出了一个框架,通过利用少量数据标签的自我监督准确检测手术视频中的身体外帧。我们使用大量未标记的内窥镜图像以自监督方式学习有意义的表示。我们的方法包括在辅助任务上进行预训练并在有限的监督下进行微调,因此与以前的手术视频中检测身体外帧的方法相比表现更好。平均F1分数在96.00到98.02之间。值得注意的是,仅使用5%的训练标签,我们的方法仍可保持平均F1分数在97以上的性能,在使用较少标签的情况下优于全监督方法,标签数量减少了95%。这些结果证明了我们框架促进手术视频记录的安全处理,提高微创手术中数据隐私保护的潜力。