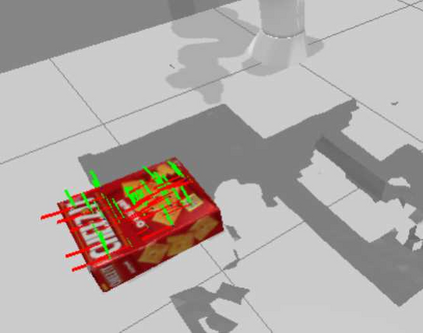

Robots need the ability to place objects in arbitrary, specific poses to rearrange the world and achieve various valuable tasks. Object reorientation plays a crucial role in this, as objects may not initially be oriented such that the robot can grasp them and then immediately place them in a specific goal pose. In this work, we present a vision-based manipulation system, ReorientBot, which consists of 1) visual scene understanding with pose estimation and volumetric reconstruction using an onboard RGB-D camera; 2) learned waypoint selection for successful and efficient motion generation for reorientation; and 3) traditional motion planning to generate a collision-free trajectory from the selected waypoints. We evaluate our method using the YCB objects in both simulation and the real world, achieving 93% overall success, an 81% improvement in success rate, and a 22% improvement in execution time compared with a heuristic approach. We also demonstrate extended multi-object rearrangement, showing the general capability of the system.
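The abstract describes a pipeline in which learned waypoint selection feeds traditional motion planning. The sketch below illustrates only how those two stages might fit together, under explicit assumptions: the learned model is replaced by two caller-supplied scoring functions (`predict_success` and `predict_time`, both hypothetical), and the "traditional" planner is a simple straight-line joint-space interpolation with collision checks, not the planner used in ReorientBot. Every name here (`Candidate`, `select_candidate`, `plan_through`, `reorient`) is hypothetical and for illustration only.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Sequence, Tuple

Config = Tuple[float, ...]  # a joint-space configuration


@dataclass(frozen=True)
class Candidate:
    """Hypothetical reorientation plan: a named sequence of joint-space waypoints."""
    name: str
    waypoints: Tuple[Config, ...]


def select_candidate(
    candidates: Sequence[Candidate],
    predict_success: Callable[[Candidate], float],
    predict_time: Callable[[Candidate], float],
    min_success: float = 0.5,
) -> Optional[Candidate]:
    """Keep candidates scored as likely to succeed, then pick the fastest one.

    The two scorers stand in for a learned model (an assumption of this sketch).
    """
    likely = [c for c in candidates if predict_success(c) >= min_success]
    if not likely:
        return None
    return min(likely, key=predict_time)


def interpolate(a: Config, b: Config, steps: int) -> List[Config]:
    """Evenly spaced configurations from a (exclusive) to b (inclusive)."""
    return [
        tuple(x + (y - x) * k / steps for x, y in zip(a, b))
        for k in range(1, steps + 1)
    ]


def plan_through(
    start: Config,
    waypoints: Sequence[Config],
    in_collision: Callable[[Config], bool],
    steps: int = 10,
) -> Optional[List[Config]]:
    """Connect start -> waypoints with straight joint-space segments.

    A placeholder for a real planner (e.g. a sampling-based one); returns
    None as soon as any interpolated configuration is in collision.
    """
    if in_collision(start):
        return None
    path = [start]
    for goal in waypoints:
        for q in interpolate(path[-1], goal, steps):
            if in_collision(q):
                return None
            path.append(q)
    return path


def reorient(
    start: Config,
    candidates: Sequence[Candidate],
    predict_success: Callable[[Candidate], float],
    predict_time: Callable[[Candidate], float],
    in_collision: Callable[[Config], bool],
) -> Optional[Tuple[Candidate, List[Config]]]:
    """Select waypoints, plan through them, and fall back to the next candidate on failure."""
    remaining = list(candidates)
    while remaining:
        choice = select_candidate(remaining, predict_success, predict_time)
        if choice is None:
            return None
        path = plan_through(start, choice.waypoints, in_collision)
        if path is not None:
            return choice, path
        remaining.remove(choice)
    return None


if __name__ == "__main__":
    # Toy 2-DOF example: a small box obstacle around (0.5, 0.5) in joint space.
    blocked = lambda q: abs(q[0] - 0.5) < 0.1 and abs(q[1] - 0.5) < 0.1
    direct = Candidate("through_obstacle", ((1.0, 1.0),))
    detour = Candidate("around_obstacle", ((1.0, 0.0), (1.0, 1.0)))
    success = {"through_obstacle": 0.9, "around_obstacle": 0.8}
    duration = {"through_obstacle": 2.0, "around_obstacle": 3.0}
    result = reorient((0.0, 0.0), [direct, detour],
                      lambda c: success[c.name], lambda c: duration[c.name], blocked)
    print(result[0].name, len(result[1]))  # → around_obstacle 21
```

In this toy run the scorer prefers the faster direct candidate, but its straight-line path collides with the obstacle, so the sketch falls back to the slower detour. Separating "which waypoints" from "how to connect them" mirrors the division of labor the abstract describes, though the real system's scoring and planning are far richer than these stand-ins.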