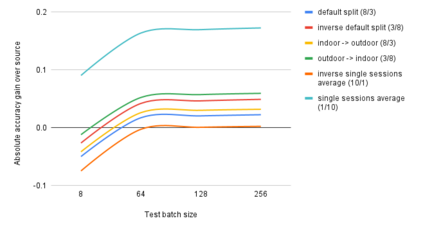

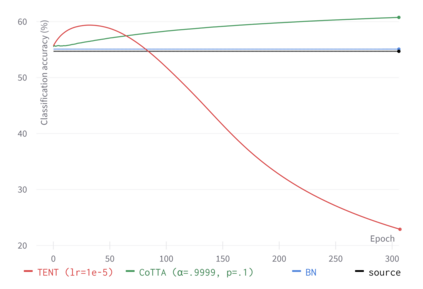

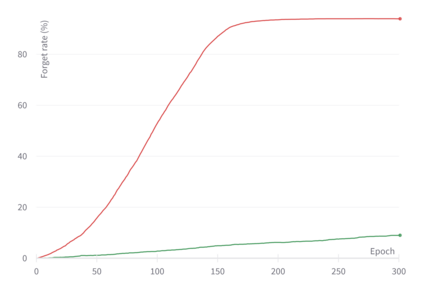

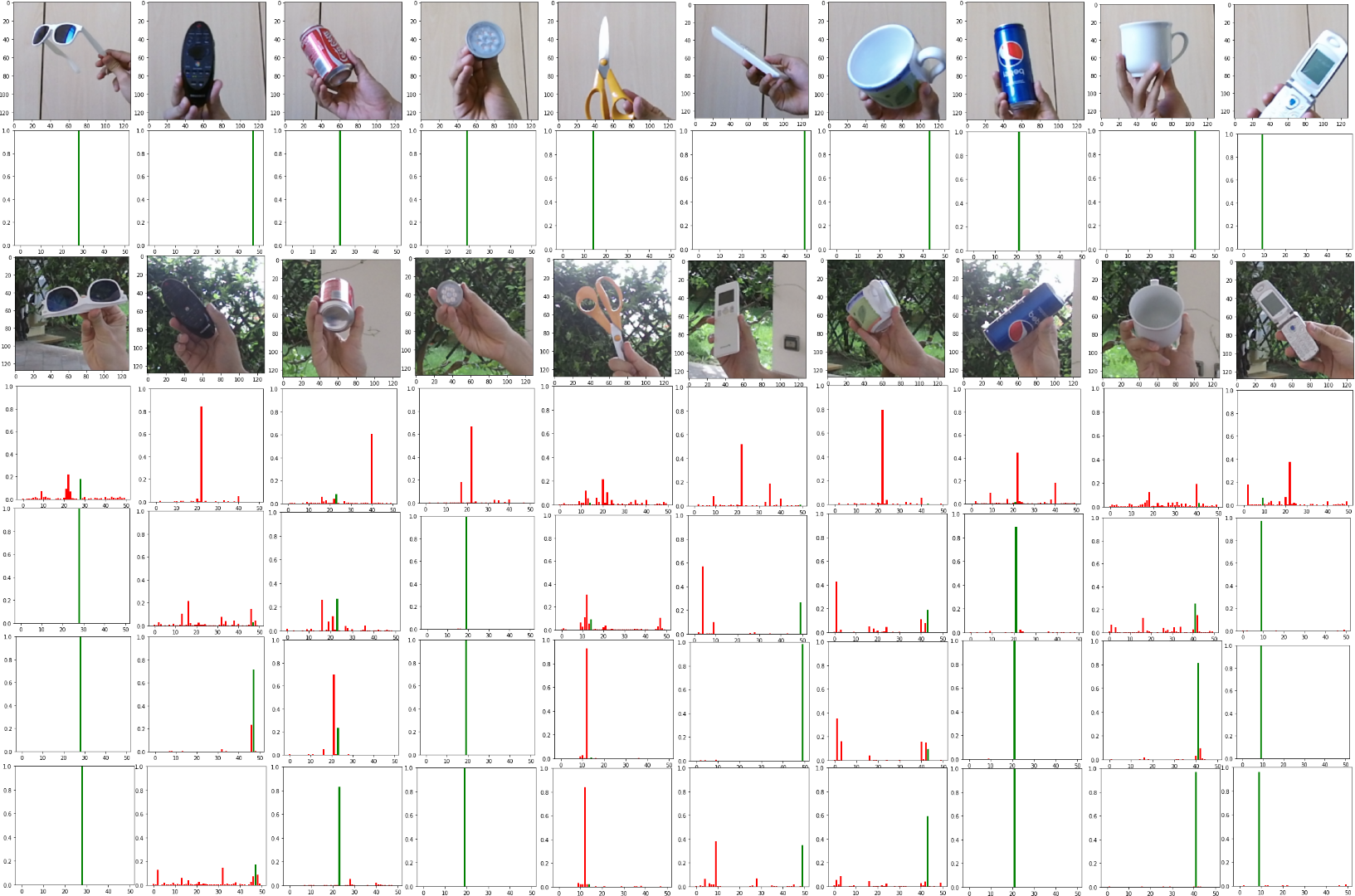

In this paper, our goal is to adapt a pre-trained convolutional neural network (CNN) to domain shifts at test time. We do so continually, on the incoming stream of test batches and without labels. Existing literature mostly operates on artificial shifts obtained via adversarial perturbations of a test image. Motivated by this gap, we evaluate the state of the art on two realistic and challenging sources of domain shift: contextual and semantic shifts. Contextual shifts correspond to environment types; for example, a model pre-trained on an indoor context has to adapt to an outdoor context on CORe-50 [7]. Semantic shifts correspond to capture types; for example, a model pre-trained on natural images has to adapt to cliparts, sketches and paintings on DomainNet [10]. Our analysis includes recent techniques such as Prediction-Time Batch Normalization (BN) [8], Test Entropy Minimization (TENT) [16] and Continual Test-Time Adaptation (CoTTA) [17]. Our findings are three-fold: i) test-time adaptation methods perform better and forget less on contextual shifts than on semantic shifts; ii) TENT outperforms other methods on short-term adaptation, whereas CoTTA outperforms other methods on long-term adaptation; iii) BN is the most reliable and robust.
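To make two of the compared ideas concrete, here is a minimal pure-Python sketch, not the authors' implementation. It assumes that prediction-time BN amounts to normalizing features with the statistics of the current test batch, and that TENT's label-free objective is the mean entropy of the softmax predictions on that batch. The function names (`batch_normalize`, `prediction_entropy`, `mean_batch_entropy`) are hypothetical and chosen only for illustration; a real setup would use a deep learning framework and update the model's parameters by gradient descent on this objective.

```python
import math


def softmax(logits):
    """Numerically stable softmax over one list of logits."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def prediction_entropy(logits):
    """Shannon entropy (in nats) of the softmax prediction for one sample."""
    probs = softmax(logits)
    return -sum(p * math.log(p) for p in probs if p > 0.0)


def mean_batch_entropy(batch_logits):
    """Label-free objective (assumed here): average entropy over a test batch."""
    return sum(prediction_entropy(l) for l in batch_logits) / len(batch_logits)


def batch_normalize(features, eps=1e-5):
    """Normalize each channel using the mean and variance of the current
    test batch, rather than statistics stored from training (assumption)."""
    n = len(features)
    dims = len(features[0])
    means = [sum(row[d] for row in features) / n for d in range(dims)]
    variances = [
        sum((row[d] - means[d]) ** 2 for row in features) / n
        for d in range(dims)
    ]
    return [
        [(row[d] - means[d]) / math.sqrt(variances[d] + eps) for d in range(dims)]
        for row in features
    ]


if __name__ == "__main__":
    # Toy test batch: two samples, two feature channels with different scales.
    batch = [[1.0, 10.0], [3.0, 30.0]]
    print(batch_normalize(batch))
    # A confident prediction has lower entropy than an uncertain one.
    print(prediction_entropy([10.0, 0.0, 0.0]), prediction_entropy([0.0, 0.0, 0.0]))
```

Neither function needs labels, which is what makes both usable on an unlabeled stream of test batches.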

