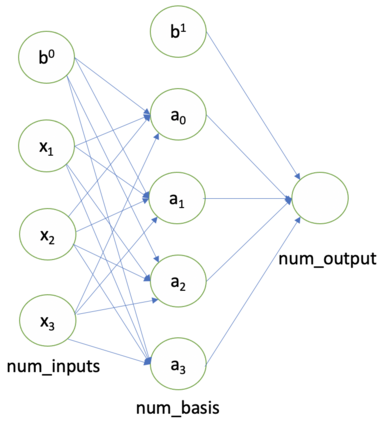

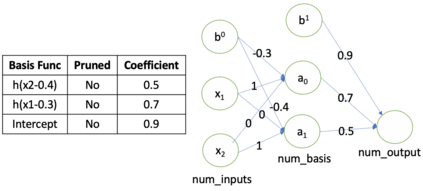

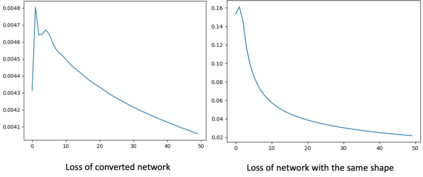

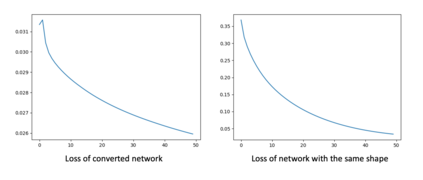

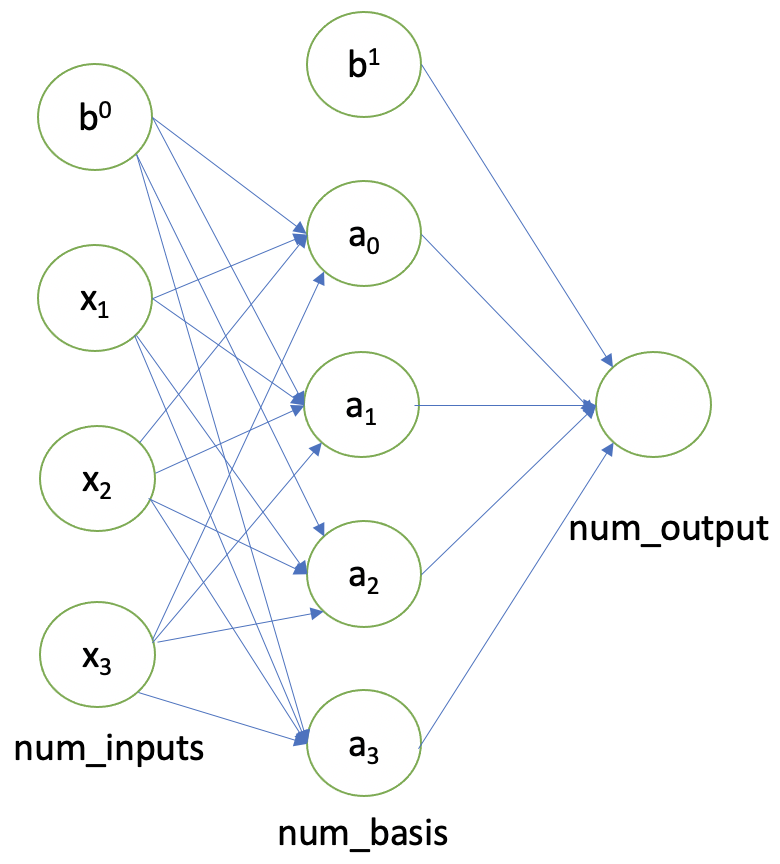

The fully connected (FC) layer, one of the most fundamental modules in artificial neural networks (ANNs), is often considered difficult and inefficient to train. One reason is the risk of overfitting caused by its large number of parameters. Building on previous work that studies ANNs from a linear-spline perspective, we propose a spline-based approach that eases the difficulty of training FC layers. Given a dataset, we first obtain a continuous piecewise linear (CPWL) fit through spline methods such as multivariate adaptive regression splines (MARS). Next, we construct an ANN model from the linear spline model and continue to train it on the dataset using gradient descent optimization algorithms. We compare against standard ANN training, which uses random parameter initialization followed by gradient descent optimization. Our experimental results and theoretical analysis show that our approach reduces the computational cost, accelerates the convergence of FC layers, and significantly increases the interpretability of the resulting model (the FC layers).
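The two-stage idea (a CPWL spline fit, then an FC network initialized from that fit and refined by gradient descent) can be illustrated with a minimal NumPy sketch. This is not the authors' implementation, and the sketch makes several assumptions not stated in the abstract:

- `fit_hinge_spline` is a hypothetical stand-in for MARS. It uses fixed quantile knots, reflected hinge pairs, and additive degree-1 terms only, and it solves by least squares. It does not use MARS's adaptive forward and pruning passes.
- The network has a single hidden ReLU layer.
- Training is plain full-batch gradient descent on mean squared error.

All function names are illustrative. The construction rests on one observation: each hinge term max(0, s(x_j - t)) is exactly one ReLU unit with weight vector s·e_j and bias -s·t. The spline coefficients therefore become the output-layer weights, and the network reproduces the spline before any training step.

```python
import numpy as np


def hinge_basis(X, terms):
    """Evaluate hinge functions max(0, s * (x_j - t)) for each (j, t, s) term."""
    return np.column_stack([np.maximum(0.0, s * (X[:, j] - t)) for j, t, s in terms])


def fit_hinge_spline(X, y, knots_per_feature=4):
    """Hypothetical stand-in for MARS: fixed quantile knots, reflected hinge pairs,
    least squares. Real MARS picks knots adaptively (forward pass) and prunes terms
    (backward pass); only additive degree-1 terms are kept here so the fit is CPWL."""
    terms = []  # each term is (feature index j, knot t, sign s)
    for j in range(X.shape[1]):
        for t in np.quantile(X[:, j], np.linspace(0.2, 0.8, knots_per_feature)):
            terms += [(j, float(t), 1.0), (j, float(t), -1.0)]
    A = np.column_stack([np.ones(len(X)), hinge_basis(X, terms)])
    coef, *_ = np.linalg.lstsq(A, y, rcond=None)  # minimum-norm least-squares solution
    return terms, coef[0], coef[1:]


def spline_to_relu_net(terms, intercept, coefs, n_features):
    """Map each hinge term to one ReLU unit: max(0, s*x_j - s*t) = ReLU(w.x + b)."""
    W1 = np.zeros((len(terms), n_features))
    b1 = np.zeros(len(terms))
    for k, (j, t, s) in enumerate(terms):
        W1[k, j] = s    # weight vector is s times the j-th unit vector
        b1[k] = -s * t  # bias places the ReLU kink at the knot t
    # Output layer weights and bias are the spline coefficients and intercept.
    return {"W1": W1, "b1": b1, "W2": coefs.reshape(1, -1), "b2": np.array([intercept])}


def forward(p, X):
    H = np.maximum(0.0, X @ p["W1"].T + p["b1"])  # hidden FC layer with ReLU
    return (H @ p["W2"].T + p["b2"]).ravel(), H


def mse(p, X, y):
    pred, _ = forward(p, X)
    return float(np.mean((pred - y) ** 2))


def train(p, X, y, lr=1e-2, steps=200):
    """Plain full-batch gradient descent on mean squared error (works on a copy)."""
    p = {k: v.copy() for k, v in p.items()}
    for _ in range(steps):
        pred, H = forward(p, X)
        g = 2.0 * (pred - y) / len(y)                # dLoss/dpred
        dZ = np.outer(g, p["W2"].ravel()) * (H > 0)  # backpropagate through ReLU
        grads = {
            "W2": (g @ H).reshape(1, -1),
            "b2": np.array([g.sum()]),
            "W1": dZ.T @ X,
            "b1": dZ.sum(axis=0),
        }
        for k in p:
            p[k] -= lr * grads[k]
    return p


# Synthetic demo data (illustrative only, not from the paper).
rng = np.random.default_rng(0)
X = rng.uniform(-2.0, 2.0, size=(200, 2))
y = np.abs(X[:, 0]) + 0.5 * np.maximum(0.0, X[:, 1] - 0.5) + 0.05 * rng.standard_normal(200)

terms, b0, c = fit_hinge_spline(X, y)
net = spline_to_relu_net(terms, b0, c, X.shape[1])
spline_pred = b0 + hinge_basis(X, terms) @ c
net_pred, _ = forward(net, X)   # identical to the spline before any training
loss_before = mse(net, X, y)
tuned = train(net, X, y)        # gradient descent continues from the spline
loss_after = mse(tuned, X, y)
print(np.allclose(net_pred, spline_pred), loss_before, loss_after)
```

At initialization, each hidden unit still corresponds to one feature and one knot of the spline. This gives one concrete reading of the interpretability claim. The sketch makes no attempt to reproduce the cost or convergence results reported in the abstract.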

翻译:完全连接(FC)层是人工神经网络(ANN)中最基本的模块之一,通常被认为是培训困难和低效的,因为问题包括其大量参数造成的超编风险。根据以前从线性样条角度对ANN进行的研究,我们提出了一个基于样板的方法,以减轻培训FC层的困难。根据一些数据集,我们首先通过多变量适应性回归样条等样条方法获得连续的片断线性线性(CPWL)。接下来,我们从线性样条模型中建立一个ANN模型,并继续利用梯度下降优化算法对ANN模型进行数据集培训。我们的实验结果和理论分析表明,我们的方法降低了计算成本,加快了FC层的趋同,并大大提高了由此产生的模型(FC层)与随机参数初始化和梯度下降优化的标准培训的可解释性。