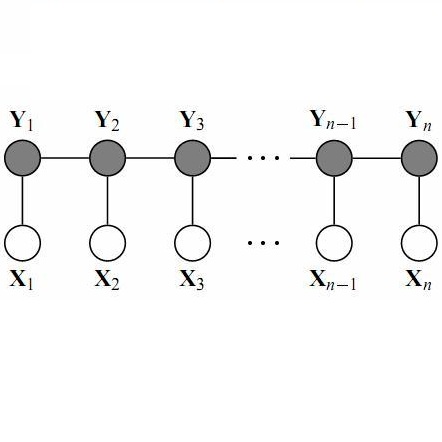

We propose a novel method for predicting image labels that fuses image content descriptors with the social media context of each image. An image uploaded to a social media site such as Flickr often comes with meaningful associated information, such as comments and other images the user has uploaded, which is complementary to the pixel content and helpful in predicting labels. Prediction challenges such as ImageNet~\cite{imagenet_cvpr09} and MSCOCO~\cite{LinMBHPRDZ:ECCV14} use only pixels, while other methods make predictions purely from social media context \cite{McAuleyECCV12}. Our method is based on a fully connected Conditional Random Field (CRF) framework in which each node is an image. The framework consists of two deep Convolutional Neural Networks (CNNs) and one Recurrent Neural Network (RNN) that together model the textual and visual information of each node (image), while the edge weights of the CRF graph encode textual similarity and link-based metadata such as user sets and image groups. We formulate the CRF as an RNN for both learning and inference, and incorporate a weighted ranking loss and a cross-entropy loss into the CRF parameter optimization to handle class imbalance in the training data. We evaluate the proposed approach on the MIR-9K dataset, where it outperforms current state-of-the-art approaches.
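To make the graph side of the method more concrete, the following is a minimal pure-Python sketch, not the authors' formulation. It assumes independent binary labels per tag, a Potts-style pairwise penalty `mu * w[i][j] * [y_i != y_j]`, and edge weights built as a weighted sum of per-relation similarity matrices (for example textual similarity, shared user sets, shared groups). The unary logits stand in for the outputs of the CNN/RNN node models. Under these assumptions each mean-field update is `logit q_i = u_i + mu * sum_j w_ij (2 q_j - 1)`, and each unrolled iteration plays the role of one step of the CRF-as-RNN. The function names, the parameters `alphas`, `mu`, `pos_weight`, `margin`, `lam`, and the particular per-image averaging in the ranking loss are all hypothetical choices made for illustration.

```python
import math

def sigmoid(x):
    # Numerically stable logistic function.
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)

def edge_weights(sims, alphas):
    """Hypothetical edge model: w[i][j] = sum_k alphas[k] * sims[k][i][j].
    Each sims[k] is an n x n similarity/indicator matrix (e.g. text
    similarity, same user set, same group). Self-edges are zeroed."""
    n = len(sims[0])
    w = [[0.0] * n for _ in range(n)]
    for alpha, s in zip(alphas, sims):
        for i in range(n):
            for j in range(n):
                if i != j:
                    w[i][j] += alpha * s[i][j]
    return w

def mean_field(unary_logits, w, mu=1.0, iters=5):
    """Unrolled mean-field inference on a fully connected CRF with binary
    labels and a Potts penalty. q[i][l] is the marginal probability that
    image i carries label l; every iteration is one recurrent step."""
    n, num_labels = len(unary_logits), len(unary_logits[0])
    q = [[sigmoid(u) for u in row] for row in unary_logits]
    for _ in range(iters):
        # Synchronous update: the comprehension reads the previous q.
        q = [
            [
                sigmoid(unary_logits[i][l]
                        + mu * sum(w[i][j] * (2.0 * q[j][l] - 1.0)
                                   for j in range(n) if j != i))
                for l in range(num_labels)
            ]
            for i in range(n)
        ]
    return q

def weighted_bce(q, y, pos_weight, eps=1e-7):
    """Cross-entropy with positive labels up-weighted by pos_weight
    (an assumed imbalance correction, e.g. #negatives / #positives)."""
    total, count = 0.0, 0
    for q_row, y_row in zip(q, y):
        for p, t in zip(q_row, y_row):
            p = min(max(p, eps), 1.0 - eps)
            total -= pos_weight * t * math.log(p) + (1 - t) * math.log(1.0 - p)
            count += 1
    return total / count

def ranking_loss(q, y, margin=0.1):
    """Pairwise hinge: each positive label of an image should score at least
    `margin` above each negative one. Pairs are averaged per image so that
    heavily tagged images do not dominate (one possible weighting)."""
    total, images = 0.0, 0
    for q_row, y_row in zip(q, y):
        pos = [p for p, t in zip(q_row, y_row) if t == 1]
        neg = [p for p, t in zip(q_row, y_row) if t == 0]
        if not pos or not neg:
            continue
        pairs = [max(0.0, margin - p + n) for p in pos for n in neg]
        total += sum(pairs) / len(pairs)
        images += 1
    return total / images if images else 0.0

def total_loss(q, y, pos_weight=1.0, lam=1.0):
    # Hypothetical combination of the two losses named in the abstract.
    return weighted_bce(q, y, pos_weight) + lam * ranking_loss(q, y)
```

For instance, with two linked images where only the first has strong visual evidence for a tag (`mean_field([[5.0], [0.0]], edge_weights([[[1.0, 1.0], [1.0, 1.0]]], [1.0]))`), the second image's marginal for that tag rises above 0.5 through the edge, whereas without the edge it stays at 0.5. In an actual CRF-as-RNN setup, the edge coefficients, `mu`, and the node networks would be trained jointly by backpropagating the loss through the unrolled iterations; this sketch shows only the forward computation.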
