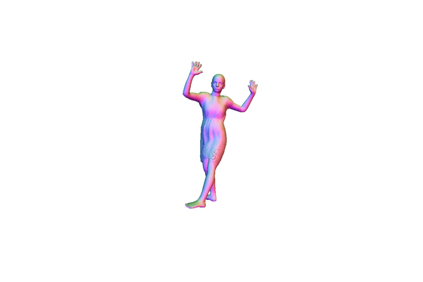

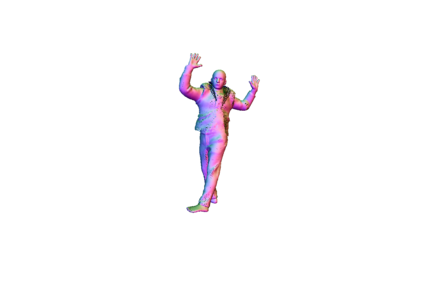

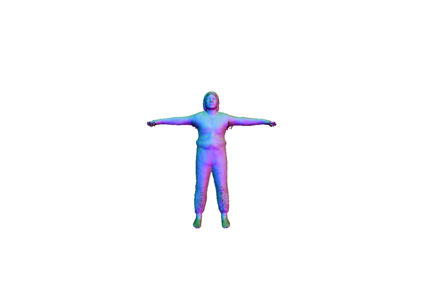

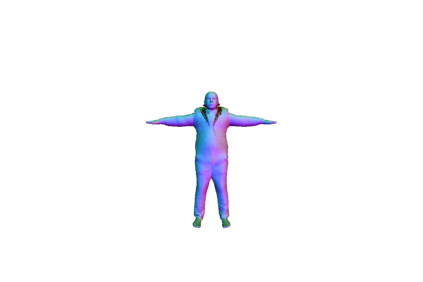

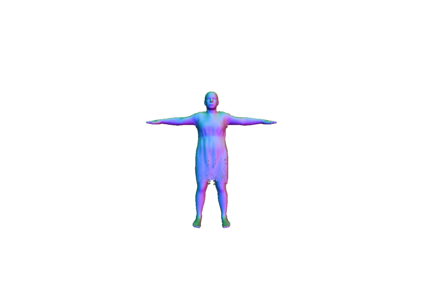

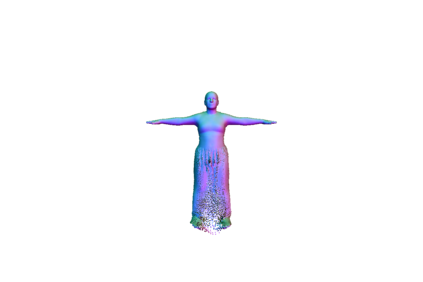

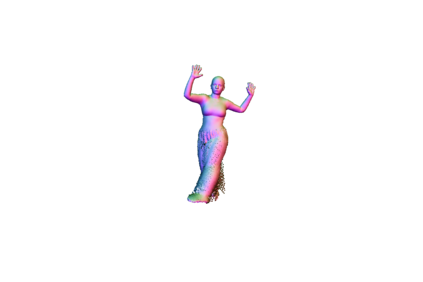

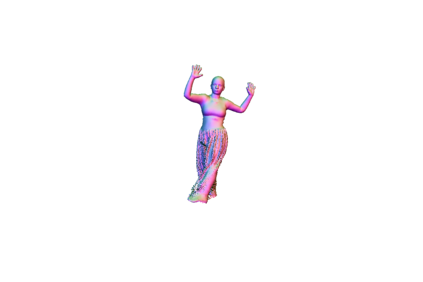

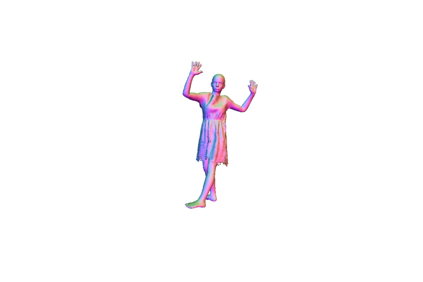

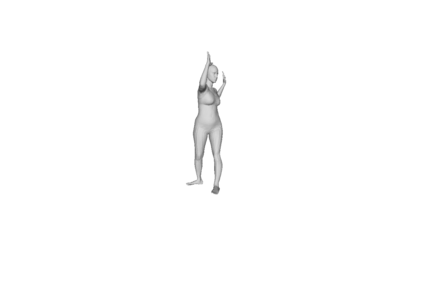

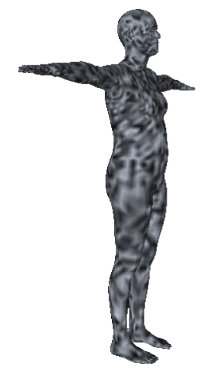

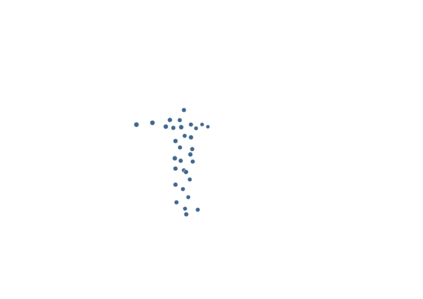

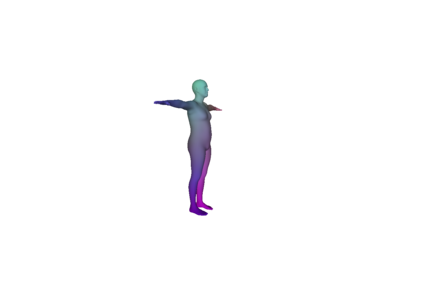

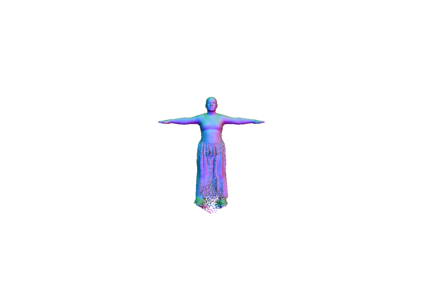

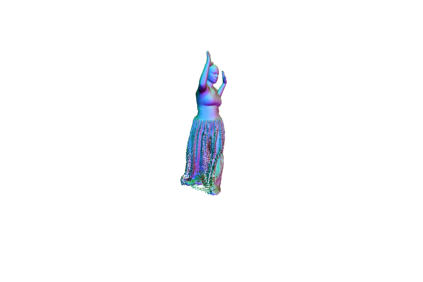

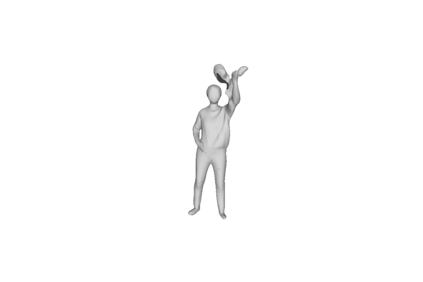

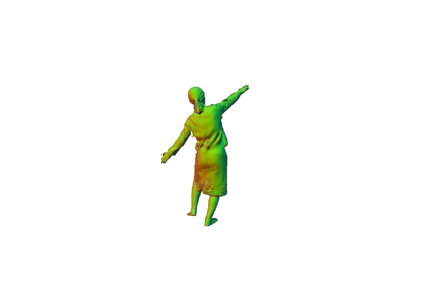

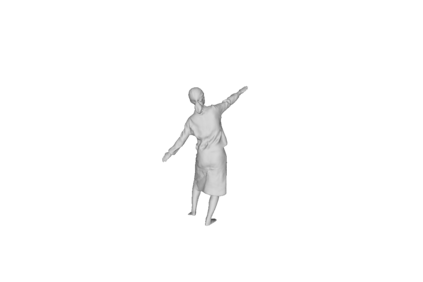

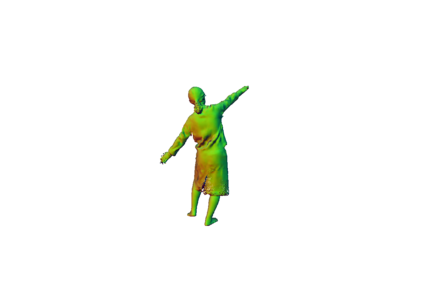

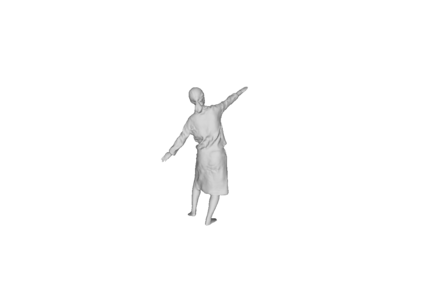

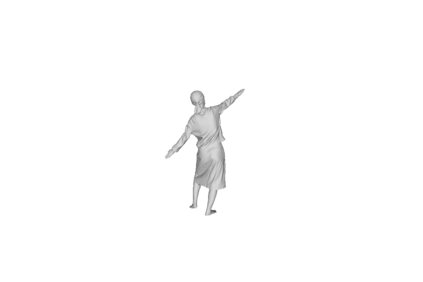

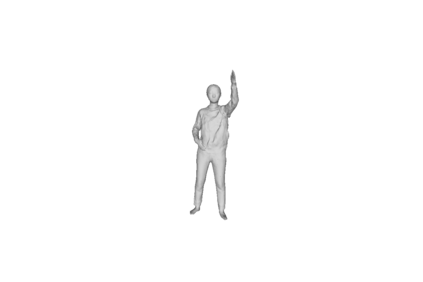

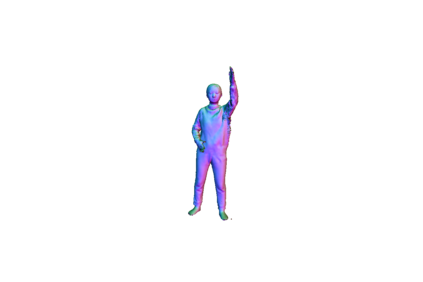

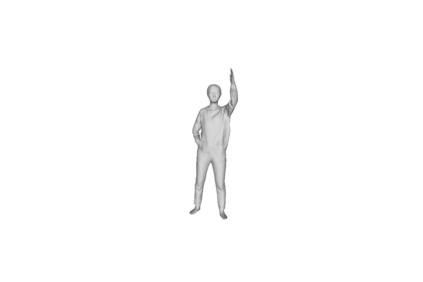

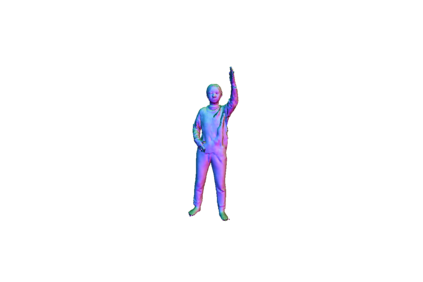

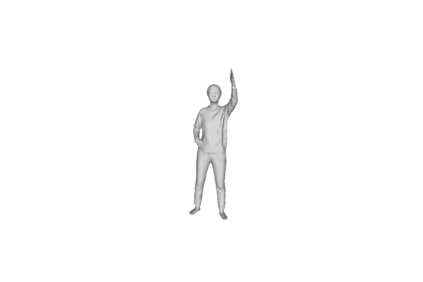

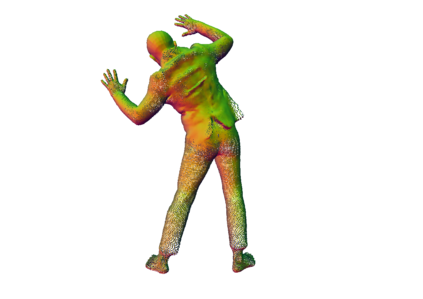

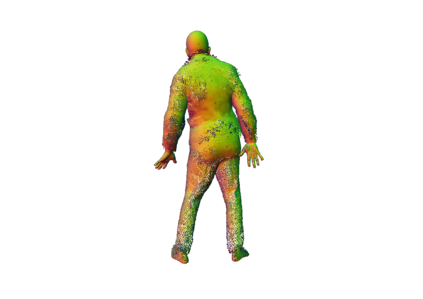

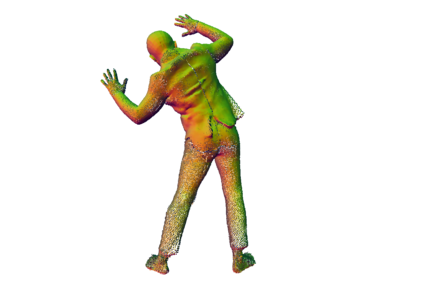

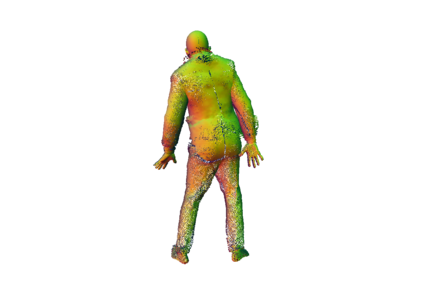

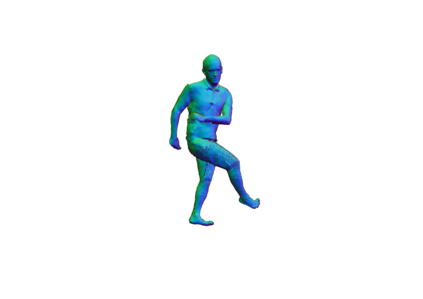

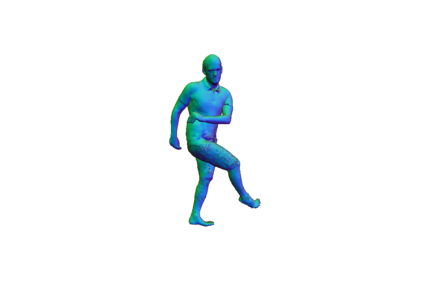

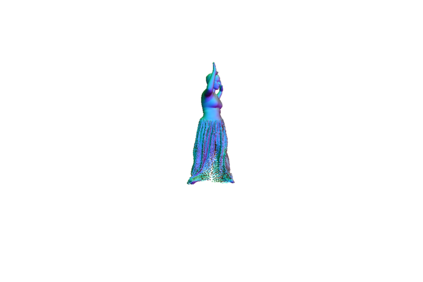

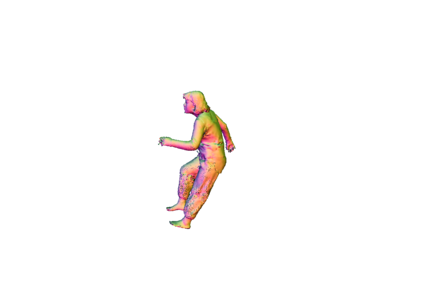

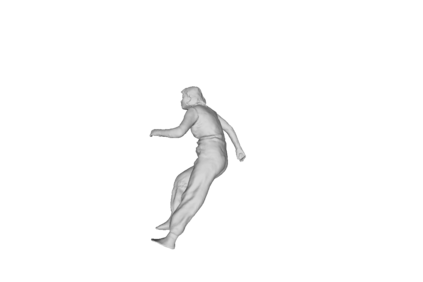

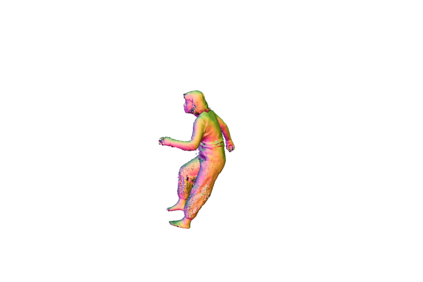

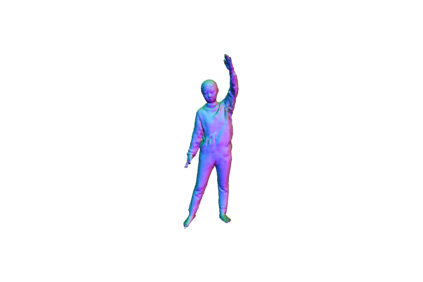

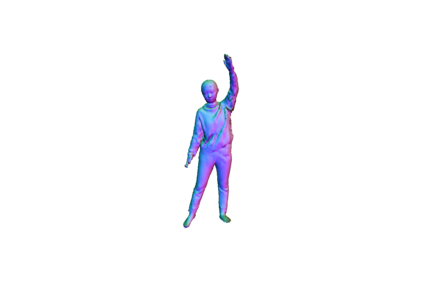

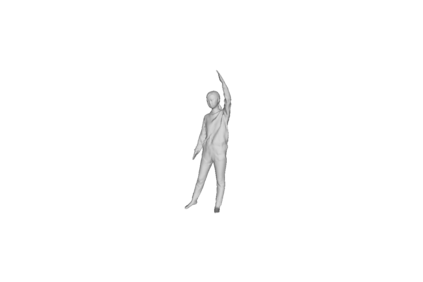

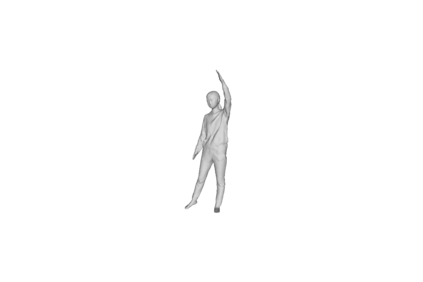

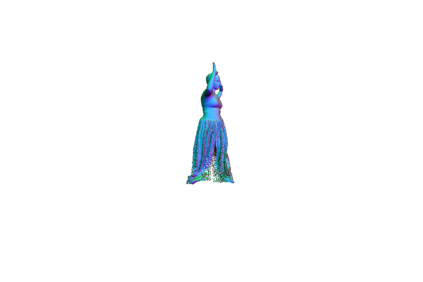

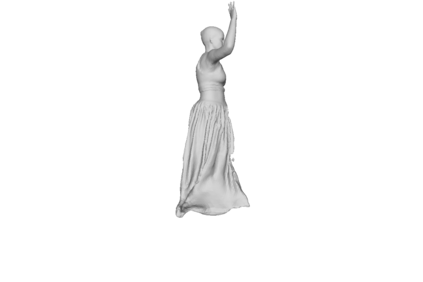

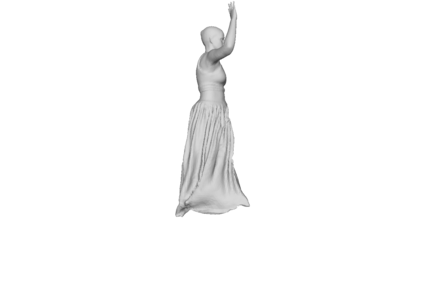

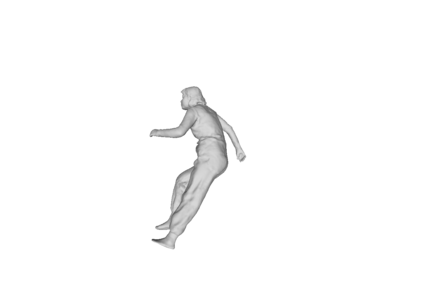

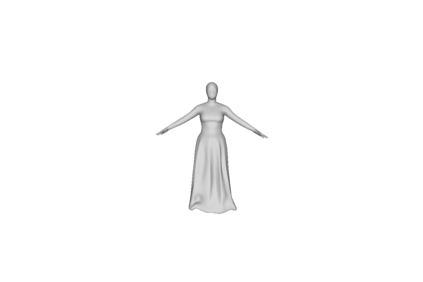

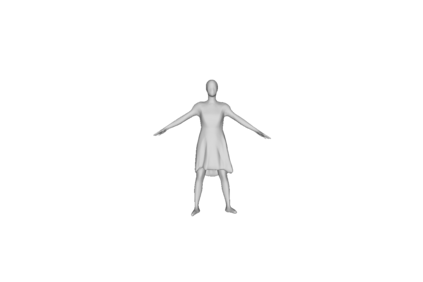

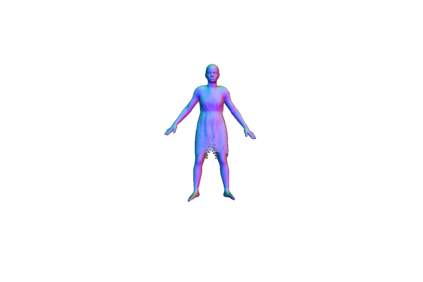

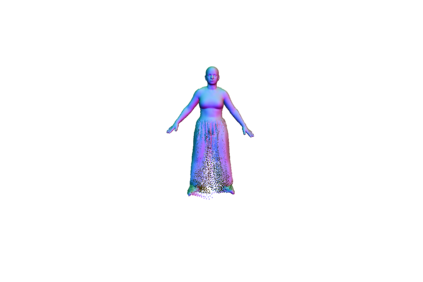

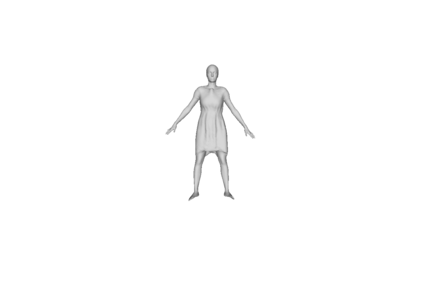

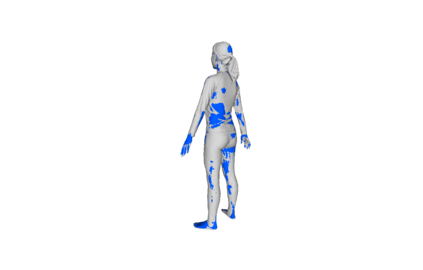

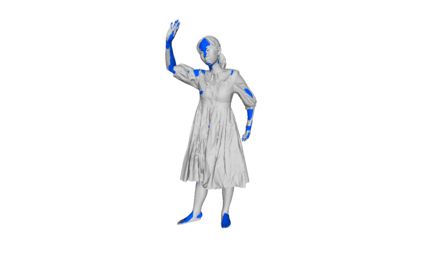

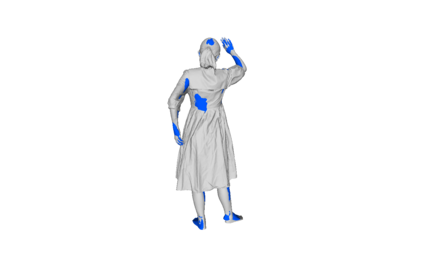

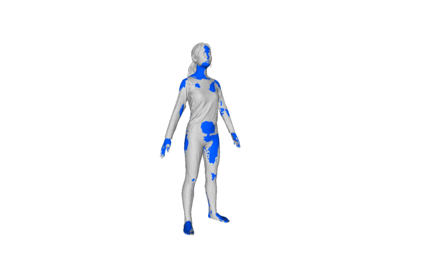

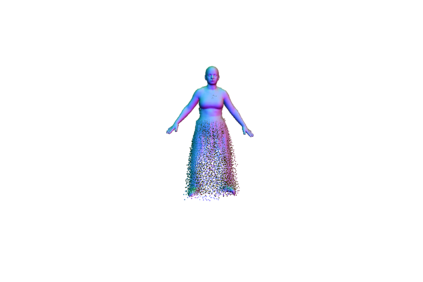

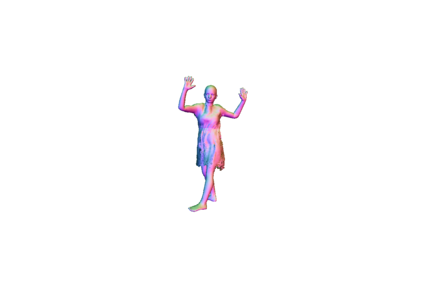

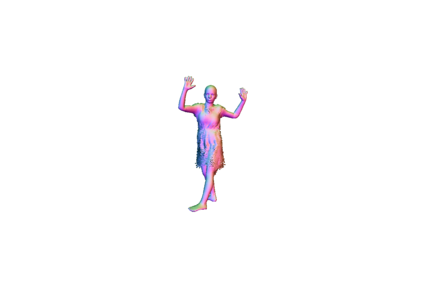

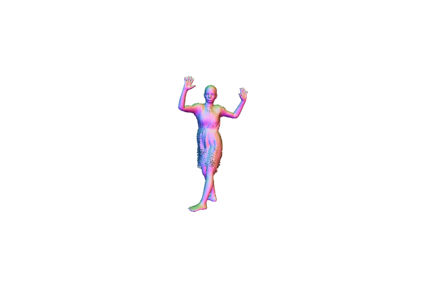

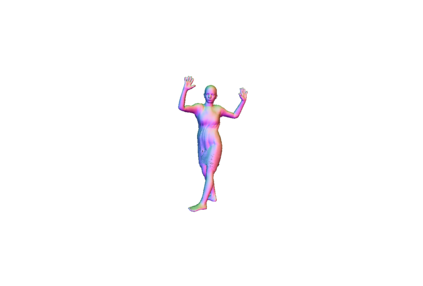

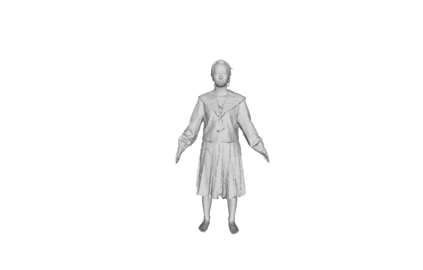

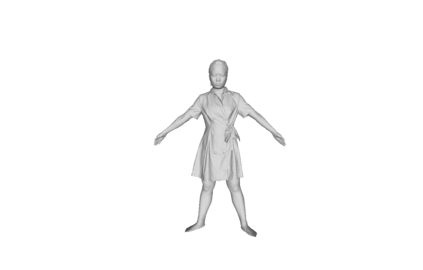

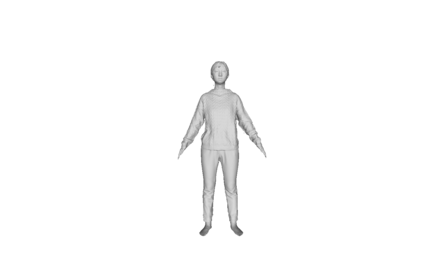

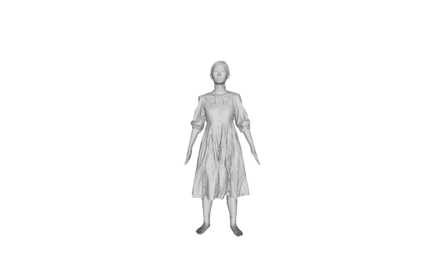

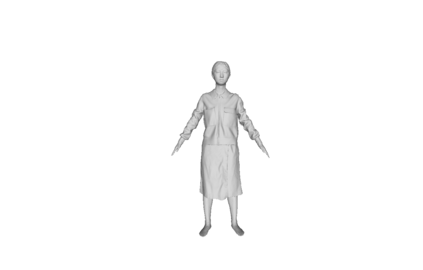

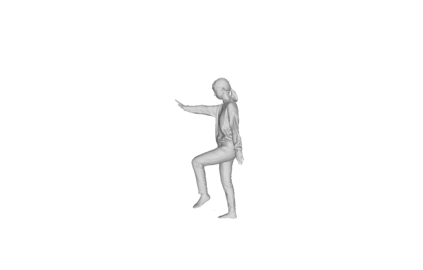

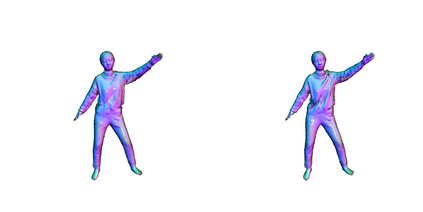

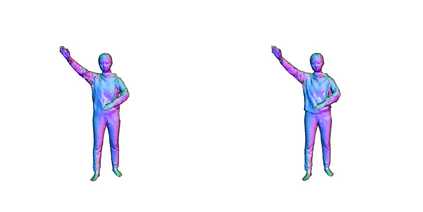

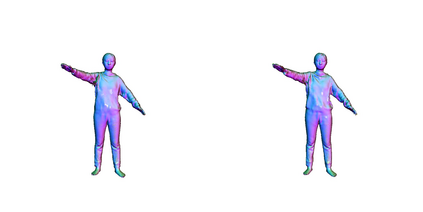

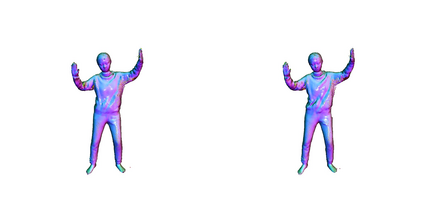

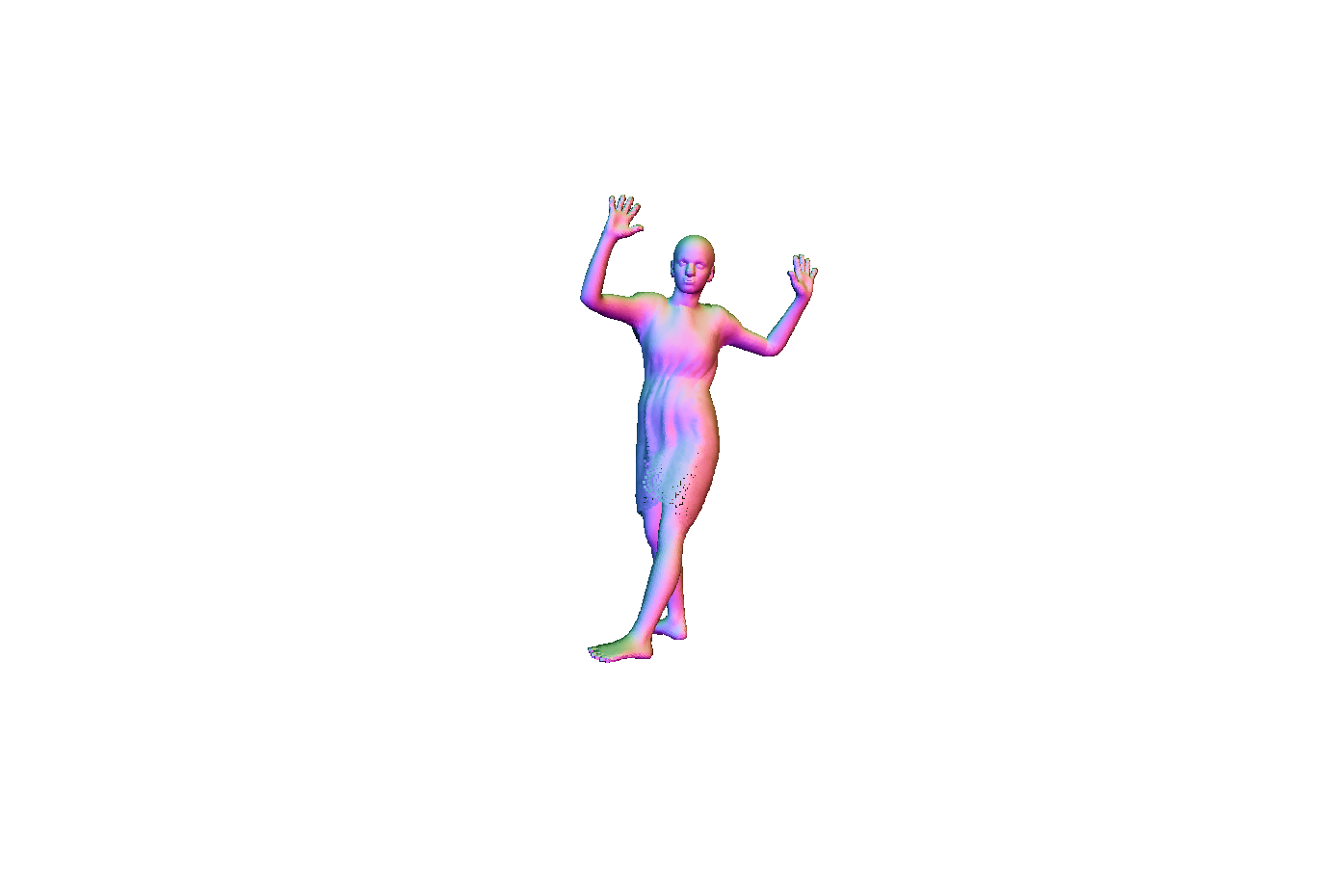

Creating animatable avatars from static scans requires modeling clothing deformations across different poses. Existing learning-based methods typically add pose-dependent deformations on top of a minimally-clothed mesh template or a learned implicit template, which either limits their ability to capture details or hinders end-to-end learning. In this paper, we revisit point-based solutions and propose to decompose explicit garment-related templates and then add pose-dependent wrinkles to them. In this way, the clothing deformations are disentangled, so that the pose-dependent wrinkles can be better learned and applied to unseen poses. Additionally, to tackle the seam artifacts in recent state-of-the-art point-based methods, we propose to learn point features on a body surface, which establishes a continuous and compact feature space for capturing fine-grained, pose-dependent clothing geometry. To facilitate research in this field, we also introduce a high-quality scan dataset of humans in real-world clothing. Our approach is validated on two existing datasets and our newly introduced dataset, showing better clothing deformation results in unseen poses. The project page with code and dataset can be found at https://www.liuyebin.com/closet.
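To make the two ideas in the abstract more concrete, here is a minimal pure-Python sketch. It is not the authors' implementation, and every name in it (`interpolate_feature`, `linear_decode`, `clothed_point`, the weight matrices and `pose_code`) is hypothetical. It assumes (1) that a clothed point is the body-surface point plus a pose-independent garment-template offset plus a pose-dependent wrinkle offset, and (2) that "point features on a body surface" can be illustrated by storing features on body-mesh vertices and interpolating them barycentrically. Tiny linear maps stand in for the learned decoders.

```python
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def interpolate_feature(vertex_feats: Sequence[Sequence[float]],
                        bary: Tuple[float, float, float]) -> List[float]:
    """Barycentric interpolation of per-vertex features inside one body triangle.

    Features live on shared mesh vertices, so two triangles meeting at an edge
    yield identical features along that edge: the feature field has no seams,
    unlike a UV map that is cut into separate charts.
    """
    w0, w1, w2 = bary
    if min(bary) < 0.0 or abs(w0 + w1 + w2 - 1.0) > 1e-9:
        raise ValueError("bary must be non-negative and sum to 1")
    f0, f1, f2 = vertex_feats
    return [w0 * a + w1 * b + w2 * c for a, b, c in zip(f0, f1, f2)]


def linear_decode(weights: Sequence[Sequence[float]], x: Sequence[float]) -> Vec3:
    """Hypothetical stand-in for a learned decoder: a 3 x D linear map."""
    out = [sum(w * v for w, v in zip(row, x)) for row in weights]
    return (out[0], out[1], out[2])


def clothed_point(body_point: Vec3,
                  feature: Sequence[float],
                  pose_code: Sequence[float],
                  w_template: Sequence[Sequence[float]],
                  w_pose: Sequence[Sequence[float]]) -> Vec3:
    """Assumed decomposition: x = p_body + d_template(feature) + d_pose(feature, pose)."""
    # Pose-independent offset: the explicit garment-related template.
    d_template = linear_decode(w_template, feature)
    # Pose-dependent offset: wrinkles conditioned on the feature and the pose code.
    d_pose = linear_decode(w_pose, list(feature) + list(pose_code))
    x = [p + t + d for p, t, d in zip(body_point, d_template, d_pose)]
    return (x[0], x[1], x[2])


if __name__ == "__main__":
    tri_feats = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
    feat = interpolate_feature(tri_feats, (0.5, 0.5, 0.0))
    w_template = [[0.1, 0.0], [0.0, 0.1], [0.0, 0.0]]
    w_pose = [[0.0, 0.0, 0.2], [0.0, 0.0, 0.0], [0.0, 0.0, 0.0]]
    print(clothed_point((0.0, 1.0, 0.0), feat, [1.0], w_template, w_pose))
```

Keeping the two offsets separate is what lets the template term stay fixed when `pose_code` changes, so only the wrinkle term has to generalize to unseen poses.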

翻译:从静态扫描创建可动化的角色需要在不同动作中建模服装变形。现有的基于学习的方法通常在一个最小衣物网格模板或学习的隐式模板上添加动作相关变形,这些方法在捕捉细节方面存在局限性或者会阻碍端到端的学习。本文重新审视基于点的解决方案,并提出分解明确的与服装相关的模板,然后向其中添加动作相关的皱纹。这样可以将服装变形分离,使动作相关的皱纹可以更好地学习并应用于未见过的动作。另外,为了解决最近最先进的基于点的方法中的接缝问题,我们建议在身体表面上学习点特征,这建立了一个连续和紧凑的特征空间,以捕捉精细的和动作相关的服装几何。为了促进这个领域的研究,我们还介绍了一个高质量的人体扫描数据集,其中包含真实的穿着。我们的方法在两个现有数据集和我们新引入的数据集上得到了验证,展示了在未见过的动作中更好的服装变形结果。该项目页面包含代码和数据集,可在https://www.liuyebin.com/closet找到。