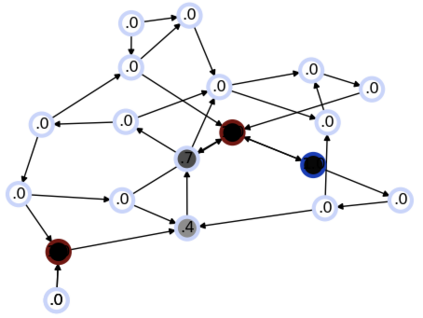

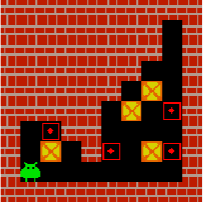

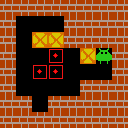

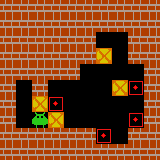

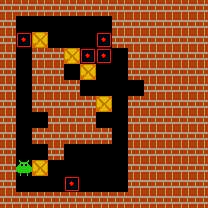

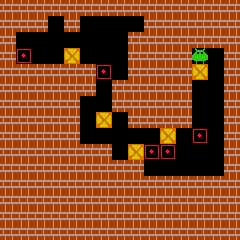

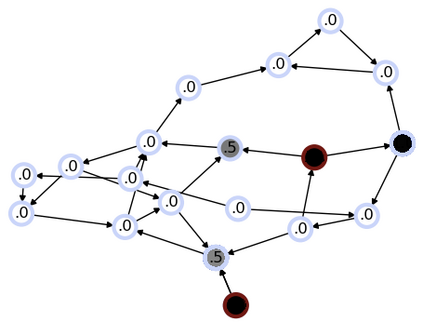

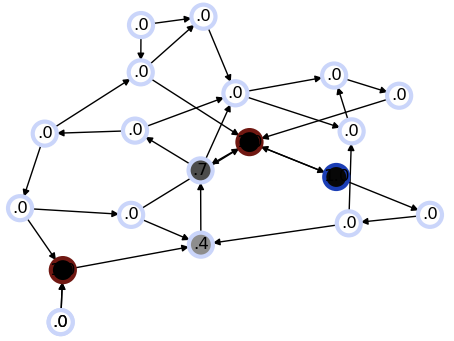

We focus on reinforcement learning (RL) in relational problems that are naturally defined in terms of objects, their relations, and manipulations. These problems are characterized by variable state and action spaces, and finding a fixed-length representation, required by most existing RL methods, is difficult, if not impossible. We present a deep RL framework based on graph neural networks and auto-regressive policy decomposition that naturally works with these problems and is completely domain-independent. We demonstrate the framework in three very distinct domains and we report the method's competitive performance and impressive zero-shot generalization over different problem sizes. In goal-oriented BlockWorld, we demonstrate multi-parameter actions with pre-conditions. In SysAdmin, we show how to select multiple objects simultaneously. In the classical planning domain of Sokoban, the method trained exclusively on 10x10 problems with three boxes solves 89% of 15x15 problems with five boxes.

翻译:我们注重强化学习(RL)问题,这些问题在物体、关系和操纵方面都是自然定义的。这些问题的特点是状态和行动空间各异,而且很难(甚至不可能)找到大多数现有RL方法所要求的固定长度代表。我们提出了一个基于图形神经网络和自动反向政策分解的深度RL框架,这个框架自然与这些问题有关,并且完全独立于域。我们在三个截然不同的领域展示了这个框架。我们报告了该方法的竞争性性能和对不同问题大小的令人印象深刻的零光化。在面向目标的BlockWorld中,我们展示了多参数行动,并预设了条件。在SysAdmin中,我们展示了如何同时选择多个对象的方法。在SysAdmin的经典规划领域,对10x10问题进行了专门培训的方法,三个盒子用5箱解决了15x15问题的89%。