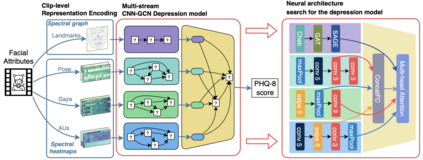

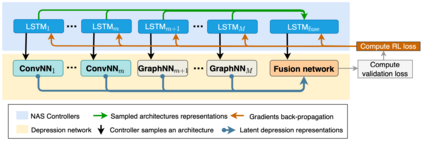

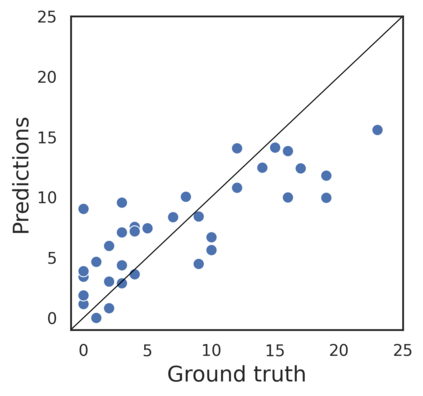

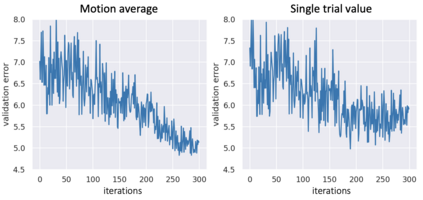

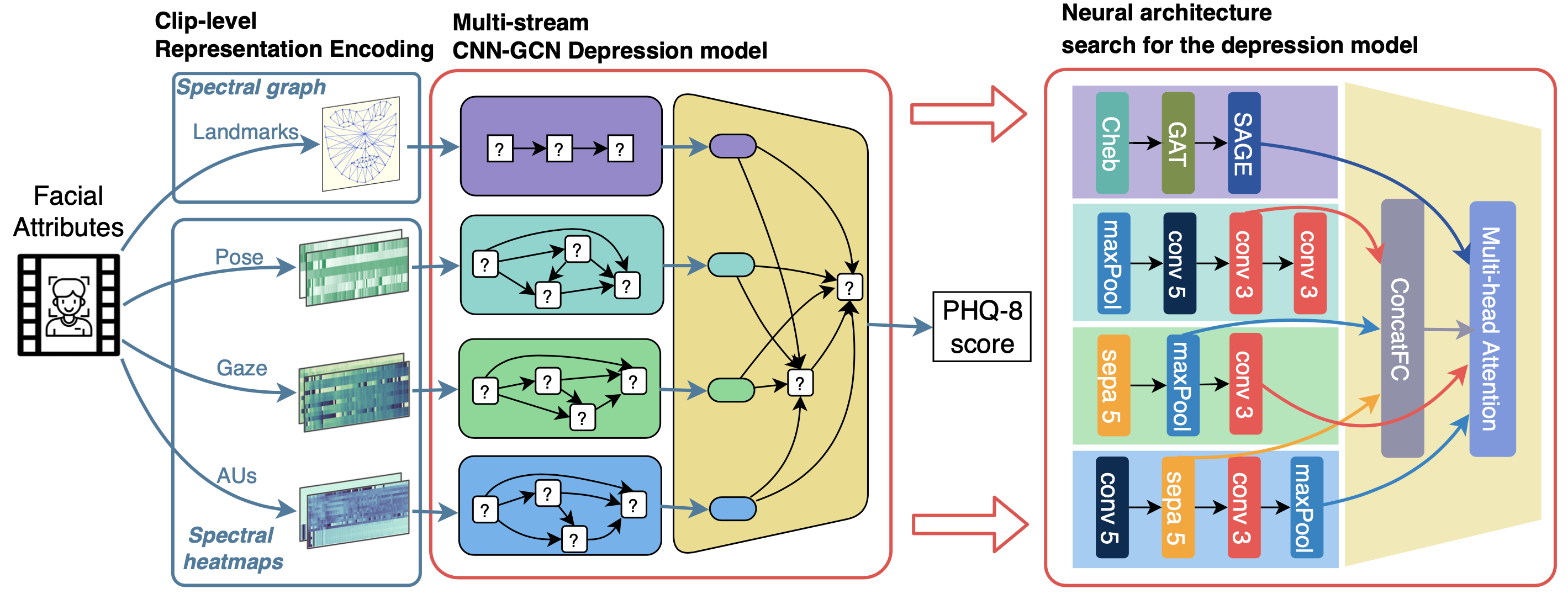

Recent studies show that depression is partially reflected in human facial attributes. Because facial attributes have different data structures and carry different information, existing approaches neither consider the optimal way to extract depression-related features from each attribute nor investigate the best fusion strategy. In this paper, we propose to extend the Neural Architecture Search (NAS) technique to design an optimal model for depression recognition based on multiple facial attributes, one that can be implemented efficiently and robustly on a small dataset. Our approach first conducts a warm-up step on the feature extractor of each facial attribute, which greatly reduces the search space and provides a customized architecture; each feature extractor can be either a Convolutional Neural Network (CNN) or a Graph Neural Network (GNN). We then conduct an end-to-end architecture search over all feature extractors and the fusion network, allowing the complementary depression cues to be optimally combined with less redundancy. Experimental results on the AVEC 2016 dataset show that the model found by our approach achieves breakthrough performance, with 27% and 30% improvements in RMSE and MAE, respectively, over the existing state of the art. In light of these findings, this paper provides solid evidence and a strong baseline for applying NAS to mental health analysis based on time-series data.
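The reported gains are stated as relative improvements in two standard regression errors. As a minimal sketch of how such figures are computed, the Python below defines RMSE, MAE and a relative improvement. The function names, the severity scores and the baseline error are hypothetical, chosen only for illustration; they are not data or results from the paper.

```python
import math


def rmse(y_true, y_pred):
    """Root mean squared error between two equal-length sequences."""
    squared = [(t - p) ** 2 for t, p in zip(y_true, y_pred)]
    return math.sqrt(sum(squared) / len(squared))


def mae(y_true, y_pred):
    """Mean absolute error between two equal-length sequences."""
    absolute = [abs(t - p) for t, p in zip(y_true, y_pred)]
    return sum(absolute) / len(absolute)


def relative_improvement(baseline_error, new_error):
    """Fraction by which new_error is lower than baseline_error."""
    return (baseline_error - new_error) / baseline_error


# Hypothetical depression severity scores (illustrative values only).
y_true = [4.0, 10.0, 15.0, 7.0]
y_pred = [5.0, 8.0, 15.0, 9.0]

model_rmse = rmse(y_true, y_pred)
model_mae = mae(y_true, y_pred)

# Hypothetical baseline RMSE of 2.0, used only to show the arithmetic.
gain = relative_improvement(2.0, model_rmse)
print(f"RMSE={model_rmse:.2f} MAE={model_mae:.2f} relative RMSE gain={gain:.0%}")
```

Under this definition, a 27% RMSE improvement means the new model's RMSE is 0.73 times the baseline's. RMSE penalizes large individual errors more heavily than MAE, which is why the two metrics can improve by different amounts.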
