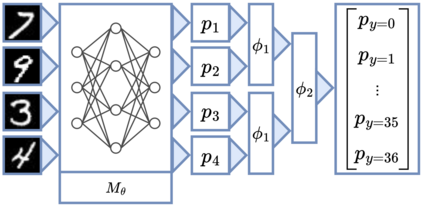

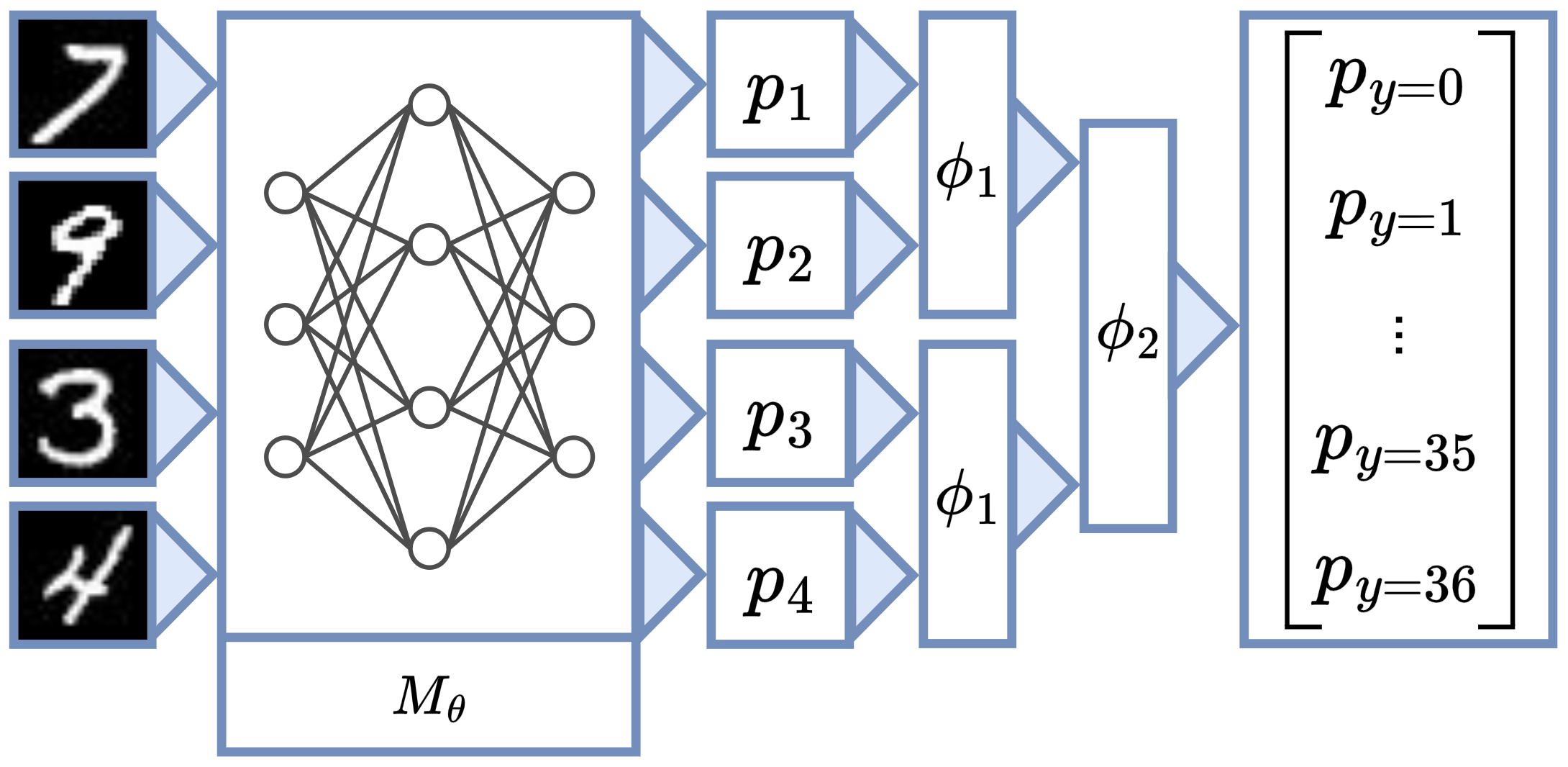

Many computational tasks benefit from being formulated as the composition of neural networks followed by a discrete symbolic program. The goal of neurosymbolic learning is to train the neural networks using only end-to-end input-output labels of the composite. We introduce CTSketch, a novel, scalable neurosymbolic learning algorithm. CTSketch uses two techniques to improve the scalability of neurosymbolic inference: decomposing the symbolic program into sub-programs, and summarizing each sub-program with a sketched tensor. This strategy allows us to approximate the output distribution of the program with simple tensor operations over the input distributions and the sketches. We provide theoretical insight into the maximum approximation error. Furthermore, we evaluate CTSketch on benchmarks from the neurosymbolic learning literature, including some designed to evaluate scalability. Our results show that CTSketch pushes neurosymbolic learning to previously unattainable scales: its neural predictors achieve high accuracy on tasks with one thousand inputs, despite being supervised only on the final output.
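The idea of summarizing sub-programs as tensors and contracting them with input distributions can be illustrated with a minimal pure-Python sketch. It rests on several assumptions that are ours, not the paper's. Each two-input sub-program (here, addition) is summarized by a dense 0/1 lookup tensor `T[a][b][y]` that is 1 exactly when the sub-program maps `(a, b)` to `y`. The neural predictors output independent distributions over the digits 0 to 9. The task is summing four digits, decomposed as `(d1 + d2) + (d3 + d4)`. The helpers `summarize`, `contract`, `sum_of_four`, and `one_hot` are hypothetical. CTSketch additionally compresses each summary tensor into a low-rank sketch, which is what makes its output an approximation. That compression step is not shown, so the result computed here is exact.

```python
from itertools import product


def summarize(program, in_sizes, out_size):
    """Hypothetical exact (unsketched) summary of a two-input sub-program:
    T[a][b][y] = 1.0 if program(a, b) == y, else 0.0."""
    n_a, n_b = in_sizes
    tensor = [[[0.0] * out_size for _ in range(n_b)] for _ in range(n_a)]
    for a, b in product(range(n_a), range(n_b)):
        tensor[a][b][program(a, b)] = 1.0
    return tensor


def contract(tensor, p_a, p_b):
    """Output distribution p(y) = sum over a, b of p_a[a] * p_b[b] * T[a][b][y],
    assuming the two inputs are independent."""
    out_size = len(tensor[0][0])
    p_y = [0.0] * out_size
    for a, pa in enumerate(p_a):
        for b, pb in enumerate(p_b):
            weight = pa * pb
            if weight == 0.0:
                continue  # skip input pairs with no probability mass
            row = tensor[a][b]
            for y in range(out_size):
                p_y[y] += weight * row[y]
    return p_y


# Sub-program summaries: digit + digit (outputs 0..18), partial + partial (outputs 0..36).
T_DIGIT_SUM = summarize(lambda a, b: a + b, (10, 10), 19)
T_PARTIAL_SUM = summarize(lambda a, b: a + b, (19, 19), 37)


def sum_of_four(p1, p2, p3, p4):
    """Distribution of d1 + d2 + d3 + d4, computed as (d1 + d2) + (d3 + d4)."""
    left = contract(T_DIGIT_SUM, p1, p2)    # distribution of d1 + d2
    right = contract(T_DIGIT_SUM, p3, p4)   # distribution of d3 + d4
    return contract(T_PARTIAL_SUM, left, right)


def one_hot(k, n=10):
    """A fully confident digit prediction, for demonstration."""
    v = [0.0] * n
    v[k] = 1.0
    return v


if __name__ == "__main__":
    dist = sum_of_four(one_hot(3), one_hot(4), one_hot(5), one_hot(6))
    print(max(range(len(dist)), key=dist.__getitem__))  # prints 18
```

The decomposition is what keeps the tensors small. A single summary of the four-input program would need 10^4 × 37 = 370,000 entries, while the two summaries above need 10·10·19 + 19·19·37 = 15,257. Because `d1 + d2` and `d3 + d4` depend on disjoint, independent inputs, composing their distributions this way loses no information.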
